Search with OpenSearch Features

Oracle-managed open source technology

Proven open source technology

OCI Search with OpenSearch takes a proven, community-driven open source search solution and adds a management layer on top of it. To get up and running quickly, point applications that use Elasticsearch-based APIs at an OCI Search endpoint. OCI offers OpenSearch v1.2, v2.11, and v2.15; all are compatible with Elasticsearch 7.10.
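
As a minimal sketch of what pointing an Elasticsearch-style client at the service can look like, the Python below builds (without sending) a standard `_search` request against a cluster endpoint. The endpoint URL, index name, and field name are hypothetical placeholders, and authentication is omitted.

```python
import json
from urllib.request import Request

# Hypothetical endpoint; substitute your own cluster's API endpoint.
ENDPOINT = "https://opensearch.example.internal:9200"


def build_search_request(endpoint: str, index: str, field: str, text: str) -> Request:
    """Build (but do not send) a _search request using the standard query DSL."""
    body = {"query": {"match": {field: text}}}
    return Request(
        url=f"{endpoint}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_search_request(ENDPOINT, "products", "title", "wireless headphones")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. Existing client code written against the Elasticsearch 7.10 REST API would typically only need its host setting changed.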

Oracle management layer

OCI is responsible for administrative tasks, including deployment, provisioning, patching, and resizing (scaling up or out). OCI Search enables operators to focus on data, not maintenance.

Backups your way

OCI Search automatically backs up clusters to an OCI Object Storage bucket within the user's tenancy. Alternatively, users can call the OpenSearch Snapshot API to move backups to their own OCI Object Storage bucket. Backup data in OCI Object Storage is secured in flight and at rest, as is all data stored within OpenSearch.
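
The Snapshot API route could be driven along these lines. This sketch only assembles the create-snapshot request; the repository name `my-backups`, the snapshot name, and the index names are hypothetical, and it assumes a snapshot repository backed by your Object Storage bucket has already been registered with the cluster.

```python
import json


def build_snapshot_request(repository: str, snapshot: str, indices: list):
    """Return (method, path, JSON body) for the OpenSearch create-snapshot call."""
    path = f"/_snapshot/{repository}/{snapshot}"
    body = {
        "indices": ",".join(indices),   # comma-separated index names or patterns
        "include_global_state": False,  # leave cluster-wide state out of the snapshot
    }
    return "PUT", path, json.dumps(body)


method, path, body = build_snapshot_request(
    "my-backups", "snapshot-2024-01-01", ["orders", "customers"]
)
print(method, path, body)
```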

Flexible scaling

OCI Search offers a pool of resources based on OCI flexible shapes. Instead of being tied to “fixed” shapes, all customers get fine-grained provisioning control of cores, memory, and storage for their use cases. Only Oracle offers this level of customizability, allowing customers to provision the exact amount of infrastructure required by their workload, thus minimizing waste.

Integrated with OCI Identity and Access Management

OCI Search is fully integrated with Oracle Cloud Infrastructure Identity and Access Management and inherits OCI's simple, integrated, and prescriptive security philosophy.

Encryption

All data at rest and in flight is fully encrypted. OCI Search helps you remain compliant with the Federal Information Processing Standards out of the box.

Index State Management

Customers can use the OpenSearch Index State Management plugin to automate policy-based index lifecycle management, including scheduled actions such as rollovers, merges, and deletions.
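
To make the idea of a lifecycle policy concrete, here is a small sketch that builds an Index State Management policy document with two states: one that rolls an index over once it reaches a given age, and one that deletes it later. The policy ID, index pattern, and age thresholds are illustrative assumptions, not recommended values.

```python
import json


def build_ism_policy(index_pattern: str, rollover_age: str, delete_age: str) -> dict:
    """Build an ISM policy body: roll over in 'hot', then move to 'delete'."""
    return {
        "policy": {
            "description": "Roll over, then delete old indexes (illustrative).",
            "default_state": "hot",
            "states": [
                {
                    "name": "hot",
                    "actions": [{"rollover": {"min_index_age": rollover_age}}],
                    "transitions": [
                        {
                            "state_name": "delete",
                            "conditions": {"min_index_age": delete_age},
                        }
                    ],
                },
                {"name": "delete", "actions": [{"delete": {}}], "transitions": []},
            ],
            # Attach the policy automatically to new indexes matching the pattern.
            "ism_template": {"index_patterns": [index_pattern], "priority": 100},
        }
    }


policy = build_ism_policy("logs-*", rollover_age="7d", delete_age="30d")
# The body would be sent with: PUT /_plugins/_ism/policies/<policy_id>
print(json.dumps(policy, indent=2))
```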

Performance analyzer

For OCI Search deployments with OpenSearch v2.3 and later, customers can use the performance analyzer plugin to query numerous performance metrics for their cluster, including aggregations of those metrics via a REST API.
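
A metrics query of this kind might be assembled as follows. The path and query parameters follow the open source plugin's metrics API; the host and port shown are hypothetical, and how the plugin is exposed on a given OCI cluster is an assumption to verify against your deployment.

```python
from urllib.parse import urlencode


def build_metrics_url(base_url: str, metrics: list, aggregations: list,
                      dimension: str, nodes: str = "all") -> str:
    """Build a performance analyzer metrics URL (one aggregation per metric)."""
    if len(metrics) != len(aggregations):
        raise ValueError("supply exactly one aggregation for each metric")
    query = urlencode(
        {
            "metrics": ",".join(metrics),
            "agg": ",".join(aggregations),
            "dim": dimension,
            "nodes": nodes,
        },
        safe=",",  # keep the comma separators readable in the URL
    )
    return f"{base_url}/_plugins/_performanceanalyzer/metrics?{query}"


url = build_metrics_url(
    "https://opensearch.example.internal:9600",  # hypothetical host and port
    metrics=["Latency", "CPU_Utilization"],
    aggregations=["avg", "max"],
    dimension="ShardID",
)
print(url)
```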

Supported languages

Through the use of language analyzers, which combine tokenizers and filters tuned to a given language, the project supports a number of different languages. See the full list of supported languages for more information.
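
As an illustration, a built-in language analyzer is selected per field in the index mapping. The sketch below builds an index body that analyzes a text field with the `french` analyzer, plus an `_analyze` request body for checking how sample text is tokenized. The index, field name, and sample text are hypothetical.

```python
def build_index_body(field: str, analyzer: str) -> dict:
    """Index body mapping one text field to a built-in language analyzer."""
    return {
        "mappings": {
            "properties": {field: {"type": "text", "analyzer": analyzer}}
        }
    }


def build_analyze_body(analyzer: str, text: str) -> dict:
    """Body for POST /_analyze, which returns the tokens the analyzer produces."""
    return {"analyzer": analyzer, "text": text}


index_body = build_index_body("description", "french")      # PUT /<index>
analyze_body = build_analyze_body("french", "Les chaussures rouges")
print(index_body)
print(analyze_body)
```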

AI and machine learning

Vector search

OpenSearch's vector search feature lets developers build scalable, high-performance vector search applications that manage billions of vectors with subsecond query times. Techniques such as efficient filtering and vector quantization help optimize performance and cost.
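
For orientation, a vector search setup has two parts: an index with a `knn_vector` field and a `knn` query that asks for the nearest neighbors of a query vector. The sketch below builds both request bodies; the field name, the tiny three-dimensional vector, and the value of `k` are placeholders chosen for readability.

```python
def build_knn_index(field: str, dimension: int) -> dict:
    """Index body that enables k-NN and declares a vector field."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {field: {"type": "knn_vector", "dimension": dimension}}
        },
    }


def build_knn_query(field: str, vector: list, k: int) -> dict:
    """Search body that returns the k nearest neighbors of `vector`."""
    return {"size": k, "query": {"knn": {field: {"vector": vector, "k": k}}}}


index_body = build_knn_index("embedding", dimension=3)       # PUT /<index>
query_body = build_knn_query("embedding", [0.1, 0.2, 0.3], k=5)  # POST /<index>/_search
print(index_body)
print(query_body)
```

In practice the dimension matches the embedding model in use, which is typically in the hundreds or thousands rather than three.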

RAG pipeline

The retrieval-augmented generation (RAG) pipeline bridges the gap between the large language model (LLM) and private corporate data. With v2.11 and later, search can be done in natural language thanks to the integration between OCI Search and the OCI Generative AI and OCI Generative AI Agent services leveraging Cohere’s LLM.
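
In open source OpenSearch, a RAG step is configured as a search pipeline with a `retrieval_augmented_generation` response processor. The sketch below builds such a pipeline body. The model ID is a placeholder for a model already registered with the cluster, the field list and prompts are hypothetical, and the exact setup for OCI Generative AI connectors is not shown here.

```python
import json


def build_rag_pipeline(model_id: str, context_fields: list) -> dict:
    """Search pipeline body whose response processor passes hits to an LLM."""
    return {
        "response_processors": [
            {
                "retrieval_augmented_generation": {
                    "tag": "rag_demo",
                    "description": "Illustrative RAG pipeline",
                    "model_id": model_id,                  # registered LLM model
                    "context_field_list": context_fields,  # document fields sent as context
                    "system_prompt": "You are a helpful assistant.",
                    "user_instructions": "Answer concisely using the supplied context.",
                }
            }
        ]
    }


pipeline = build_rag_pipeline("<your-model-id>", ["text"])
# The body would be sent with: PUT /_search/pipeline/<pipeline_name>
print(json.dumps(pipeline, indent=2))
```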

Machine learning framework and multimodal search

OpenSearch's ML framework streamlines model management and offers seamless integration of neural search models, including text and image multimodal search. It enables contributors to easily create AI integrations and supports advanced search capabilities such as conversational and hybrid search.
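
Hybrid search combines lexical and semantic relevance scores for the same query. As a simplified illustration of the idea (not OpenSearch's exact implementation), the sketch below applies min-max normalization to each score set and then takes a weighted mean. The document IDs, scores, and weights are made up.

```python
def min_max(scores: dict) -> dict:
    """Rescale scores to the range [0, 1]; equal scores all map to 1.0."""
    if not scores:
        return {}
    low, high = min(scores.values()), max(scores.values())
    if high == low:
        return {doc: 1.0 for doc in scores}
    return {doc: (score - low) / (high - low) for doc, score in scores.items()}


def combine(lexical: dict, semantic: dict, weights=(0.3, 0.7)) -> list:
    """Rank documents by a weighted mean of their normalized scores."""
    lex, sem = min_max(lexical), min_max(semantic)
    combined = {
        doc: weights[0] * lex.get(doc, 0.0) + weights[1] * sem.get(doc, 0.0)
        for doc in set(lex) | set(sem)
    }
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


ranked = combine(
    lexical={"a": 10.0, "b": 5.0, "c": 0.0},  # e.g. keyword-match scores
    semantic={"a": 0.2, "b": 0.9},            # e.g. vector-similarity scores
)
print(ranked)
```

Normalizing first matters because lexical and vector scores are on different scales, so raw values cannot be added meaningfully.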

LangChain integration for AI-powered search

OpenSearch's LangChain integration helps developers build generative chatbots and conversational search experiences by simplifying the use of large language models and vector search. It removes the need for custom middleware and provides features such as the OpenSearch vector store and RAG templates.

Enterprise search

Semantic search

Semantic search using a vector database in v2.15 provides a better way to search diverse data by interpreting language nuances and relationships. It significantly improves the accuracy of search results by leveraging the power of the vector database, k-NN (nearest neighbor) plugin, and retrieval-augmented generation pipeline. There’s no need to create a list of synonyms or manage the heavy indexing of all your content; you can perform semantic searches that take into account the context and intent to deliver more accurate results. And you can search your structured (text) and unstructured (images) content while maintaining the privacy of your data.
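
A semantic query in open source OpenSearch is typically expressed with the `neural` query type, which embeds the query text with a deployed model and then runs a k-NN search on a vector field. The sketch below builds such a search body; the field name, query text, and model ID are hypothetical placeholders, and it assumes documents were embedded into that field at ingest time.

```python
def build_neural_query(vector_field: str, query_text: str, model_id: str, k: int = 10) -> dict:
    """Search body that embeds `query_text` and finds the k closest documents."""
    return {
        "_source": {"excludes": [vector_field]},  # omit raw vectors from the hits
        "query": {
            "neural": {
                vector_field: {
                    "query_text": query_text,
                    "model_id": model_id,
                    "k": k,
                }
            }
        },
    }


body = build_neural_query(
    "passage_embedding", "shoes for running in the rain", "<your-model-id>", k=5
)
print(body)
```

Because matching happens on embeddings, a document about "waterproof trail sneakers" can match this query without any synonym list.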

Conversational search

The built-in conversational service uses generative AI to simplify the user experience. Conversational search is implemented with two components: conversation history, which allows an LLM to remember the context of the current conversation and understand follow-up questions, and RAG, which allows an LLM to supplement its static knowledge base with proprietary or current information.
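
The interplay of conversation history and RAG can be pictured with a small, service-independent sketch: prior turns and retrieved passages are both placed in the prompt so the model can resolve a follow-up such as "How long are they kept?". This is an illustration of the general technique, not the built-in service's implementation; the class, function, and sample text are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Stores prior (question, answer) turns so follow-ups keep their context."""
    turns: list = field(default_factory=list)

    def add_turn(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))


def build_prompt(conversation: Conversation, passages: list, question: str,
                 max_turns: int = 3) -> str:
    """Combine retrieved passages and recent history into one LLM prompt."""
    recent = conversation.turns[-max_turns:] if max_turns > 0 else []
    lines = ["Answer the final question using only the context provided.", "", "Context:"]
    lines += [f"[{i}] {passage}" for i, passage in enumerate(passages, start=1)]
    lines += ["", "Conversation so far:"]
    for past_question, past_answer in recent:
        lines += [f"User: {past_question}", f"Assistant: {past_answer}"]
    lines += [f"User: {question}", "Assistant:"]
    return "\n".join(lines)


chat = Conversation()
chat.add_turn("Which plan includes backups?", "The standard plan includes daily backups.")
prompt = build_prompt(
    chat,
    ["Backups are retained for 30 days on the standard plan."],  # retrieved passage
    "How long are they kept?",
)
print(prompt)
```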

Observability

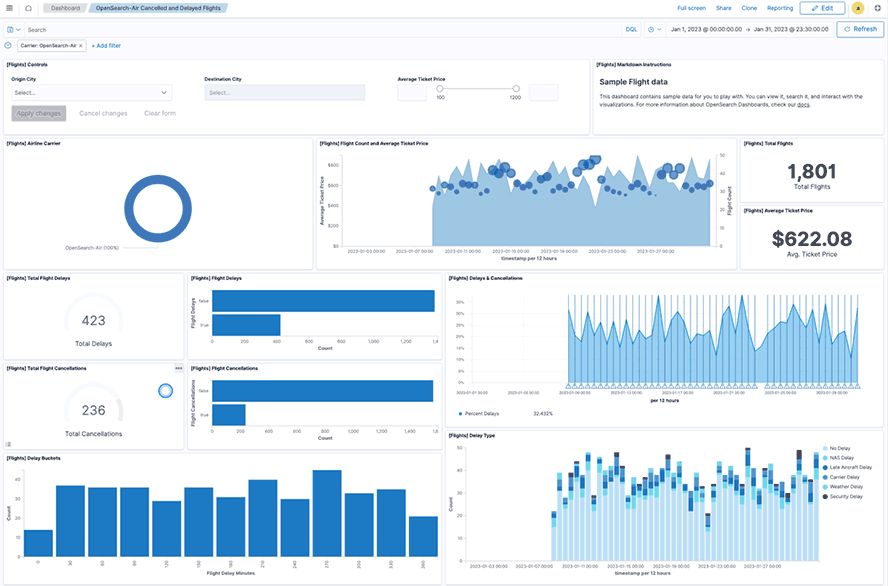

OpenSearch dashboards

You can use OpenSearch observability tools to detect, diagnose, and remedy issues that affect the performance, scalability, or availability of your software or infrastructure. A common open standards–based schema coupled with a piped processing language optimized for observability use cases simplifies the correlation and analysis of logs, metrics, and trace telemetry to support fast time to resolution and a better experience for your end users.
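
To give a feel for the piped processing language (PPL), the sketch below composes a query from pipe-separated stages and wraps it in the request body the PPL endpoint accepts. The index name `app_logs` and the `status` and `service` fields are hypothetical.

```python
def build_ppl_request(index: str, *stages: str):
    """Return (method, path, body) for a PPL query built from pipe stages."""
    query = " | ".join([f"source={index}", *stages])
    return "POST", "/_plugins/_ppl", {"query": query}


method, path, body = build_ppl_request(
    "app_logs",
    "where status >= 500",        # keep only server errors
    "stats count() by service",   # count them per service
)
print(method, path, body["query"])
```

Each stage consumes the output of the one before it, which is what makes the language convenient for step-by-step log investigation.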

Log monitoring

Perform comprehensive monitoring using tools such as log tail, log surround, log patterns, and log-based metrics.

Anomaly detection

Detect anomalies and analyze streaming data with rich transformation and aggregation.

Security Analytics

The new out-of-the-box Security Analytics helps you detect, investigate, and respond to threats in near real time. Gather data from a wide variety of sources, correlate it as illustrated, and detect potential threats early with prepackaged or customizable detection rules that follow a generic, open source format. Create your own notification process so your security team is alerted to potential issues in near real time.

Open source detection rules

Get more than 2,200 prepackaged rules for your security event log sources.

Unified interface

Access user-friendly security threat detection, investigation, and reporting tools.

Automated alerts

Create alerts based on matched detection rules so incident response teams are notified in near real time.

Correlation engine

Configure correlation rules to automatically link security findings and investigate them using a visual knowledge graph.

Customizable tools

Use any custom log source and define your own rules to detect potential threats.