Data Tier Caching for SOA Performance

by Kiran Dattani, Milind Pandit, and Markus Zirn

Published February 2010

Injecting high performance into data services

Part of the Oracle Fusion Middleware Patterns article series.


Introduction

Service-Oriented Architecture (SOA) is changing the application development and integration game. Web service standards make it much easier to reuse existing business logic, regardless of the specific technology in which that logic is implemented. BPEL and other orchestration standards make it easy to string such services together into composite process flows. As a result, SOA makes it easier both to access information and to combine it.

This leads to significant benefits, notably improved agility and enhanced productivity thanks to better interoperability across existing monolithic applications. At the same time, SOA also raises the bar for IT, because business users have higher expectations. Business users, already familiar with consumer Internet applications and mash-ups from Google, Yahoo, and other companies, now demand similar capabilities from their enterprise systems. SOA helps to implement such mash-ups.

The downside to this positive development is performance. Performance and scalability issues have become one of the top concerns when building a SOA application. This is not surprising. The results of a SOA composite application are surfaced in a user interface and are therefore bound by an acceptable response time. Users typically consider any response that takes longer than five seconds simply unusable. In online retailing, studies have even shown that shoppers drop off when response times exceed just one second. Because SOA relies on the verbose XML (Extensible Markup Language) format, and data is converted (marshaled and un-marshaled) whenever web services are called, every call carries overhead. This abstraction places a performance tax on SOA applications.

The more services a business process depends on, the more vulnerable it is. If a business process depends on six data services, each of which achieves 99% uptime, the business process itself could have up to 6% downtime. That translates to as many as 525 hours of unplanned downtime each year. The problem doesn't end there. Although data services are designed to centralize data access, scalability issues arise when many business processes depend on the same set of data services. On top of that, if IT is mandated to meet strict service level agreements (SLAs), it becomes extremely important to build SOA applications that perform on all RASP levels. But what is the best way to build a scalable data access store in the data tier? What is the best way to enable user applications, and in turn business processes, to quickly access data traditionally stored in databases?
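The downtime figures above come from simple arithmetic. The sketch below is illustrative only; it assumes 8,760 hours per year and that the process is down whenever any one of its services is down. It compares the worst case used in the text (outages never overlap, so the 1% downtimes add up) with the estimate for services that fail independently.

```java
public class ProcessDowntime {

    static final double HOURS_PER_YEAR = 365 * 24; // 8,760 hours, ignoring leap years

    /** Worst case: outages of different services never overlap, so downtime adds up. */
    static double worstCaseDowntime(int services, double uptime) {
        return Math.min(1.0, services * (1.0 - uptime));
    }

    /** Independent failures: the process is up only when every service is up. */
    static double independentDowntime(int services, double uptime) {
        return 1.0 - Math.pow(uptime, services);
    }

    public static void main(String[] args) {
        int services = 6;
        double uptime = 0.99;
        double worst = worstCaseDowntime(services, uptime);
        double independent = independentDowntime(services, uptime);
        System.out.printf("worst case:  %.2f%% -> %.1f hours/year%n",
                worst * 100, worst * HOURS_PER_YEAR);
        System.out.printf("independent: %.2f%% -> %.1f hours/year%n",
                independent * 100, independent * HOURS_PER_YEAR);
    }
}
```

Running `main` gives about 525.6 hours for the worst case and about 512.6 hours if the services fail independently. The 525-hour figure is therefore an upper bound, but either way the composite process is down far more often than any single service.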

In this article we will discuss how a mid-tier caching strategy can inject high performance into data services as part of a SOA. We will also illustrate the approach a major pharmaceutical company took to improve the performance of a composite application using an Oracle Coherence Grid solution with Oracle SOA Suite.

SOA Caching Strategies

The performance of any SOA application depends directly on the time it takes to retrieve the underlying data. Data within a SOA generally falls into one of two categories:

- Service state - This data pertains to the current state of the business process/service, i.e. where the current process instance is at this point in time, which processes are active vs. closed vs. aborted, and so on. This data is particularly useful for long-running business processes and is typically stored in a database to provide insulation against machine failures.

- Service Result - This data is delivered by the business process/data service back to the presentation tier. Typically, this data is persistent and stored in backend databases and data warehouses.

Caching can play a very important role in improving the speed to access service state/result data. Caching is used to minimize the amount of traffic and latency between the service using the cache and underlying data providers. In common with any caching solution, caching design for a business service must consider issues such as: how frequently the cached data needs to be updated, whether the data is user-specific or application-wide, what mechanism to use to indicate that the cache needs updating, and so on.

It is possible to cache both types of data: service state and service result.

- Service State Caching allows you to share service state data between services in a business process in memory.

- Service Result Caching allows you to cache results from frequently accessed business services or data services.

Figure 1: SOA Composite App can boost performance by caching data services and business services data

Figure 1 illustrates how a composite SOA application can be architected for better performance by implementing caching at two layers:

- The Upper cache layer caches both business service state as well as business service result (i.e. results from data service layer)

- The Lower cache layer in turn caches both the data service state (if needed) as well as application data coming from underlying databases and applications.

Let's look at how this works for the data services layer. The idea is to add a cache lookup immediately before the data source access, short-circuiting the data source access on a cache hit, and to add a cache update immediately after the data source access. The first time a service is called, the result is cached and served to the consumer. On subsequent calls, the application logic looks in the cache first and retrieves the data directly from there, if it is available. This technique is called cache-aside.

Figure 2: Cache-aside architecture can avoid expensive database access
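The cache-aside pattern described above fits in a few lines of Java. This is a minimal, single-JVM sketch, not the Coherence-based implementation used later. A `ConcurrentHashMap` stands in for the distributed cache, and the `loader` function stands in for the expensive data-service or database call. The class name `CacheAside` is hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Minimal cache-aside wrapper: check the cache first, fall back to the source on a miss. */
public class CacheAside<K, V> {

    private final Map<K, V> cache = new ConcurrentHashMap<>(); // stand-in for a distributed cache
    private final Function<K, V> loader;                        // the expensive data-source access

    public CacheAside(Function<K, V> loader) {
        this.loader = loader;
    }

    public V get(K key) {
        V cached = cache.get(key);
        if (cached != null) {
            return cached;              // cache hit: short-circuit the data source
        }
        V value = loader.apply(key);    // cache miss: go to the data source
        if (value != null) {
            cache.put(key, value);      // update the cache after the data-source access
        }
        return value;
    }
}
```

Two concurrent misses on the same key may both call the loader. Cache-aside usually tolerates this, because the second `put` simply overwrites the first with equivalent data, and it avoids holding a lock for the duration of a slow data-source call.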

With data located in memory in the middle tier, data access is much faster, enabling more-intelligent services and faster response times. The primary goal is to avoid as many performance-degrading database accesses as possible by asking the question: "Does this information have to be real-time, or is near real-time good enough?" Where multiple invocations of a service over a short time are likely to return the same result, relaxing the requirement to near real-time data served from a cache will significantly improve performance.
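One common way to make "near real-time" concrete is to bound how stale a cached entry may be. The sketch below extends cache-aside with a time-to-live (TTL). The fixed per-entry TTL and the injectable clock, which makes the behavior testable, are assumptions for illustration. This is not the configuration used later in the article, which disables expiry.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

/** Cache-aside with a time-to-live: entries older than ttlMillis are reloaded. */
public class TtlCache<K, V> {

    private static final class Entry<T> {
        final T value;
        final long loadedAt;
        Entry(T value, long loadedAt) { this.value = value; this.loadedAt = loadedAt; }
    }

    private final Map<K, Entry<V>> cache = new ConcurrentHashMap<>();
    private final Function<K, V> loader;
    private final long ttlMillis;       // maximum acceptable staleness
    private final LongSupplier clock;   // e.g. System::currentTimeMillis

    public TtlCache(Function<K, V> loader, long ttlMillis, LongSupplier clock) {
        this.loader = loader;
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    public V get(K key) {
        long now = clock.getAsLong();
        Entry<V> e = cache.get(key);
        if (e != null && now - e.loadedAt < ttlMillis) {
            return e.value;              // fresh enough: serve near real-time data
        }
        V value = loader.apply(key);     // missing or stale: reload from the source
        if (value != null) {
            cache.put(key, new Entry<>(value, now));
        }
        return value;
    }
}
```

The TTL is the business answer to the question above. A few seconds may be fine for browsing availability, while a booking confirmation would bypass the cache entirely.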

Cache-aside is an important performance-enhancing strategy, specifically for SOA applications that primarily read data from multiple different backend sources. A typical scenario involves assembling a 360-degree view of a customer across multiple data stores.

Cache-aside is common in the travel industry, where, for example, booking data is stored, nearly in real-time, in a cache. Allowing a database hit for every instance of someone browsing for hotel nights or airline seats would translate into scalability issues. Sometimes, this even requires a look-up in a third party system, with each look-up resulting in a fee. Hence, most browsing for trips happens with near real-time data served from a cache. You can use the same technique to speed up your SOA application.

Providing a 360-Degree Customer View

A very large, research-based pharmaceutical company faced the challenge of customer information spread across multiple data stores. Additional back-end systems had been added through geographic expansion and through mergers and acquisitions on the business side. Customer information sat in multiple transactional CRM and ERP systems, in Master Data Management stores, and in business intelligence data warehouses. This environment made it difficult to deliver a 360-degree view of a given customer, a view that reflected all available information from past customer interactions. As a result, the company's sales force constantly struggled to get up-to-date information on customers (i.e. physicians). Aggregating and filtering lists of information across multiple data sources for any given physician posed a significant challenge.

To further aggravate the problem, sales people needed real-time access to customer information, from anywhere. Imagine a scenario in which a sales rep is talking to a physician and has to recommend new products based on the physician's specialty, research, and buying pattern. Lack of this information can hamper the conversation quality as well as the chance to up-sell/cross-sell. Hence, it was necessary that the new solution deliver a real-time experience to its sales force on their mobile devices.

The pharmaceutical company quickly realized it had to build an agile and fully configurable data integration solution to deliver a 360-degree view of the health care provider. The solution needed a multi-channel, SOA- and Web 2.0-based presentation layer and had to search for a customer across Healthcare Project Management (HCPM), Primary Care Case Management (PCCM), marketing/campaign management (UNICA), CRM (Siebel), Finance (Oracle e-Business Suite), Master Data Management (Siperian), and several other data sources.

Solution using Oracle SOA Suite and Oracle Coherence

This solution was architected to build the "Get Customer Activity" composite application, which provided the following features:

- Initiate and retrieve data from multiple data sources concurrently

- Return a list of candidate customers based on search criteria

- Select a customer from the candidate customer list and return an aggregated and filtered set of activity information specific to that candidate customer across multiple data sources (Betsy, USDW, and PubMed)

- Select a customer activity to view activity detail and return activity information detail specific to the customer

- Limit user visibility to data based on entitlement criteria (activity, channel, and territory)

- Return phonetically matched data.
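The first feature, querying several data sources concurrently and merging the results, can be illustrated with `CompletableFuture`. In the actual solution this fan-out was orchestrated by Oracle BPEL PM. The Java version below is only a sketch. The class name, the source names, and the `query` function are hypothetical stand-ins for the data services.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.BiFunction;

/** Queries every source in parallel and merges the activity lists into one view. */
public class ActivityAggregator {

    public static List<String> aggregate(List<String> sources, String customerId,
                                         BiFunction<String, String, List<String>> query) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, sources.size()));
        try {
            List<CompletableFuture<List<String>>> calls = new ArrayList<>();
            for (String source : sources) {
                // Each source is queried on its own thread, so total latency is
                // roughly that of the slowest source rather than the sum of all.
                calls.add(CompletableFuture.supplyAsync(
                        () -> query.apply(source, customerId), pool));
            }
            List<String> merged = new ArrayList<>();
            for (CompletableFuture<List<String>> call : calls) {
                // join() waits for the result; a failed source surfaces as a CompletionException
                merged.addAll(call.join());   // results are kept in source order
            }
            return merged;
        } finally {
            pool.shutdown();
        }
    }
}
```

Fan-out shortens the wait to the slowest source, but it still touches every backend on every request. That is why the data-service results are worth caching as well.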

Figure 3: GCA Composite app displaying consolidated customer information

The pharmaceutical company selected Oracle SOA Suite for process and data integration and Microsoft Sharepoint to provide a Web 2.0 layer to deliver the end information to the sales reps.

The company also decided to pursue a caching strategy as a performance boost for SOA data services. However, the solution would have to be capable of meeting three important caching needs:

- The solution would have to accommodate a data service cache size that could easily exceed the memory available on one box

- In anticipation of gradual, global adoption, the solution would have to be capable of linear scalability through the addition of servers to the distributed cache

- The solution would have to leverage the existing set of commodity servers in the company's SOA mid-tier in the most efficient manner possible for caching.

In short, the company needed a solution that could combine RAM memory available across multiple heterogeneous servers into a distributed shared memory pool. Based on these requirements, the company selected Oracle Coherence to distribute service data across multiple heterogeneous servers and coordinate that data across nodes in the clustered cache.
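Pooling memory across servers works by partitioning the key space, so that each server owns a share of the entries and each added server adds capacity. The sketch below is a deliberately simplified illustration of hash partitioning with a fixed number of partitions. It is not Coherence's actual implementation, which also maintains backup copies and rebalances partitions as members join or leave. The partition count and node names are assumptions.

```java
import java.util.List;

/** Simplified hash partitioning: key -> partition -> owning node. */
public class PartitionMap {

    private final int partitionCount;
    private final List<String> nodes;

    public PartitionMap(int partitionCount, List<String> nodes) {
        this.partitionCount = partitionCount;
        this.nodes = List.copyOf(nodes);
    }

    /** A key always hashes to the same partition, regardless of cluster size. */
    public int partitionFor(Object key) {
        return Math.floorMod(key.hashCode(), partitionCount); // floorMod keeps negative hashes in range
    }

    /** Partitions are assigned round-robin over the nodes currently in the cluster. */
    public String ownerOf(Object key) {
        return nodes.get(partitionFor(key) % nodes.size());
    }
}
```

Because the key-to-partition mapping never changes, adding servers moves data a whole partition at a time. The round-robin ownership rule here is the simplest possible one. A production data grid also tries to minimize how many partitions have to move.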

Figure 4: "Get Customer Activity (GCA)" Composite Application using Oracle SOA Suite and Oracle Coherence

The GCA composite application provides important information on a specific customer activity through the following process:

- Oracle BPEL PM orchestrates business services (GetCustomer Spain, GetCustomer Germany, etc.)

- The business services invoke Oracle ESB data services (getActivities, getCustomers by region, getCustomeractivity by Applications)

- The data services in turn extract required data (through the security and governance layer) from relevant data sources (Siebel, UNICA, Siperian, etc.) and return the data back to Oracle BPEL PM

- Oracle BPEL PM eventually surfaces the result to the sales rep on a Sharepoint portal.

Figure 5: The GetCustomers (Germany) process flow

The speed with which the data is delivered to the sales rep's PDA depends on the speed with which the data services can retrieve data from the underlying data layer. Let's examine the role Oracle Coherence plays in this process.

BPEL - Coherence Integration Architecture

Within the GCA Composite Application, Oracle Coherence is used to cache the data service results data. Since this data doesn't change frequently, Oracle Coherence serves the data from memory rather than from the data source via a database call.

Figure 6: Interaction between BPEL PM, Coherence and ESB

Figure 6, above, illustrates the sequence flow between BPEL PM, Coherence, and ESB for extracting customer activity information. GetCustomer is a BPEL-based business service. Such business services would typically call the GetCustomerActivity data service (at the ESB layer), which in turn extracts the required data from the underlying data sources.

But because Coherence is used to cache the result data from the ESB data service, the sequence is a bit different. The first time the GetCustomer service is called, the result from GetCustomerActivity is cached and served to the consumer. On subsequent calls, the GetCustomer BPEL service looks in the cache first and retrieves the data directly from there, if it is available. Caching CustomerActivity data in memory in the middle tier speeds up data access.

How exactly should Coherence be used from BPEL, the SOA orchestration language? Given that the pharmaceutical company's goal for mid-tier caching was scalable performance, the architecture could not compromise efficiency. Oracle Coherence offers a Java API, so a native call from BPEL to Coherence was preferred over a standard web service interface. The performance overhead of invoking web service operations is several orders of magnitude larger than that of invoking native Java classes, because marshaling and un-marshaling XML, processing SOAP envelopes, and similar steps are expensive operations.

Figure 7: Refined Approach with WSIF Binding

Native connectivity to Java resources is not a standard feature of BPEL, but Oracle BPEL Process Manager (PM) supports the Web Services Invocation Framework (WSIF) for this purpose, which requires no modifications or extensions to BPEL code. A detailed description of how to use WSIF with Oracle BPEL Process Manager is available in the Oracle BPEL Cookbook. In our tests, WSIF bindings to Oracle Coherence delivered order-of-magnitude performance improvements over standard web service interfaces.

Integrating Oracle BPEL PM with Oracle Coherence

Let's take a quick look at the GetCustomer BPEL process. As seen in Figure 8 (below), this process first checks the cache (see also the condition in Figure 10). If the cache returns no result, the process calls the underlying data service; otherwise, the results are returned from the cache.

Figure 8: BPEL PM Process (GetCustomer) Calls Coherence Cache

Partner links define how different entities (in this case the Coherence Web Service) interact with the BPEL process. Each partner link is related to and characterized by a specific partnerLinkType. (See Figure 9.)

InvokePagingCacheService internally calls the Coherence WSIF web service. The partnerLinkType is defined in the WSDL file.

Figure 9: PagingCacheService Partnerlink

Figure 10 (below) illustrates how the conditional expression is defined based on the value of the cache retrieved from PagingCacheService.

Figure 10: Condition to check whether cache is empty

Now that we've seen how a BPEL process invokes the Coherence cache service, let's look at how this cache service is created. Briefly stated, the process involves creating a Java application that reads from and writes to the cache. We then wrap this Java application as a web service to be called from the BPEL process.

From an implementation perspective, the following steps are necessary:

- Create the Schema for Request and Response and WSDL for Cache Service

- Create Java Objects from the Schema

- Create Java implementation class for Cache Service

- Create coherence_config.xml to configure Coherence

- Package coherence.jar and tangosol.jar within BPEL Jar

- Deploy and verify the process

1. Create the Schema for Request and Response and WSDL for Cache Service

Because the BPEL process communicates with other Web services, it relies heavily on the WSDL description of the Web services invoked by the composite Web service.

Depending on the Customer schema, the search request and response schemas have to be prepared. For example, a Customer search request will have firstname, lastname, search type, etc. The Response will have a list of Customers, with each customer's address, purchased products, etc.
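When the request carries search criteria rather than a single ID, the cache key has to be derived deterministically from the request so that identical searches hit the same entry. The sketch below assumes hypothetical field names (first name, last name, search type) plus a territory value. Because results are filtered by entitlement (activity, channel, and territory), including the caller's entitlement scope in the key is one way to keep a cached result from being served to a user who should not see it.

```java
import java.util.Locale;
import java.util.Objects;

/** Builds a normalized, deterministic cache key from (hypothetical) customer search criteria. */
public final class CustomerSearchKey {

    private CustomerSearchKey() {}

    public static String of(String firstName, String lastName,
                            String searchType, String territory) {
        // Assumes the fields never contain the '|' separator themselves.
        return String.join("|",
                normalize(lastName),
                normalize(firstName),
                normalize(searchType),
                // Entitlement scope: users in different territories see differently
                // filtered results, so they must not share a cache entry.
                normalize(territory));
    }

    /** Normalize so that "Smith " and "smith" map to the same cache entry. */
    private static String normalize(String s) {
        return Objects.toString(s, "").trim().toLowerCase(Locale.ROOT);
    }
}
```

Normalizing before building the key raises the hit rate. Keeping entitlement in the key trades some hit rate for correctness.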

Figure 11: WSDL for Coherence Cache Service

2. Create Java Objects from the Schemas

Using schemac (the utility bundled with SOA Suite), Java classes are generated from the schemas and then compiled.

3. Create the Java implementation class to call Coherence

This is the Java application the BPEL PM service will call via the WSIF interface. Here's a quick snapshot of the Java code you'll need to read from the Coherence cache and return the results to the BPEL PM service. The pertinent sections are explained in the comments.

```java
import com.tangosol.net.CacheFactory;
import com.tangosol.net.NamedCache;

// CacheReadRequestType, CacheReadResponseType, CacheReadResponse, their *Factory
// classes, CustomerQueryResultSet and the fault classes come from the Java
// classes generated from the cache service schema in step 2.

public class CacheServiceImpl {

    static NamedCache _customerQueryResultCache = null;

    static {
        /** Point Coherence at the cache configuration file, which sets parameters
            such as cache expiration and eviction policy **/
        System.setProperty("tangosol.coherence.cacheconfig", "coherence_config.xml");

        Thread thread = Thread.currentThread();
        ClassLoader loaderPrev = thread.getContextClassLoader();
        try {
            // Temporarily use the class loader that loaded the Coherence classes;
            // the original loader is restored in the finally block.
            thread.setContextClassLoader(com.tangosol.net.NamedCache.class.getClassLoader());

            /** Obtain the cache instance from CacheFactory using getCache().
                The cache name GetCustomer.cache is mapped to a distributed
                caching scheme. **/
            _customerQueryResultCache = CacheFactory.getCache("GetCustomer.cache");
            System.out.println("cache: " + _customerQueryResultCache);
        } finally {
            thread.setContextClassLoader(loaderPrev);
        }
    }

    public CacheReadResponseType cacheRead(CacheReadRequestType input)
            throws ApplicationFault, SystemFault, BusinessFault {
        String key = input.getInput().getKey();

        /** A NamedCache is a java.util.Map whose entries are shared across the
            nodes of a cluster. Use the key (in this case the customer id) to
            retrieve the cache entry with get(). **/
        CustomerQueryResultSet customerQueryResultSet =
                (CustomerQueryResultSet) _customerQueryResultCache.get(key);
        System.out.println(" Requested key Id is : " + key);
        System.out.println(" Read from cache is : " + customerQueryResultSet);

        CacheReadResponseType cacheResponseType = CacheReadResponseTypeFactory.createFacade();
        CacheReadResponse cacheResp = CacheReadResponseFactory.createFacade();
        // On a cache miss the result set is left empty; the BPEL condition
        // detects this and falls back to the data service.
        if (customerQueryResultSet != null) {
            cacheResp.setCustomerQueryResultSet(customerQueryResultSet);
        }
        cacheResponseType.setResult(cacheResp);
        return cacheResponseType;
    }
}
```

4. Create coherence_config.xml to configure Coherence

Now let's set up the caching configuration, including the eviction policy and cache expiration. We'll use the distributed cache with expiry set to 0, so that the cached data never expires. As a consequence, the application is responsible for updating the cache when the data changes in the database.

```xml
<distributed-scheme>
  <scheme-name>default-distributed</scheme-name>
  <service-name>DistributedCache</service-name>
  <backing-map-scheme>
    <local-scheme>
      <scheme-ref>default-eviction</scheme-ref>
      <!-- Eviction policy set to LRU, so that the least recently used cache
           entries are evicted to make room for new ones -->
      <eviction-policy>LRU</eviction-policy>
      <!-- high-units of 0 means no size limit, so LRU eviction only takes
           effect once this is set to a positive value -->
      <high-units>0</high-units>
      <!-- Expiry set to 0, so that the cached data never expires. -->
      <expiry-delay>0</expiry-delay>
    </local-scheme>
  </backing-map-scheme>
</distributed-scheme>
```
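Because `expiry-delay` is 0, an entry stays in the cache until it is replaced or evicted, so the write path has to keep the cache consistent with the database. The sketch below shows one common approach. It assumes a hypothetical `Repository` interface: write to the database first, then remove the cached entry so that the next read reloads it through the cache-aside path. A plain `java.util.Map` stands in for the `NamedCache` (which itself extends `Map`), keeping the example self-contained.

```java
import java.util.Map;

/** Keeps a never-expiring cache consistent by invalidating entries on update. */
public class CacheInvalidatingWriter<K, V> {

    /** Hypothetical persistence interface for the underlying database. */
    public interface Repository<K, V> {
        void save(K key, V value);
    }

    private final Map<K, V> cache;             // e.g. the NamedCache from CacheServiceImpl
    private final Repository<K, V> repository;

    public CacheInvalidatingWriter(Map<K, V> cache, Repository<K, V> repository) {
        this.cache = cache;
        this.repository = repository;
    }

    public void update(K key, V value) {
        repository.save(key, value); // 1. the database remains the source of truth
        cache.remove(key);           // 2. drop the stale entry; the next read reloads it
    }
}
```

Invalidating, rather than writing the new value into the cache, avoids caching a value that differs from what the database actually stored. The cost is one extra database read on the next request. A small window remains in which a concurrent reader can repopulate the cache with the old value, which is acceptable only where near real-time data is good enough.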

The coherence_config.xml file should be put in a Jar file and added to the project libraries. (See Figure 9.)

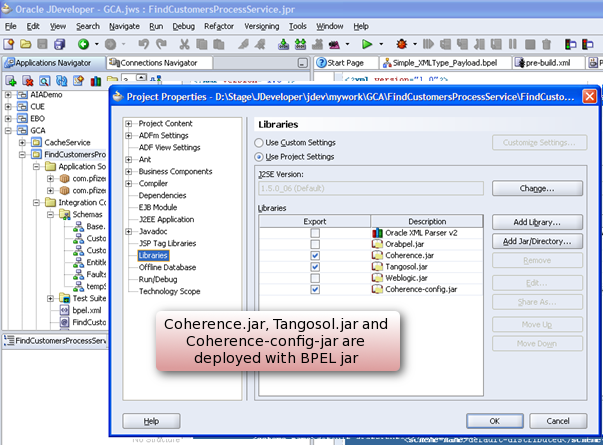

5. Package coherence.jar and tangosol.jar within BPEL Jar

Coherence.jar and tangosol.jar are now added to the project library and are deployed with the BPEL Jar under BPEL-INF/lib. (See Figure 12.)

Figure 12: Coherence jar files are deployed with BPEL Jar Files

6. Deploy and Verify the Process

Once the process is deployed and the first call is made, the process starts the cache server and creates the cache GetCustomer.cache. This cache is mapped to the distributed caching scheme, and data is read from and written to this cache instance.

Conclusion

By implementing mid-tier caching using Oracle Coherence, our composite app experienced significant benefits:

Figure 13: Coherence Cache reads were 30-50% faster

- Internal performance tests (see Figure 13) revealed response times 30% to 50% faster for data coming from cache. And since the data is closer to the App server, it's easily available and avoids the database trip.

- Increased fault tolerance in the GCA composite application. Data is always available in the event of a failure of any single machine or server.

- Improved utilization of server hardware and more efficient use of server capabilities.

- Reduced data marshalling/un-marshalling during data service calls.

As this article has demonstrated, the use of sophisticated mid-tier caching solutions can improve the performance of SOA applications. A well-designed caching product shields the complex mechanics of a distributed and clustered cache, while allowing SOA architects/developers to focus on leveraging the cache. This results in additional benefits, including improved reliability through redundancy from the cache, and linear cache scalability from the shared-nothing architecture.

Kiran Dattani is Director of Architecture, Finance and Procurement for a major pharmaceutical company, where he is responsible for Global Architecture and Enterprise Integration projects. An accomplished speaker, he is a recognized expert in enterprise integration and supply chains in the life sciences and manufacturing industries.

Milind Pandit is a SOA Architect with Oracle Consulting Services, where he assists customers in deploying SOA-based architecture. He has eleven years of experience in software design, development, and implementation involving Enterprise Application Integration, J2EE, and Object-Oriented Analysis and Design.

Markus Zirn is a Vice President of Product Management for Oracle Fusion Middleware. He is the editor of The BPEL Cookbook, the author of several articles on SOA and related topics, and a frequent speaker at leading industry and analyst conferences.