Data Services in SOA: Maximizing the Benefits in Enterprise Architecture


by Joan Lawson

Taking a horizontal view of the organization at a data level in order to identify and implement data management functionality as services

Published April 2009

Leading enterprises are increasingly identifying and implementing application functionality as services that can participate in orchestrated business processes. These services are often vertical in nature, specific to a business function. The value obtained from this flexibility, however, is gated by the quality of the data that flows through these services. Therefore, the value of such services is significantly enhanced by taking a wider perspective when viewing corporate data. In this article, I will encourage organizations to take a horizontal view of the organization at a data level, to identify and implement data management functionality as services, and to store the data such that it meets broader corporate requirements for business information.

Service-Oriented Architecture Background

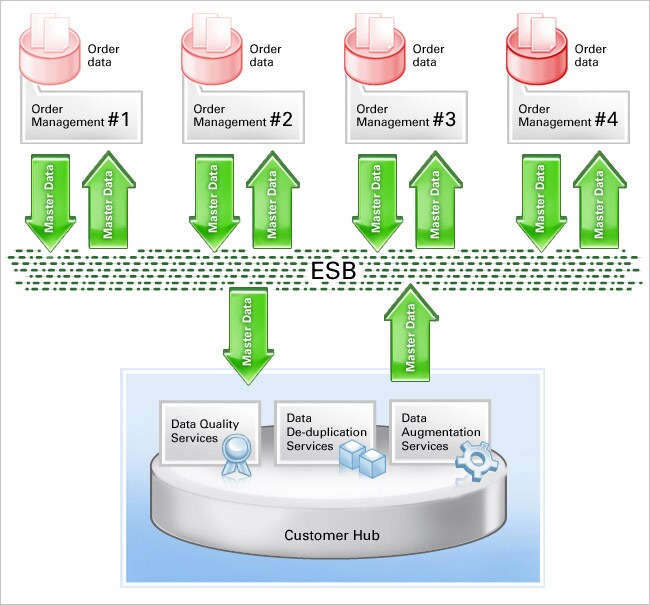

A service-oriented architecture (SOA) is a business-centric architectural approach that supports integrating business data and processes by creating reusable components of functionality, or services. Traditionally, services have been identified at a business function level. For example, a business might have four divisions, each with a distinct system for processing orders. An order processing service would be created for each division, and each service could then be used as one step in a larger business order process. This architecture supports an agile business. When a division's operating model changes or a new division is added, the business process can readily be changed without a large implementation effort.

Figure 1: Multiple Order Management applications and customer data repositories

In addition to reducing duplication of logic with the creation of reusable services, a SOA implies governance of the services to ensure that the services are designed, developed, and consumed in alignment with business and architectural requirements. Governance also includes monitoring and measuring the service use throughout the lifecycle of the service. This gives IT the tools to ensure that SOA delivers measurable business value.

Data in SOA

Historically, enterprise IT has focused on data. More recently, the focus has shifted to SOA. However, there has been little discussion of how the two areas interrelate. A data-centric SOA allows enterprises to leverage new and existing IT investments to support business requirements, transition between applications, and deliver trusted business information. As stated by Eric Thoo of Gartner in his paper "Market Trends: Data Services" (2008):

To achieve the business benefits expected from SOA initiatives, organizations must include a strong data-oriented perspective in their work. Focusing only on the modularization, reuse, and composition of application logic is not enough – SOAs also demand that firms address data issues.

"Data issues" is a broad term that comprises data management, data quality, and data movement. Without a focus on data, a SOA is little more than an improved design pattern for integrations. With a focus on data, a SOA enables a company to have high-quality, consistent data at the right place at the right time. In addition, it pushes the concepts of SOA into the data tier, creating reusable data services that can be leveraged in a business process orchestration or a composite application.

Returning to the example of multiple order processing services given above, incorporating data services allows an enterprise to decentralize applications while centralizing the data management services and abstracting the data repositories.

Figure 2: Application services exposed to an ESB

The Value of Data Services

Data services extend the value of business services in a SOA. Data content and access become consistent and trusted, and the complexity of accessing heterogeneous data is hidden from the consumers of the data.

The advantages to the business community include the consistent implementation of data management rules across the data domains, control of data access and security, and provisioning of data to the right application at the right time. These services are the basic building blocks of achieving customer intimacy, a single view of the customer that enables a high-quality, seamless customer experience. This benefits not only the business users but also the customers and partners with whom the business regularly interacts. Data quality is ensured, and data accessibility is optimized.

Data services reduce risk and redundancy in the technology landscape. The creation of data services ensures that there is one source of truth for both data access and data management. Data management code is not duplicated throughout the applications. In addition, SOA governance provides invaluable tools for mapping the relationships and dependencies of services, processes, policies, and applications. Adding data services to the topology gives a clear picture of the alignment of the business, data, and application layers. Moreover, service performance and compliance are reportable across the landscape.

My experience is that combining Oracle SOA Suite and Oracle Data Integration Suite to build and implement data services yielded a reduction of development costs by 30 percent, improved speed of data handling by 50 percent, and improved business process execution times by 70 percent. The following picture describes the architectural details of a SOA-based data integration infrastructure.

Figure 3: Data and applications services exposed to the ESB

Data Services for Master Data in a SOA

Data quality services

Data quality services use algorithms and predefined business rules to clean, reformat, and de-duplicate low-quality business data. Traditionally, data quality processing has taken place when data is moved between the online transaction processing (OLTP) application and a data warehouse or mart before being made available to a BI environment. This approach is not optimal for the following reasons:

- Poor-quality data exists, and will continue to exist, in the OLTP applications.

- Improved data in the data warehouse is inconsistent with data in the OLTP application. This results in discrepancies between transactional reporting sourced from the applications and BI-based reporting sourced from the data warehouse.

By incorporating data management services into data entry and business processes, data is improved in the source application. The data becomes more valuable and usable at the source and is clean and consistent with the data warehouse.

Based on Figure 3:

- Master data is captured in the various front-end and back-end business applications.

- Before writing to the application's database, the master data can be passed into a business process that will define one or more data quality services to be consumed to clean, de-duplicate, reformat, and/or enhance the master data.

- After the data is improved, the business process will then consume a data distribution service to write the data to the proper data repositories. See the Data Distribution Services section, below.

- Rejected master data can be passed to another business process that can include human workflow steps to correct the data so that it can be distributed to the defined data stores.

With the construction of data quality services, the business rules for cleansing and enhancing data can be incrementally enhanced without affecting the business applications that rely on quality data.
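The flow described above (clean, reformat, de-duplicate, and route rejected records to a correction process) can be sketched in a few lines. This is a minimal illustration rather than the way Oracle Data Quality works: the field names `name` and `email`, the validation rule, and the choice of email as the duplicate key are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class QualityResult:
    accepted: list = field(default_factory=list)   # cleaned, unique records
    rejected: list = field(default_factory=list)   # records needing human review


def standardize(record: dict) -> dict:
    """Collapse whitespace and normalize case on the hypothetical fields."""
    return {
        "name": " ".join(record.get("name", "").split()).title(),
        "email": record.get("email", "").strip().lower(),
    }


def is_valid(record: dict) -> bool:
    """Illustrative rule: a name and a plausible email address are required."""
    email = record["email"]
    return bool(record["name"]) and "@" in email and "." in email.split("@")[-1]


def data_quality_service(records: list) -> QualityResult:
    """Clean, validate, and de-duplicate a batch of customer records."""
    result = QualityResult()
    seen_emails = set()
    for raw in records:
        record = standardize(raw)
        if not is_valid(record):
            result.rejected.append(raw)      # hand off to a human workflow step
        elif record["email"] not in seen_emails:
            seen_emails.add(record["email"])
            result.accepted.append(record)
        # Otherwise the record duplicates an accepted one and is dropped.
    return result
```

Because the rules live in `standardize` and `is_valid`, they can be tightened over time without touching the applications that call `data_quality_service`.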

In a recent project in which I was involved, during the order add process, attributes for new customers were messaged to data services, which cleaned, standardized, and de-duplicated the data and then returned it to the customer master data tables. The data services were consumed by two order add processes: that of the e-commerce environment and that of the customer relationship management (CRM) application. One service centralized the common logic that served both application endpoints.

Data distribution services

After data quality services have been applied to the master data, the data can be distributed based on the enterprise architectural design. Data distribution can be event-based, publishing the data into an integration platform with defined subscribers. Alternatively, data distribution can be process-based, using a BPEL process to distribute the data to the applications that need it. In either case, data services, employed at the target, distribute the data into the necessary data repositories.
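As a rough illustration of the event-based style, the sketch below uses a tiny in-memory publish/subscribe class in place of a real integration platform. The class name `DistributionService`, the topic `customer.master`, and the two target stores are hypothetical and exist only for this example.

```python
from collections import defaultdict
from typing import Callable


class DistributionService:
    """In-memory stand-in for an integration platform's publish/subscribe."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)   # topic -> list of handlers

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, record: dict) -> int:
        """Deliver a copy of the record to every subscriber; return the count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(dict(record))   # copy so targets cannot mutate each other's data
        return len(handlers)


# Hypothetical target repositories, represented here as plain lists.
crm_store: list = []
warehouse_customer_dim: list = []

bus = DistributionService()
bus.subscribe("customer.master", crm_store.append)
bus.subscribe("customer.master", warehouse_customer_dim.append)
```

Calling `bus.publish("customer.master", {"id": 1, "name": "Ada"})` writes the record to both stores. Adding a new target is one more `subscribe` call, and the publishing application is not touched.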

A typical design is to store the master data directly in the business applications. If a data warehouse is a component of the overall design, the consistent data present in the applications ensures the same quality in the data warehouse.

A second approach is to store the data in the business applications and concurrently write the master (dimension) data, in real time, to the data warehouse. This approach maximizes the value of the data that is in the integration layer, reducing the batch processing that is often scheduled off-hours. The master data can either be transformed in flight and loaded into the data warehouse, or it can be loaded into a staging area and then transformed at a later time.

A third approach is master data management (MDM). MDM consolidates and maintains authoritative master data across the enterprise and distributes this master information to all operational and analytical applications as a shared service. The data distribution process can also include invoking a service to write the master data to the MDM data store.

With the construction of data distribution services, target applications can be added or changed without impact to the business applications, because rules for distribution reside in the middleware environment and not in the source application.

For another project in which I participated, we took advantage of this design pattern with the messaging of customer master data to downstream applications and to the customer dimension of the data warehouse. Real-time order data was messaged to an operational data warehouse where it was used together with the customer data to produce a real-time dashboard of order activity.

Data access services

Once stored, data access services provide direct, consistent access to the data stores. The services may be as simple as querying the master data in a single database or as complex as federating data from multiple locations, aggregating data in real time. Many factors need consideration in the implementation of a data access service to ensure optimal performance.

Although the implementation of data access services will vary considerably, the value of this design style is the presentation of a simple query service to consuming applications. The implementation of data access is abstracted so that it decouples and hides the underlying data source from the business applications. The data access services might even perform transformation or aggregation functions to process the data. These, too, are abstracted from the business applications.

The value of a service-oriented approach is readily apparent in data access services. With the construction of data access services, the rules for managing, securing, and provisioning the data are defined and implemented in a centralized layer of services. This layer is modifiable, without impact to the business applications. Data access services enable the highest degree of flexibility, whether changes are made at the application level or the data level. For example, new applications can consume the existing data access services, enforcing the same level of data provisioning without embedding duplicate logic in the new application. At the data level, you can move to an MDM hub without modification to the existing applications. All the code for data access resides in the services.
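The decoupling described here can be sketched as a service that depends only on a small interface, so the back end can change from an application database to an MDM hub without any change in the consuming applications. All class names and field names below (`party_id`, `full_name`, and so on) are hypothetical.

```python
from typing import Optional, Protocol


class CustomerSource(Protocol):
    def fetch(self, customer_id: str) -> Optional[dict]: ...


class ApplicationDatabaseSource:
    """Hypothetical back end: customer rows held in an application database."""

    def __init__(self, rows: dict) -> None:
        self._rows = rows

    def fetch(self, customer_id: str) -> Optional[dict]:
        return self._rows.get(customer_id)


class MdmHubSource:
    """Hypothetical back end: an MDM hub that uses different field names."""

    def __init__(self, golden_records: dict) -> None:
        self._golden = golden_records

    def fetch(self, customer_id: str) -> Optional[dict]:
        record = self._golden.get(customer_id)
        if record is None:
            return None
        # Map the hub's field names onto the shape consumers already expect.
        return {"id": record["party_id"], "name": record["full_name"]}


class CustomerAccessService:
    """The only entry point that consuming applications call."""

    def __init__(self, source: CustomerSource) -> None:
        self._source = source

    def get_customer(self, customer_id: str) -> Optional[dict]:
        return self._source.fetch(customer_id)
```

A consumer that calls `get_customer("42")` receives the same record shape whether the service was constructed with `ApplicationDatabaseSource` or `MdmHubSource`.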

Data federation services

Data federation looks like a data access service on the front end, but on the back end, the abstraction layer points to multiple distributed data stores, where each data store holds only part of a record. For example, to obtain a complete customer record, a data federation service may retrieve data from four different data repositories of four different business applications. Data federation services act like a virtual data source with one virtual table. A data federation service manages the queries to the four repositories, filtering and joining the returned data and returning a single result set from the service.
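A minimal sketch of the joining step follows, assuming each repository can be queried by customer ID and returns only its own fragment of the record. The four repositories and their fields are invented for illustration, and the "first repository wins" rule for conflicting fields is one possible policy, not something the pattern prescribes.

```python
def federate_customer(customer_id: str, repositories: dict) -> dict:
    """Join the partial records held by each repository into one result.

    `repositories` maps a repository name to a lookup function that returns
    the fragment of the customer record stored there, or None if absent.
    """
    merged = {"customer_id": customer_id}
    for lookup in repositories.values():
        fragment = lookup(customer_id)
        if fragment is None:
            continue   # this repository holds nothing for the customer
        for key, value in fragment.items():
            # The first repository to supply a field wins; later ones do not overwrite.
            merged.setdefault(key, value)
    return merged


# Four hypothetical application repositories, each holding part of the record.
orders_db = {"c1": {"last_order": "2009-03-14"}}
billing_db = {"c1": {"credit_limit": 5000}}
crm_db = {"c1": {"name": "Ada Lovelace", "segment": "enterprise"}}
support_db = {}   # no support history for this customer

repositories = {
    "orders": orders_db.get,
    "billing": billing_db.get,
    "crm": crm_db.get,
    "support": support_db.get,
}
```

`federate_customer("c1", repositories)` returns one dictionary that combines the order, billing, and CRM fragments, which is the "one virtual table" view that the consumer sees.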

Data Services for Transactional Data in a SOA

Data services are not limited to the management of master data. An enterprise architecture also benefits from services that are built to obtain and deliver transactional data for purposes of providing actionable information for workers at all levels of the organization. This enables them to take corrective actions when monitoring thresholds are exceeded.

In Claudia Imhoff's article "Operational Business Intelligence - A Prescription for Operational Success" (2007), she states, "Business intelligence has 'invaded' the operational space in a big way, offering inline analytics, real-time or near real-time decision-making support for all employees in the enterprise. Today's BI environment includes three forms of BI: strategic, tactical, and operational."

Employing the concept of operational business intelligence (BI), data from production systems, together with data from a reporting database such as a data warehouse, can be used for real-time tracking, monitoring, and forecasting of business information. Consider operational BI as an end-to-end process layered over unified data services: data quality, data distribution, and data access.

The sources for the continuous acquisition of data for operational BI include the following:

- A business process language, such as BPEL, enables the capture of data and events from business process workflows.

- A messaging system, such as Oracle Service Bus, enables the capture of transactional data messaged to the integration platform.

- Database mechanisms, such as triggers and log scraping, support change data capture (CDC) for data in the OLTP databases.
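The trigger-based form of change data capture in the last item can be illustrated with Python's built-in `sqlite3` module. SQLite is used here only as a convenient stand-in for an OLTP database, and the `orders` and `order_changes` tables are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT);

    -- Change table that the acquisition process reads from.
    CREATE TABLE order_changes (
        change_id  INTEGER PRIMARY KEY AUTOINCREMENT,
        order_id   INTEGER,
        old_status TEXT,
        new_status TEXT
    );

    -- The trigger records every status change made by the application.
    CREATE TRIGGER capture_order_update
    AFTER UPDATE OF status ON orders
    BEGIN
        INSERT INTO order_changes (order_id, old_status, new_status)
        VALUES (OLD.id, OLD.status, NEW.status);
    END;
""")

# The application updates its own table as usual.
conn.execute("INSERT INTO orders (id, status) VALUES (1, 'NEW')")
conn.execute("UPDATE orders SET status = 'SHIPPED' WHERE id = 1")

# The captured change is available without any change to application code.
changes = conn.execute(
    "SELECT order_id, old_status, new_status FROM order_changes"
).fetchall()
```

The application code never writes to `order_changes`; the database captures the change, and a downstream process can read that table and forward the rows to the integration platform.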

Once acquired, operational data can be distributed to one or more physical or in-memory stores for processing:

- Data can be written to a physical operational data store.

- Data can be passed to Business Activity Monitoring and Complex Event Processing services, which can accept tens of thousands of transactional updates per second into a memory-based persistent cache.

- Alternatively, a virtual business view of dispersed data, as in federated data architecture, captures the data that is required to measure the business.

Filtering, correlating, and analyzing data to determine if an alert needs to be generated and presented can include the following:

- Transactional data combined with historical knowledge from the data warehouse

- Business rules execution

- Advanced analytics processing

Disposition of alerts to the business community can include the following:

- A live data dashboard with stoplight reporting

- Initiating a business process (for example, with BPEL) to deliver the alert

- Executing a business process to automate the alert resolution
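The filtering and disposition steps above can be sketched as a rule that compares a live metric with its historical baseline and returns a stoplight status. The ratios of 0.8 and 0.5 are arbitrary illustrative business rules, and `notify` is a placeholder for whatever process actually delivers the alert.

```python
from statistics import mean


def stoplight(current: float, history: list, warn_ratio: float = 0.8,
              alert_ratio: float = 0.5) -> str:
    """Compare a live metric with its historical average.

    Returns "green", "yellow", or "red". `history` must not be empty.
    """
    baseline = mean(history)          # historical knowledge from the warehouse
    if baseline == 0:
        return "green"                # nothing meaningful to compare against
    ratio = current / baseline
    if ratio < alert_ratio:
        return "red"
    if ratio < warn_ratio:
        return "yellow"
    return "green"


def dispatch(status: str, notify) -> bool:
    """Start the alert delivery step only when the status is red."""
    if status == "red":
        notify("Order volume is far below its historical baseline")
        return True
    return False
```

With a history of `[100, 120, 80]` orders per hour, a current value of 90 is green, 70 is yellow, and 40 is red. Only the red status calls `notify`, which in practice might start a business process rather than append to a list.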

Practical Implementation

Using data services in your enterprise architecture requires paying close attention to the details of architectural design and testing. By doing so, performance concerns will be addressed, and in many cases, performance will be optimized by the placement of data in the right location at the right time.

Localize master data objects to collect all data at the system of record, process through data services, and then return to the system of record. By creating a stub of the master data object, there will be no effect on transactional processes while the data services process the master data. In fact, this approach offloads processing from the local application and onto the service processes.

Push as many reporting tasks as possible to the reporting layer, using the data warehouse repositories. In the design presented here, the data warehouse repositories collectively contain the master and transactional data. The movement of report processing to the reporting layer improves the performance of local applications.

Supporting Technology

Oracle SOA Suite and Oracle Data Integration Suite together provide a comprehensive set of components to build, implement, and manage data services in a SOA.

SOA/services integration platform. As defined in the Oracle white paper, Revolution in Agility (2008), successful integration requires a complete platform that delivers the following:

- Business integration, including business process management, which allows each process to be expressed as a "story" (such as order fulfillment)

- A data service architecture, which delivers the content, or the "nouns" (such as customer and product)

- A service-enabled application integration infrastructure, which delivers the functionality, or the "verbs" (such as purchase and ship)

- An aggregator (such as Oracle Service Bus), which connects the nouns and verbs into the desired story (and allows the company to continuously edit the story)

Oracle SOA Suite delivers these components as well as Oracle Application Integration Architecture, which supplies content on top.

Data integration platform. Data quality services are provided through Oracle Data Quality (an Oracle Data Integration Suite component), a rule-based engine that standardizes and cleanses name, address, and other data; validates, repairs, and enriches data; and identifies and processes duplicates.

Oracle Data Integrator Enterprise Edition (an Oracle Data Integration Suite component) is the foundation model used for the design and development of ETL/ELT (extract-transform-load and extract-load-transform) program components. These are the classic data services, taking in a data set, transforming it, and producing an enhanced data set. Oracle Data Integrator Enterprise Edition can transform both single and bulk data payloads. These batch data services can expose web services that allow invocation from the integration platform.

MDM platform. Oracle's master data management platform consolidates and manages master data for provisioning to both OLTP and analytical applications. Oracle's suite includes customer, product, and site hubs, as prebuilt extensible master data models for each of these data objects.

Summary

A horizontal view of data across the enterprise allows you to build a much more flexible and useful service layer across business applications. Abstracting those functions and exposing data services results in data that is of higher quality and available in the right place at the right time; technology components that are reusable and agile; and business applications and processes that are more broadly useful to the enterprise, customers, and partners.

Joan Lawson led Enterprise Architecture programs at Monster Worldwide and other industry-leading companies. After a worldwide implementation of the Oracle SOA Suite, Joan was named Oracle Magazine's 2007 "IT Manager of the Year." Joan was recently selected as an Oracle ACE with expertise in Middleware and SOA. She blogs at joanlawson.blogspot.com.