How to Deploy Oracle RAC on an Exclusive-IP Oracle Solaris Zones Cluster

by Vinh Tran

Published October 2014 (Updated on Sept 2016)

Using Oracle RAC 12c, Oracle Solaris 11.2, and Oracle Solaris Cluster 4.2

How to create an exclusive-IP Oracle Solaris Zones cluster, install and configure Oracle Grid Infrastructure 12c and Oracle Real Application Clusters (Oracle RAC) 12c in the exclusive-IP zone cluster, and create an Oracle Solaris Cluster 4.2 resource for the Oracle RAC instance.

This article describes how to create an exclusive-IP Oracle Solaris Zones cluster in an Oracle Solaris Cluster 4.2 environment on Oracle Solaris 11.2, install and configure Oracle Grid Infrastructure 12c and Oracle RAC 12c in the zone cluster, and create an Oracle Solaris Cluster 4.2 resource for the Oracle RAC instance.

The following table provides links to other articles that describe how to perform similar tasks using other versions of the software.

| Article | Oracle RAC Version | Oracle Solaris Version | Oracle Solaris Cluster Version |
|---|---|---|---|
| "How to Deploy Oracle RAC 11.2.0.3 on Zone Clusters" | 11.2.0.3 | 10 | 3.3 |
| "How to Deploy Oracle RAC on Oracle Solaris 11 Zone Clusters" | 11.2.0.3 | 11 | 4.0 |

Introduction

Oracle Solaris Cluster provides the capability to create high-availability zone clusters. A zone cluster consists of several Oracle Solaris Zones, each of which resides on its own separate server; the zones that make up the cluster are linked together into a single virtual cluster. Because zone clusters are isolated from each other, they provide increased security. Because the zones are clustered, they provide high availability for the applications they host. Multiple zone clusters can exist on a single physical cluster, providing a means to consolidate multicluster applications on a single cluster.

You can install Oracle RAC inside a zone cluster to run multiple instances of an Oracle database at the same time. This allows you to have separate database versions or separate deployments of the same database (for example, one for production and one for development). Using this architecture, you can also deploy different parts of your multitier solution into different virtual zone clusters. For example, you can deploy Oracle RAC and an application server in different zones of the same cluster. This approach allows you to isolate tiers and administrative domains from each other, while taking advantage of the simplified administration provided by Oracle Solaris Cluster.

Several configurations are available for deploying Oracle RAC in a zone cluster.

Note: This article is not a performance best practices guide and it does not cover the following topics:

- Oracle Solaris OS installation

- Storage configuration

- Network configuration

- Oracle Solaris Cluster installation

Note: For information about installing Oracle Solaris Cluster 4.0, see the "How to Install and Configure a Two-Node Cluster Using Oracle Solaris Cluster 4.0 on Oracle Solaris 11" article.

Overview of the Process

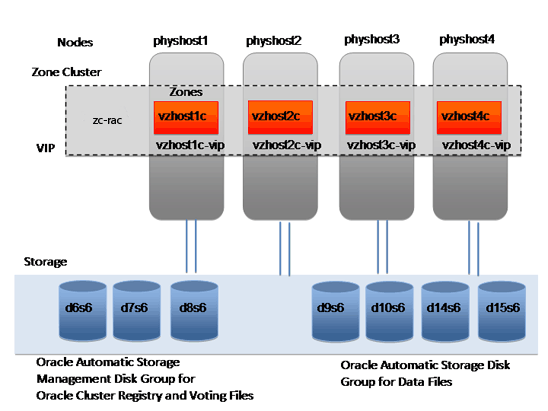

This article describes how to set up Oracle RAC in an Oracle Solaris Cluster four-node zone cluster configuration using Oracle Automatic Storage Management (see Figure 1).

Three significant steps have to be performed:

- Create a zone cluster and the specific Oracle RAC infrastructure in this zone cluster.

- Prepare the environment, and then install and configure Oracle Grid Infrastructure and the Oracle RAC 12c database.

- Create the Oracle Solaris Cluster resources, link them up, and bring them online.

Figure 1. Four-node zone cluster configuration

Prerequisites

Ensure that the following prerequisites have been completed:

- Ensure that Oracle Solaris 11.2 is installed and network name services are enabled. In this example, DNS and LDAP name services are used.

- Ensure that Oracle Solaris Cluster 4.2 is installed and configured with the ha-cluster-full package.

- Ensure that shared disks, also known as /dev/did/rdsk devices, are identified. Listing 1 is an example of how to identify a shared disk from the global zone of any cluster node:

    phyhost1# cldev status

    === Cluster DID Devices ===

    Device Instance          Node          Status
    ---------------          ----          ------
    /dev/did/rdsk/d1         phyhost1      Ok
    /dev/did/rdsk/d10        phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d14        phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d15        phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d16        phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d17        phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d18        phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d19        phyhost2      Ok
    /dev/did/rdsk/d2         phyhost1      Ok
    /dev/did/rdsk/d20        phyhost2      Ok
    /dev/did/rdsk/d21        phyhost3      Ok
    /dev/did/rdsk/d22        phyhost3      Ok
    /dev/did/rdsk/d23        phyhost4      Ok
    /dev/did/rdsk/d24        phyhost4      Ok
    /dev/did/rdsk/d6         phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d7         phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d8         phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok
    /dev/did/rdsk/d9         phyhost1      Ok
                             phyhost2      Ok
                             phyhost3      Ok
                             phyhost4      Ok

Listing 1. Identifying a shared disk

The output in Listing 1 shows that disks d6, d7, d8, d9, d10, d14, d15, d16, d17, and d18 are shared by phyhost1, phyhost2, phyhost3, and phyhost4.

The following shared disks will be used for the Oracle Automatic Storage Management disk group to store Oracle Cluster Registry and voting files:

    /dev/did/rdsk/d6s6
    /dev/did/rdsk/d7s6
    /dev/did/rdsk/d8s6

The following shared disks will be used for the Oracle Automatic Storage Management disk group to store data files:

    /dev/did/rdsk/d9s6
    /dev/did/rdsk/d10s6
    /dev/did/rdsk/d14s6
    /dev/did/rdsk/d15s6

In this example, slice 6 is 102 GB in size. For information about disk size requirements, see the Oracle Grid Infrastructure Installation Guide.

- Ensure that Oracle virtual IP (VIP) and Single Client Access Name (SCAN) IP requirements have been allocated on the public network, for example:

  - vzhost1d, IP address 10.134.35.99, is used for the SCAN IP address.
  - vzhost1c-vip, IP address 10.134.35.100, is used as the VIP address for vzhost1c.
  - vzhost2c-vip, IP address 10.134.35.101, is used as the VIP address for vzhost2c.
  - vzhost3c-vip, IP address 10.134.35.102, is used as the VIP address for vzhost3c.
  - vzhost4c-vip, IP address 10.134.35.103, is used as the VIP address for vzhost4c.

Note: Do not manually plumb the above IP addresses anywhere on the network, including in the cluster.

- Ensure that network data links have been created for the exclusive-IP zone cluster nodes. For example, type the following commands to create VNICs named vnic1 and vnic2 on physical network interfaces net0 and net4:

    # dladm create-vnic -l net0 vnic1
    # dladm create-vnic -l net4 vnic2

- VNICs or InfiniBand (IB) partitions are required for the cluster interconnect used in the exclusive-IP zone. In this configuration, IB partitions are used for the cluster interconnect. The following commands identify the IB links and create IB partitions on top of the global zone interconnect.

Type the following command to identify the IB physical links that can be used as the cluster interconnect:

    # clinterconnect status

    === Cluster Transport Paths ===

    Endpoint1               Endpoint2               Status
    ---------               ---------               ------
    phyhost1:gzpriv.ibd7    phyhost4:gzpriv.ibd7    Path online
    phyhost1:gzpriv.ibd6    phyhost4:gzpriv.ibd6    Path online
    phyhost1:gzpriv.ibd7    phyhost2:gzpriv.ibd7    Path online
    phyhost1:gzpriv.ibd6    phyhost2:gzpriv.ibd6    Path online
    phyhost1:gzpriv.ibd7    phyhost3:gzpriv.ibd7    Path online
    phyhost1:gzpriv.ibd6    phyhost3:gzpriv.ibd6    Path online
    phyhost2:gzpriv.ibd7    phyhost3:gzpriv.ibd7    Path online
    phyhost2:gzpriv.ibd7    phyhost4:gzpriv.ibd7    Path online
    phyhost2:gzpriv.ibd6    phyhost3:gzpriv.ibd6    Path online
    phyhost2:gzpriv.ibd6    phyhost4:gzpriv.ibd6    Path online
    phyhost3:gzpriv.ibd7    phyhost4:gzpriv.ibd7    Path online
    phyhost3:gzpriv.ibd6    phyhost4:gzpriv.ibd6    Path online

Type the following commands to display the IB partition link information:

    # dladm show-link
    LINK            CLASS    MTU      STATE      OVER
    net3            phys     1500     unknown    --
    net4            phys     1500     up         --
    net1            phys     1500     up         --
    net2            phys     1500     unknown    --
    net0            phys     1500     up         --
    net5            phys     1500     unknown    --
    net6            phys     65520    up         --
    net7            phys     65520    up         --
                    phys     1500     up         --
    gzpriv.ibd6     part     65520    up         net6
    gzpriv.ibd7     part     65520    up         net7
    vnic1           vnic     1500     up         net0
    vnic2           vnic     1500     up         net4
    [root@phyhost1 ~]# dladm show-part
    LINK            PKEY     OVER     STATE      FLAGS
    gzpriv.ibd6     8001     net6     up         ----
    gzpriv.ibd7     8002     net7     up         ----

The identified links are net6 and net7. net6 is the first interconnect and net7 is the second interconnect.

Type the following command to display only the physical links, port GUID, HCA GUID, and P_Key present on the port at the time the command is running:

    # dladm show-ib
    LINK    HCAGUID           PORTGUID          PORT   STATE   GWNAME   GWPORT   PKEYS
    net7    212800013EB442    212800013EB444    2      up      --       --       8001,8002,8004,FFFF
    net8    21280001CF6662    21280001CF6663    1      up      --       --       8011,FFFF
    net6    212800013EB442    212800013EB443    1      up      --       --       8001,8002,8004,FFFF
    net9    21280001CF6662    21280001CF6664    2      up      --       --       8004,8012,FFFF

Type the following commands on each global cluster node to create the IB partition links:

    # dladm create-part -l net6 -P 8001 zcRacPriv.ibd6
    # dladm create-part -l net7 -P 8002 zcRacPriv.ibd7
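Picking the shared disks out of a long cldev status listing by eye is error-prone. The following is a minimal sketch of a filter that does it mechanically. The function name shared_dids is hypothetical, and the parsing assumes the layout shown in Listing 1: a device path starts each group, and each additional node appears on a two-field continuation line.

```shell
# shared_dids N: read `cldev status` text on stdin and print every DID device
# that is reported by exactly N nodes.
# Assumption: output is laid out as in Listing 1 (device line, then one
# "node status" line per additional node).
# Needs a POSIX awk; on Solaris that may mean nawk or /usr/xpg4/bin/awk.
shared_dids() {
    awk -v want="$1" '
        # A line that starts with a DID path opens a new device group.
        $1 ~ /^\/dev\/did\// { dev = $1; order[++n] = dev; count[dev] = 1; next }
        # A two-field line after a device line is one more node for that device.
        dev != "" && NF == 2 { count[dev]++ }
        END {
            for (i = 1; i <= n; i++)
                if (count[order[i]] == want) print order[i]
        }
    '
}

# Example use on a global-zone node (not run here):
#   cldev status | shared_dids 4
```

On the four-node example cluster, a count of 4 would select the disks that every node can see, which is the property the Oracle Automatic Storage Management disk groups need.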

Create the Exclusive-IP Zone Cluster

- Use the sysconfig tool to create a system configuration profile:

- Type the following command:

    # sysconfig create-profile -o /var/tmp/zc2.xml -g location,identity,naming_services,users

- Press F2 to go to the Network screen. Type physhost1 as the computer name.

- Press F2 to continue to the DNS Name Service screen. Select Configure DNS.

- Press F2 to go to the DNS Server Addresses screen. Type the IP address of the DNS server(s). At least one IP address is required.

    DNS Server IP address: 192.168.1.10
    DNS Server IP address: 192.168.1.11

- Press F2 to go to the DNS Search List screen. Enter a list of domains to be searched when a DNS query is made. If no domain is entered, only the DNS domain chosen for this system is searched.

    Search domain: us.oracle.com
    Search domain: oracle.com
    Search domain:

- Press F2 to go to the Alternate Name Service screen. Select LDAP from the list.

- Press F2 to go to the screen where you can specify the domain name for the LDAP name server. Type the domain name using its exact capitalization and punctuation.

    Domain Name: us.oracle.com

- Press F2 to continue to the screen where you can specify the LDAP profile.

    Profile name: <name>
    Profile server host name or IP address: 192.168.1.12
    Search base: dc=us,dc=oracle,dc=com

- Press F2 to continue to the LDAP Proxy screen. Select Yes if a proxy is used.

- Press F2 to continue to the LDAP Profile Proxy Bind Information screen. Type the proxy bind distinguished name.

    Proxy bind distinguished name: myproDN="cn=s_admin,ou=adminusers,dc=oracle,dc=com"
    Proxy bind password: <password>

- Press F2 to continue to the Time Zone: Regions screen. Select the region that contains your time zone.

- Press F2 to continue to the Time Zone: Locations screen. Select the location that contains your time zone.

- Press F2 to continue to the Time Zone screen. Select your time zone.

- Press F2 to continue to the Users screen. Define a root password for the system and a user account for yourself.

- Press F2 to continue to the System Configuration Summary screen. Review the list of all the settings.

    Time Zone: US/Pacific
    Language: *The following can be changed when logging in.
      Default language: C/POSIX
    Terminal type: vt100
    Users:
      No user account
    Network:
      Computer name: physhost1
    Name service: DNS
      DNS servers: 192.168.1.10 192.168.1.11
      DNS Domain search list: us.oracle.com oracle.com
      Domain: us.oracle.com
      Note: DNS will be configured to resolve host and IP node names.
      This setting can be modified upon rebooting. For example:

      # svccfg -s svc:/system/name-service/switch
      svc:/system/name-service/switch> setprop config/host="cluster files dns "
      svc:/system/name-service/switch> quit
      # svcadm refresh svc:/system/name-service/switch

      See nsswitch.conf(4), svccfg(1M) and nscfg(1M).

- Press F2 to apply the settings.

- Create a file called zone.cfg with the following content:

    create
    set zonepath=/export/zones/zc-rac
    set brand=solaris
    set autoboot=true
    set limitpriv=default,proc_priocntl,proc_clock_highres
    set enable_priv_net=true
    set ip-type=exclusive
    add dedicated-cpu
    set ncpus=16
    end
    add capped-memory
    set physical=12g
    set swap=12g
    set locked=12g
    end
    add node
    set physical-host=phyhost1
    set hostname=vzhost1c
    add net
    set physical=vnic4
    end
    add net
    set physical=vnic5
    end
    add privnet
    set physical=zcRacPriv.ibd6
    end
    add privnet
    set physical=zcRacPriv.ibd7
    end
    end
    add node
    set physical-host=phyhost2
    set hostname=vzhost2c
    add net
    set physical=vnic4
    end
    add net
    set physical=vnic5
    end
    add privnet
    set physical=zcRacPriv.ibd6
    end
    add privnet
    set physical=zcRacPriv.ibd7
    end
    end
    add node
    set physical-host=phyhost3
    set hostname=vzhost3c
    add net
    set physical=vnic4
    end
    add net
    set physical=vnic5
    end
    add privnet
    set physical=zcRacPriv.ibd6
    end
    add privnet
    set physical=zcRacPriv.ibd7
    end
    end
    add node
    set physical-host=phyhost4
    set hostname=vzhost4c
    add net
    set physical=vnic4
    end
    add net
    set physical=vnic5
    end
    add privnet
    set physical=zcRacPriv.ibd6
    end
    add privnet
    set physical=zcRacPriv.ibd7
    end
    end
    add net
    set address=vzhost1d
    end
    add net
    set address=vzhost1c-vip
    end
    add net
    set address=vzhost2c-vip
    end
    add net
    set address=vzhost3c-vip
    end
    add net
    set address=vzhost4c-vip
    end
    add device
    set match="/dev/did/rdsk/d6s6"
    end
    add device
    set match="/dev/did/rdsk/d7s6"
    end
    add device
    set match="/dev/did/rdsk/d8s6"
    end
    add device
    set match="/dev/did/rdsk/d9s6"
    end
    add device
    set match="/dev/did/rdsk/d10s6"
    end
    add device
    set match="/dev/did/rdsk/d14s6"
    end
    add device
    set match="/dev/did/rdsk/d15s6"
    end
    add device
    set match="/dev/did/rdsk/d16s6"
    end
    add device
    set match="/dev/did/rdsk/d17s6"
    end
    add device
    set match="/dev/did/rdsk/d18s6"
    end

- If the SCAN host name, vzhost1d, resolves to multiple IP addresses, configure a separate global net resource for each IP address. For example, if vzhost1d resolves to three IP addresses (10.134.35.97, 10.134.35.98, and 10.134.35.99), add the following global net resources to the zone.cfg file:

    add net
    set address=10.134.35.97
    end
    add net
    set address=10.134.35.98
    end
    add net
    set address=10.134.35.99
    end

- As root, run the following commands from one node to create the cluster:

    # clzonecluster configure -f /var/tmp/zone.cfg zc-rac
    # clzonecluster install -c /var/tmp/zc2.xml/sc_profile.xml zc-rac
    # clzonecluster status

    === Zone Clusters ===

    --- Zone Cluster Status ---

    Name      Node Name    Zone HostName    Status     Zone Status
    ----      ---------    -------------    ------     -----------
    zc-rac    phyhost1     vzhost1c         Offline    Installed
              phyhost2     vzhost2c         Offline    Installed
              phyhost3     vzhost3c         Offline    Installed
              phyhost4     vzhost4c         Offline    Installed

    # clzc boot zc-rac
    # clzc status

    === Zone Clusters ===

    --- Zone Cluster Status ---

    Name      Node Name    Zone HostName    Status     Zone Status
    ----      ---------    -------------    ------     -----------
    zc-rac    phyhost1     vzhost1c         Online     Running
              phyhost2     vzhost2c         Online     Running
              phyhost3     vzhost3c         Online     Running
              phyhost4     vzhost4c         Online     Running

- Configure the zone IP address:

    root@phyhost1:/var/tmp# zlogin zc-rac
    root@vzhost1c:~# dladm show-link
    LINK                CLASS    MTU      STATE      OVER
    clprivnet2          phys     65520    up         --
    zcRacPriv.ibd6      part     65520    up         ?
    zcRacPriv.ibd7      part     65520    up         ?
    vnic4               vnic     1500     up         ?
    vnic5               vnic     1500     up         ?
    zcRacStor.ibd6      part     65520    unknown    ?
    zcRacStor.ibd7      part     65520    unknown    ?
    root@vzhost1c:~# ipadm show-addr
    ADDROBJ             TYPE      STATE    ADDR
    lo0/v4              static    ok       127.0.0.1/8
    zcRacPriv.ibd6/?    static    ok       172.16.4.129/26
    zcRacPriv.ibd7/?    static    ok       172.16.4.193/26
    clprivnet2/?        static    ok       172.16.4.65/26
    lo0/v6              static    ok       ::1/128
    root@vzhost1c:~# ipadm create-ip vnic4
    root@vzhost1c:~# ipadm create-ip vnic5
    root@vzhost1c:~# ipadm create-ipmp rac_ipmp1
    root@vzhost1c:~# ipadm add-ipmp -i vnic4 -i vnic5 rac_ipmp1
    root@vzhost1c:~# ipadm create-addr -T static -a 10.134.54.40/24 rac_ipmp1/v4
    root@vzhost1c:~# ipadm set-ifprop -p standby=on -m ip vnic5
    root@vzhost1c:~# ipmpstat -g
    GROUP       GROUPNAME    STATE    FDT    INTERFACES
    sc_ipmp1    rac_ipmp1    ok       --     vnic4 (vnic

- To configure kernel parameter values, add resource control values to the /etc/project file. For more information, see Oracle Database Installation Guide 12c Release 1 for Oracle Solaris, section D.1. When you are done, check the values in the /etc/project file. The output should be similar to the following:

    # cat /etc/project
    system:0::::
    user.root:1::::
    noproject:2::::
    default:3::::process.max-file-descriptor=(basic,1024,deny),(priv,65536,deny);process.max-sem-nsems=(privileged,1024,deny);process.max-sem-ops=(privileged,512,deny);project.max-msg-ids=(privileged,4096,deny);project.max-sem-ids=(privileged,65535,deny);project.max-shm-ids=(privileged,4096,deny);project.max-shm-memory=(privileged,466003951616,deny);project.max-tasks=(priv,131072,deny)
    group.staff:10::::

- Install these required Oracle Solaris 11 packages if they are not already installed:

    pkg://solaris/developer/assembler
    pkg://solaris/developer/build/make
    pkg://solaris/system/xopen/xcu4
    pkg://solaris/x11/diagnostic/x11-info-clients
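The four add node blocks in zone.cfg differ only in the physical host name and the zone host name, so they lend themselves to being generated rather than typed. The following is a minimal sketch under that assumption. The function names node_stanza and all_node_stanzas are hypothetical, the link names vnic4 and vnic5 are taken from this article's example, and zcRacPriv.ibd6 and zcRacPriv.ibd7 are the IB partition links created in the prerequisites.

```shell
# node_stanza PHYS_HOST ZONE_HOST: print one "add node" block for zone.cfg.
# The data-link names are the example values from this article; adjust them
# to match the links that exist on your global-zone nodes.
node_stanza() {
    cat <<EOF
add node
set physical-host=$1
set hostname=$2
add net
set physical=vnic4
end
add net
set physical=vnic5
end
add privnet
set physical=zcRacPriv.ibd6
end
add privnet
set physical=zcRacPriv.ibd7
end
end
EOF
}

# Print the blocks for the four example nodes (phyhostN -> vzhostNc).
all_node_stanzas() {
    for i in 1 2 3 4; do
        node_stanza "phyhost$i" "vzhost${i}c"
    done
}
```

The output could be pasted into zone.cfg between the capped-memory block and the global add net entries; it is plain text and makes no changes to the cluster by itself.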

Create the Oracle RAC Framework Resources for the Zone Cluster

As root, perform the following steps from one node to create the Oracle RAC framework:

- Execute clsetup from any global zone cluster node:

    # /usr/cluster/bin/clsetup

    *** Main Menu ***

    Please select from one of the following options:

        1) Quorum
        2) Resource groups
        3) Data Services
        4) Cluster interconnect
        5) Device groups and volumes
        6) Private hostnames
        7) New nodes
        8) Zone Cluster
        9) Other cluster tasks

        ?) Help with menu options
        q) Quit

    Option: 3

    *** Data Services Menu ***

    Please select from one of the following options:

      * 1) Apache Web Server
      * 2) Oracle Database
      * 3) NFS
      * 4) Oracle Real Application Clusters
      * 5) PeopleSoft Enterprise Application Server
      * 6) Highly Available Storage
      * 7) Logical Hostname
      * 8) Shared Address
      * 9) Per Node Logical Hostname
      *10) WebLogic Server

      * ?) Help
      * q) Return to the Main Menu

    Option: 4

    *** Oracle Solaris Cluster Support for Oracle RAC ***

    Oracle Solaris Cluster provides a support layer for running Oracle
    Real Application Clusters (RAC) database instances. This option allows
    you to create the RAC framework resource group, storage resources,
    database resources and administer them, for managing the Oracle
    Solaris Cluster support for RAC.

    After the RAC framework resource group has been created, you can use
    the Oracle Solaris Cluster system administration tools to administer a
    RAC framework resource group that is configured on a global cluster.
    To administer a RAC framework resource group that is configured on a
    zone cluster, instead use the appropriate Oracle Solaris Cluster
    command.

    Is it okay to continue (yes/no) [yes]?

    Please select from one of the following options:

        1) Oracle RAC Create Configuration
        2) Oracle RAC Ongoing Administration

        q) Return to the Data Services Menu

    Option: 1

    >>> Select Oracle Real Application Clusters Location <<<

    Oracle Real Application Clusters Location:

        1) Global Cluster
        2) Zone Cluster

    Option [2]: 2

    >>> Select Zone Cluster <<<

    From the list of zone clusters, select the zone cluster where you
    would like to configure Oracle Real Application Clusters.

        1) zc-rac

        ?) Help
        d) Done

    Selected: [zc-rac]

    >>> Select Oracle Real Application Clusters Components to Configure <<<

    Select the component of Oracle Real Application Clusters that you are
    configuring:

        1) RAC Framework Resource Group
        2) Storage Resources for Oracle Files
        3) Oracle Clusterware Framework Resource
        4) Oracle Automatic Storage Management (ASM)
        5) Resources for Oracle Real Application Clusters Database Instances

    Option [1]: 1

    This wizard guides you through the creation and configuration of the
    Real Application Clusters (RAC) framework resource group.

    Before you use this wizard, ensure that the following prerequisites
    are met:

    * All pre-installation tasks for Oracle Real Application Clusters are
      completed.
    * The Oracle Solaris Cluster nodes are prepared.
    * The data services packages are installed.
    * All storage management software that you intend to use is installed
      and configured on all nodes where Oracle Real Application Clusters
      is to run.

    Press RETURN to continue

    >>> Select Nodes <<<

    Specify, in order of preference, a list of names of nodes where Oracle
    Real Application Clusters is to run. If you do not explicitly specify
    a list, the list defaults to all nodes in an arbitrary order.

    The following nodes are available on the zone cluster z11skgxn:

        1) vzhost1c
        2) vzhost2c
        3) vzhost3c
        4) vzhost4c

        r) Refresh and Clear All
        a) All
        ?) Help
        d) Done

    Selected: [vzhost1c, vzhost2c, vzhost3c, vzhost4c]
    Options: d

    >>> Review Oracle Solaris Cluster Objects <<<

    The following Oracle Solaris Cluster objects will be created.

    Select the value you are changing:

        Property Name                   Current Setting
        =============                   ===============
        1) Resource Group Name          rac-framework-rg
        2) RAC Framework Resource N...  rac-framework-rs

        d) Done
        ?) Help

    Option: d

    >>> Review Configuration of RAC Framework Resource Group <<<

    The following Oracle Solaris Cluster configuration will be created.

    To view the details for an option, select the option.

        Name                            Value
        ====                            =====
        1) Resource Group Name          rac-framework-rg
        2) RAC Framework Resource N...  rac-framework-rs

        c) Create Configuration
        ?) Help

    Option: c

- From any global zone cluster node, verify the status of the Oracle RAC framework resources:

    # clrs status -Z zc-rac

    === Cluster Resources ===

    Resource Name        Node Name    State     Status Message
    -------------        ---------    -----     --------------
    rac-framework-rs     vzhost1c     Online    Online
                         vzhost2c     Online    Online
                         vzhost3c     Online    Online
                         vzhost4c     Online    Online
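When the status check above is scripted, it helps to reduce the table to only the rows that need attention. The following is a minimal sketch of such a filter. The function name not_online is hypothetical, and the parsing assumes the usual clrs status layout, in which a resource name starts in the first column and the rows for further nodes are indented.

```shell
# not_online: read `clrs status` text on stdin and print
# "resource node state" for each node whose State column is not Online.
# Assumption: resource lines start in column 1; continuation lines for
# additional nodes begin with whitespace.
# Needs a POSIX awk; on Solaris that may mean nawk or /usr/xpg4/bin/awk.
not_online() {
    awk '
        # Skip banners, column headings, rulers, and blank lines.
        /^(===|---)/ || /^Resource Name/ || NF == 0 { next }
        # First row of a resource: name, node, state.
        /^[^ \t]/ { rs = $1; node = $2; state = $3 }
        # Continuation row: node, state (resource name carried over).
        /^[ \t]/  { node = $1; state = $2 }
        state != "Online" { print rs, node, state }
    '
}

# Example use on a global-zone node (not run here):
#   clrs status -Z zc-rac | not_online
```

Empty output would mean that every listed node reports the resource as Online.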

Set Up the Root Environment in Zone Cluster zc-rac

As root on each global zone cluster node (phyhost1, phyhost2, phyhost3, and phyhost4), perform the following steps:

- Log in to the non-global zone node:

    # /usr/sbin/zlogin zc-rac
    [Connected to zone 'zc-rac' pts/2]
    Last login: Thu Aug 25 17:30:14 on pts/2
    Oracle Corporation SunOS 5.11 11.2 June 2014

- Install make on each zone cluster node:

- Check whether the make, assembler, and x11-info-clients packages exist on the node.

    # pkg list *make*

- If the packages do not exist, execute the following commands to install the packages:

    # pkg install developer/build/make
    # pkg install developer/assembler
    # pkg install x11-info-clients
    # pkg install x11/diagnostic/x11-info-clients

- Enable Oracle Clusterware's Cluster Time Synchronization Services to run in observation mode on each zone node:

    # touch /etc/inet/ntp.conf

- Change PermitRootLogin no to PermitRootLogin yes in /etc/ssh/sshd_config on each zone node, and then restart the SSH service:

    root@vzhost1c:~# svcadm disable ssh
    root@vzhost1c:~# svcadm enable ssh
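The PermitRootLogin change has to be repeated on every zone node, so a small helper can keep the edit consistent. The following is a minimal sketch. The function name enable_root_login is hypothetical, and the code assumes the setting appears on a line of its own as PermitRootLogin no. It writes through a temporary file because in-place editing is not available in every sed.

```shell
# enable_root_login FILE: rewrite "PermitRootLogin no" to "PermitRootLogin yes".
# Assumption: the directive sits on its own uncommented line.
# The original file is overwritten with cat so its owner and mode are kept.
enable_root_login() {
    file=$1
    tmp=$(mktemp) || return 1
    sed 's/^PermitRootLogin[[:space:]][[:space:]]*no[[:space:]]*$/PermitRootLogin yes/' \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return $rc
}

# Example use inside a zone node (not run here):
#   enable_root_login /etc/ssh/sshd_config
```

The SSH service still has to be disabled and enabled afterward, as in the step above, before the new setting takes effect.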

Create a User and Group for the Oracle Software

- As root, execute the following commands on each node:

    # groupadd -g 300 oinstall
    # groupadd -g 301 dba
    # useradd -g 300 -G 301 -u 302 -d /u01/ora_home -s /usr/bin/bash ouser
    # mkdir -p /u01/ora_home
    # chown ouser:oinstall /u01/ora_home
    # mkdir /u01/oracle
    # chown ouser:oinstall /u01/oracle
    # mkdir /u01/grid
    # chown ouser:oinstall /u01/grid
    # mkdir /u01/oraInventory
    # chown ouser:oinstall /u01/oraInventory

- Include the following paths in the .bash_profile file of the software owner, ouser:

    export ORACLE_BASE=/u01/oracle
    export ORACLE_HOME=/u01/oracle/product/12.1
    export AWT_TOOLKIT=XToolkit

- Create a password for the software owner, ouser:

    # passwd ouser
    New Password:
    Re-enter new Password:
    passwd: password successfully changed for ouser

- As ouser, set up SSH on each node:

    $ ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/u01/ora_home/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /u01/ora_home/.ssh/id_rsa.
    Your public key has been saved in /u01/ora_home/.ssh/id_rsa.pub.
    The key fingerprint is:
    e6:63:c9:71:fe:d1:8f:71:77:70:97:25:2a:ee:a9:33 local1@vzhost1c
    $
    $ pwd
    /u01/ora_home/.ssh

- On the first node, vzhost1c, type the following:

    $ cd /u01/ora_home/.ssh
    $ cat id_rsa.pub >> authorized_keys
    $ chmod 600 authorized_keys
    $ scp authorized_keys vzhost2c:/u01/ora_home/.ssh

- On the second node, vzhost2c, type the following:

    $ cd /u01/ora_home/.ssh
    $ cat id_rsa.pub >> authorized_keys
    $ scp authorized_keys vzhost3c:/u01/ora_home/.ssh

- On the third node, vzhost3c, type the following:

    $ cd /u01/ora_home/.ssh
    $ cat id_rsa.pub >> authorized_keys
    $ scp authorized_keys vzhost4c:/u01/ora_home/.ssh

- On the fourth node, vzhost4c, type the following:

    $ cd /u01/ora_home/.ssh
    $ cat id_rsa.pub >> authorized_keys
    $ scp authorized_keys vzhost1c:/u01/ora_home/.ssh

- On the first node, vzhost1c, type the following:

    $ cd /u01/ora_home/.ssh
    $ scp authorized_keys vzhost2c:/u01/ora_home/.ssh
    $ scp authorized_keys vzhost3c:/u01/ora_home/.ssh

- On each node, test the SSH setup:

    $ ssh vzhost1c date
    $ ssh vzhost2c date
    $ ssh vzhost3c date
    $ ssh vzhost4c date

- As root, set up the Oracle Automatic Storage Management candidate disk on each local zone cluster node:

    # for i in 6 7 8 9 10 14 15
    > do
    > chown ouser:oinstall /dev/did/rdsk/d${i}s6
    > chmod 660 /dev/did/rdsk/d${i}s6
    > done

- As the software owner, do the following from one node in the local zone cluster:

    $ for i in 6 7 8 9 10 14 15
    > do
    > dd if=/dev/zero of=/dev/did/rdsk/d${i}s6 bs=1024k count=200
    > done
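The SSH key exchange earlier in this section passes authorized_keys around the nodes in a ring, so at the end each node's copy should hold one RSA public key per node (four in this example). The following is a minimal sketch of a check for that. The function name keys_complete is hypothetical, and it assumes that every entry in the file is an ssh-rsa key, as produced by ssh-keygen -t rsa above.

```shell
# keys_complete FILE N: succeed only if FILE holds exactly N ssh-rsa entries.
# Assumption: each public key occupies one line that begins with "ssh-rsa ".
keys_complete() {
    [ "$(grep -c '^ssh-rsa ' "$1")" -eq "$2" ]
}

# Example use as ouser on a zone node (not run here):
#   keys_complete /u01/ora_home/.ssh/authorized_keys 4 || echo "keys missing"
```

A count that is too low usually means that one of the scp steps was skipped or that a node copied the file before appending its own key.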

Install Oracle Grid Infrastructure 12c in the Oracle Solaris Zones Cluster Nodes

- As the software owner, execute the following commands on one node:

    $ bash
    $ export DISPLAY=<hostname>:<n>
    $ cd <PATH to 12.1 based software image>/grid/
    $ ./runInstaller
    Starting Oracle Universal Installer...

    Checking Temp space: must be greater than 180 MB. Actual 16796 MB Passed
    Checking swap space: must be greater than 150 MB. Actual 19527 MB Passed
    Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed
    Preparing to launch Oracle Universal Installer from /tmp/OraInstall2014-08-04_02-34-26PM. Please wait ...

- Type Y to continue.

- Provide the following input to Oracle Universal Installer:

- On the Select Installation Option screen, select Install and Configure Oracle Grid Infrastructure for a Cluster.

- On the Select Cluster Type screen, select Configure a Standard Cluster.

- On the Select Installation Type screen, select Advanced Installation.

- On the Select Product Languages screen, select English.

- On the Grid Plug and Play Information screen, select the following:

- For Cluster Name, select vzhost-cluster.

- For SCAN Name, select vzhost1d.

- For SCAN Port, select 1521.

GNS is not used in this example.

- On the Cluster Node Information screen, specify the following:

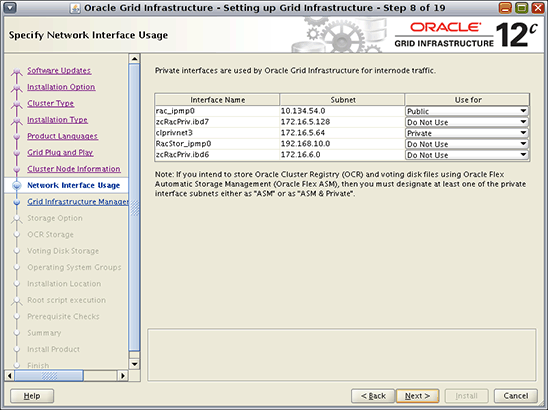

Public Hostname Virtual Hostname vzhost1c vzhost1c-vip vzhost2c vzhost2c-vip vzhost3c vzhost3c-vip vzhost4c vzhost4c-vip - On the Specify Network Interface Usage screen, select one public network and one private network, as shown in Figure 2. Select Do Not Use for the remaining networks.

Figure 2. Specifying network interface usage

- On the Grid Infrastructure Management Repository Option screen, select Yes.

- On the Storage Option Information screen, select Use Standard ASM for Storage.

- On the Create ASM Disk Group screen, do the following, as shown in Figure 3:

- Click the Change Discovery Path button.

- In the Change Discovery Path dialog box, specify the discovery path as /dev/did/rdsk/d*s6.

- In the Disk group name text box, type crsdg.

- For the Candidate Disks, select /dev/did/rdsk/d6s6, /dev/did/rdsk/d7s6, and /dev/did/rdsk/d8s6.

Figure 3. Create Oracle Automatic Storage Management disk group

- On the Specify ASM Password screen, enter the passwords for the SYS and ASMSNMP accounts.

- On the Privileged Operating System Groups screen, specify a group for the Oracle ASM Administrator (OSASM), the Oracle ASM DBA (OSDBA for ASM), and the Oracle ASM Operator (OSOPER for ASM). For this example, the default group is used.

- On the Software Installation Location screen, specify the following:

- For Oracle Base, type /u01/oracle.

- For Software Location, type /u01/grid/product/12.1.

- On the Create Inventory screen, select /u01/oraInventory for Inventory Directory.

- On the root script execution configuration screen, enter the root password if you wish to run the configuration script root.sh automatically.

- On the Perform Prerequisite Checks screen, select Ignore All.

- On the Summary screen, click Install to start the software installation.

- On the Install Product screen, confirm the execution of configuration scripts in the dialog box, as shown in Figure 4.

Figure 4. Message for confirming the execution of configuration scripts

- If you see the error message INS-20802 Oracle Cluster Verification Utility failed, click OK and Skip. Then click Next to continue.

- If you see the warning [INS-32091] Software installation was successful. But some configuration assistants failed, were canceled or skipped, click Yes to continue.

The installation of Oracle Grid Infrastructure 12.1 is now complete.

- From any node, check the status of Oracle Grid Infrastructure resources:

    [root@vzhost1c ~]# /u01/grid/product/12.1/bin/crsctl status res -t
    --------------------------------------------------------------------------------
    Name           Target  State        Server                   State details
    --------------------------------------------------------------------------------
    Local Resources
    --------------------------------------------------------------------------------
    ora.CRSDG.dg
                   ONLINE  ONLINE       vzhost1c                 STABLE
                   ONLINE  ONLINE       vzhost2c                 STABLE
                   ONLINE  ONLINE       vzhost3c                 STABLE
                   ONLINE  ONLINE       vzhost4c                 STABLE
    ora.LISTENER.lsnr
                   ONLINE  ONLINE       vzhost1c                 STABLE
                   ONLINE  ONLINE       vzhost2c                 STABLE
                   ONLINE  ONLINE       vzhost3c                 STABLE
                   ONLINE  ONLINE       vzhost4c                 STABLE
    ora.asm
                   ONLINE  ONLINE       vzhost1c                 STABLE
                   ONLINE  ONLINE       vzhost2c                 Started,STABLE
                   ONLINE  ONLINE       vzhost3c                 Started,STABLE
                   ONLINE  ONLINE       vzhost4c                 Started,STABLE
    ora.net1.network
                   ONLINE  ONLINE       vzhost1c                 STABLE
                   ONLINE  ONLINE       vzhost2c                 STABLE
                   ONLINE  ONLINE       vzhost3c                 STABLE
                   ONLINE  ONLINE       vzhost4c                 STABLE
    ora.ons
                   ONLINE  ONLINE       vzhost1c                 STABLE
                   ONLINE  ONLINE       vzhost2c                 STABLE
                   ONLINE  ONLINE       vzhost3c                 STABLE
                   ONLINE  ONLINE       vzhost4c                 STABLE
    --------------------------------------------------------------------------------
    Cluster Resources
    --------------------------------------------------------------------------------
    ora.LISTENER_SCAN1.lsnr
          1        ONLINE  ONLINE       vzhost3c                 STABLE
    ora.LISTENER_SCAN2.lsnr
          1        ONLINE  ONLINE       vzhost2c                 STABLE
    ora.LISTENER_SCAN3.lsnr
          1        ONLINE  ONLINE       vzhost1c                 STABLE
    ora.MGMTLSNR
          1        ONLINE  ONLINE       vzhost1c                 172.16.4.65,STABLE
    ora.cvu
          1        ONLINE  ONLINE       vzhost1c                 STABLE
    ora.mgmtdb
          1        ONLINE  ONLINE       vzhost1c                 Open,STABLE
    ora.oc4j
          1        ONLINE  ONLINE       vzhost1c                 STABLE
    ora.scan1.vip
          1        ONLINE  ONLINE       vzhost3c                 STABLE
    ora.scan2.vip
          1        ONLINE  ONLINE       vzhost2c                 STABLE
    ora.scan3.vip
          1        ONLINE  ONLINE       vzhost1c                 STABLE
    ora.vzhost1c.vip
          1        ONLINE  ONLINE       vzhost1c                 STABLE
    ora.vzhost2c.vip
          1        ONLINE  ONLINE       vzhost2c                 STABLE
    ora.vzhost3c.vip
          1        ONLINE  ONLINE       vzhost3c                 STABLE
    ora.vzhost4c.vip
          1        ONLINE  ONLINE       vzhost4c                 STABLE
    --------------------------------------------------------------------------------

Install Oracle Database 12.1 and Create the Database

- Execute the following commands to launch the ASM Configuration Assistant, where you can create a disk group called swbdg for the database creation:

    $ export DISPLAY=<hostname>:<n>
    $ /u01/grid/product/12.1/bin/asmca

- In the ASM Configuration Assistant, do the following:

- In the Disk Groups tab, shown in Figure 5, click Create.

Figure 5. Disk Groups tab

- In the Create Disk Group screen, shown in Figure 6, do the following:

- For Disk Group Name, specify swbdg.

- Select /dev/did/rdsk/d9s6, /dev/did/rdsk/d10s6, /dev/did/rdsk/d14s6, and /dev/did/rdsk/d15s6.

- Type fgr1 as the Failure Group for disks /dev/did/rdsk/d9s6 and /dev/did/rdsk/d10s6.

- Type fgr2 as the Failure Group for disks /dev/did/rdsk/d14s6 and /dev/did/rdsk/d15s6.

- Click OK to create the disk group.

Figure 6. Creating the disk group

- For Disk Group Name, specify

- After the

swbdgdisk group creation is completed, click Exit to close the ASM Configuration Assistant.

- In the Disk Groups tab, shown in Figure 5, click Create.

- From one of the nodes, run the following commands to open the Oracle Universal Installer to install Oracle Database:

$ export DISPLAY=<hostname>:<n>
$ cd <PATH to 12.1 based software image>/database
$ ./runInstaller
starting Oracle Universal Installer...
Checking Temp space: must be greater than 180 MB. Actual 20766 MB Passed
Checking swap space: must be greater than 150 MB. Actual 23056 MB Passed
Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed
Preparing to launch Oracle Universal Installer from /tmp/OraInstall2014-08-05_02-13-31PM. Please wait ...

- Provide the following inputs to the Oracle Universal Installer:

- Provide the requested information on the Configure Security Updates screen and the Download Software Updates screen.

- On the Select Installation Option screen, select Create and configure a database.

- On the System Class screen, select Server Class.

- On the Grid Installation Options screen, select Oracle Real Application Clusters database installation.

- On the Select Cluster Database Type screen, perform the following actions:

- Select Policy managed.

- For the server pool name, specify swbsvr.

- Enter 4 for Cardinality.

- Click Next.

- On the Select Install Type screen, select Advanced Install.

- On the Select Product Languages screen, use the default value.

- On the Select Database Edition screen, select Enterprise Edition.

- On the Specify Installation Location screen, specify the following:

- For Oracle Base, select /u01/oracle.

- For Software Location, select /u01/oracle/product/12.1.

- On the Select Configuration Type screen, select General Purpose/Transaction Processing.

- On the Specify Database Identifiers screen, deselect Create as Container database. Type swb as the Global Database Name.

- On the Specify Configuration Options screen, shown in Figure 7, use the default settings.

Figure 7. Specify Configuration Options screen

- On the Specify Database Storage Options screen, select Oracle Automatic Storage Management.

- On the Specify Management Options screen, use the default options.

- On the Specify Recovery Options screen, select the Enable recovery option. Make sure that Oracle Automatic Storage Management is selected.

- On the Select ASM Disk Group screen, select SWBDG.

- On the Specify Schema Passwords screen, specify a password for the SYS, SYSTEM, SYSMAN, and DBSNMP accounts.

- On the Privileged Operating System Groups screen, specify the following or select the default options:

- For Database Administrator (OSDBA) Group, select dba.

- For Database Operator (OSOPER) Group (Optional), select oinstall.

- For Database Backup and Recovery (OSBACKUPDBA) group, select dba.

- For Data Guard administrative (OSDGDBA) group, select dba.

- For Encryption Keys Management administrative (OSKMDBA) group, select dba.

- On the Perform Prerequisite Checks screen, shown in Figure 8, select Ignore All, and click Yes to continue.

Figure 8. Perform Prerequisite Checks screen

- On the Summary screen, click Install.

- On the subscreen that asks you to run configuration scripts, perform the following steps:

- Open a terminal.

- Log in as root.

- Run the root.sh script on each cluster node.

- Return to the window and click OK to continue.

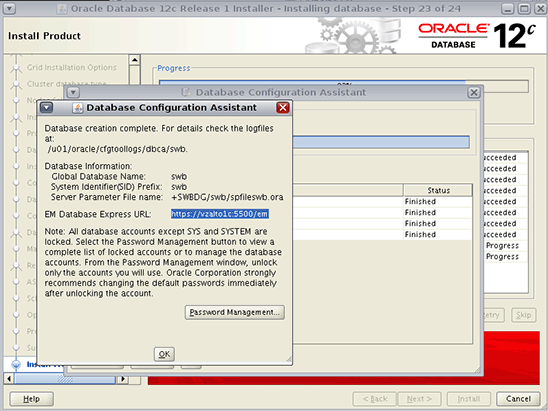

- In the Database Configuration Assistant dialog box, shown in Figure 9, click OK to continue.

Figure 9. Database Configuration Assistant dialog box

- On the Finish screen, click Close to complete the installation.
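The step that runs root.sh on each cluster node can be scripted rather than repeated by hand. The sketch below is an illustration under stated assumptions, not the author's method: the function run_root_sh and the DRY_RUN switch are hypothetical, the node names and the database home are the ones used in this article, and it assumes root can log in to each node over ssh, which many Oracle Solaris installations do not permit. Because root.sh may prompt for input, run it from an interactive terminal.

```shell
# Hypothetical helper: run the database home's root.sh on each node in turn.
# DB_HOME and NODES are taken from this article; adjust them for your cluster.
DB_HOME=/u01/oracle/product/12.1
NODES="vzhost1c vzhost2c vzhost3c vzhost4c"

run_root_sh() {
    for node in $NODES; do
        if [ -n "${DRY_RUN:-}" ]; then
            # Dry run: only show what would be executed.
            echo "ssh root@$node $DB_HOME/root.sh"
        else
            # Assumption: root ssh access to the node is allowed.
            ssh "root@$node" "$DB_HOME/root.sh" || return 1
        fi
    done
}
```

Running `DRY_RUN=1 run_root_sh` prints the four ssh commands without executing them, which is a cheap way to review the node list before clicking OK in the installer.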

Verify Database Status

Type the following commands to verify the database status:

[root@vzhost1c ~]# srvctl config database -db swb

Database unique name: swb

Database name: swb

Oracle home: /u01/oracle/product/12.1

Oracle user: oracle

Spfile: +SWBDG/swb/spfileswb.ora

Password file: +SWBDG/swb/orapwswb

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools: swbsvr

Database instances:

Disk Groups: SWBDG

Mount point paths:

Services:

Type: RAC

Start concurrency:

Stop concurrency:

Database is policy managed

[root@vzhost1c ~]# srvctl status database -d swb

Instance swb_1 is running on node vzhost2c

Instance swb_2 is running on node vzhost3c

Instance swb_3 is running on node vzhost4c

Instance swb_4 is running on node vzhost1c
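The srvctl status output above can also be checked against the expected instance count, which is 4 here because the server pool cardinality was set to 4. The following is a minimal sketch; the function name check_instances is hypothetical, and it assumes the "Instance ... is running on node ..." wording shown above.

```shell
# Hypothetical helper: reads "srvctl status database" output on stdin and
# compares the number of running instances with the expected count ($1).
check_instances() {
    expected=$1
    # Lines for stopped instances read "is not running" and do not match.
    running=$(grep -c ' is running on node ' || true)
    echo "$running of $expected instances running"
    [ "$running" -eq "$expected" ]
}
```

For example, `srvctl status database -d swb | check_instances 4` prints a one-line summary and returns a nonzero exit status if fewer or more than four instances are running.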

Create the Oracle Solaris Cluster Resource

Use the following procedure to create the Oracle Solaris Cluster resource. Alternatively, you can use the clsetup wizard.

- From one zone cluster node, execute the following command to register the SUNW.crs_framework resource type in the zone cluster:

# clrt register SUNW.crs_framework

- Add an instance of the SUNW.crs_framework resource type to the Oracle RAC framework resource group:

# clresource create -t SUNW.crs_framework \
    -g rac-framework-rg \
    -p resource_dependencies=rac-framework-rs \
    crs-framework-rs

- Register the scalable Oracle Automatic Storage Management instance proxy resource type:

# clresourcetype register SUNW.scalable_asm_instance_proxy

- Register the Oracle Automatic Storage Management disk group resource type:

# clresourcetype register SUNW.scalable_asm_diskgroup_proxy

- Create the scalable resource groups asm-inst-rg and asm-dg-rg:

# clresourcegroup create -S asm-inst-rg asm-dg-rg

- Set a strong positive affinity on rac-framework-rg by asm-inst-rg:

# clresourcegroup set -p rg_affinities=++rac-framework-rg asm-inst-rg

- Set a strong positive affinity on asm-inst-rg by asm-dg-rg:

# clresourcegroup set -p rg_affinities=++asm-inst-rg asm-dg-rg

- Create a SUNW.scalable_asm_instance_proxy resource and set the resource dependencies:

# clresource create -g asm-inst-rg \
    -t SUNW.scalable_asm_instance_proxy \
    -p ORACLE_HOME=/u01/grid/product/12.1 \
    -p CRS_HOME=/u01/grid/product/12.1 \
    -p "ORACLE_SID{vzhost1c}"=+ASM1 \
    -p "ORACLE_SID{vzhost2c}"=+ASM2 \
    -p "ORACLE_SID{vzhost3c}"=+ASM3 \
    -p "ORACLE_SID{vzhost4c}"=+ASM4 \
    -p resource_dependencies_offline_restart=crs-framework-rs \
    -d asm-inst-rs

- Add an Oracle Automatic Storage Management disk group resource to the asm-dg-rg resource group:

# clresource create -g asm-dg-rg -t SUNW.scalable_asm_diskgroup_proxy \
    -p asm_diskgroups=CRSDG,SWBDG \
    -p resource_dependencies_offline_restart=asm-inst-rs \
    -d asm-dg-rs

- On a cluster node, bring online the asm-inst-rg resource group in a managed state:

# clresourcegroup online -eM asm-inst-rg

- On a cluster node, bring online the asm-dg-rg resource group in a managed state:

# clresourcegroup online -eM asm-dg-rg

- Create a scalable resource group to contain the proxy resource for the Oracle RAC database server:

# clresourcegroup create -S \
    -p rg_affinities=++rac-framework-rg,++asm-dg-rg \
    rac-swbdb-rg

- Register the SUNW.scalable_rac_server_proxy resource type:

# clresourcetype register SUNW.scalable_rac_server_proxy

- Add a database resource to the resource group:

# clresource create -g rac-swbdb-rg \
    -t SUNW.scalable_rac_server_proxy \
    -p resource_dependencies=rac-framework-rs \
    -p resource_dependencies_offline_restart=crs-framework-rs,asm-dg-rs \
    -p oracle_home=/u01/oracle/product/12.1 \
    -p crs_home=/u01/grid/product/12.1 \
    -p db_name=swb \
    -d rac-swb-srvr-proxy-rs

- Bring the resource group online:

# clresourcegroup online -emM rac-swbdb-rg

- Check the status of the cluster resources:

# clrs status

=== Cluster Resources ===

Resource Name            Node Name   State    Status Message
-------------            ---------   -----    --------------
crs_framework-rs         vzhost1c    Online   Online
                         vzhost2c    Online   Online
                         vzhost3c    Online   Online
                         vzhost4c    Online   Online

rac-framework-rs         vzhost1c    Online   Online
                         vzhost2c    Online   Online
                         vzhost3c    Online   Online
                         vzhost4c    Online   Online

asm-inst-rs              vzhost1c    Online   Online - +ASM1 is UP and ENABLED
                         vzhost2c    Online   Online - +ASM2 is UP and ENABLED
                         vzhost3c    Online   Online - +ASM3 is UP and ENABLED
                         vzhost4c    Online   Online - +ASM4 is UP and ENABLED

asm-dg-rs                vzhost1c    Online   Online - Mounted: SWBDG
                         vzhost2c    Online   Online - Mounted: SWBDG
                         vzhost3c    Online   Online - Mounted: SWBDG
                         vzhost4c    Online   Online - Mounted: SWBDG

rac-swb-srvr-proxy-rs    vzhost1c    Online   Online - Oracle instance UP
                         vzhost2c    Online   Online - Oracle instance UP
                         vzhost3c    Online   Online - Oracle instance UP
                         vzhost4c    Online   Online - Oracle instance UP
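With five resources on four nodes, the status listing has twenty rows to check. A small filter can count the rows whose State column is not Online. This is a sketch only: the function name count_rs_not_online is hypothetical, and it assumes the layout of the status output in this article, where the first row of each resource starts with the resource name and the following rows are indented, and a POSIX awk (on Oracle Solaris this may mean nawk or /usr/xpg4/bin/awk).

```shell
# Hypothetical helper: reads cluster resource status output on stdin and
# prints the number of resource/node rows whose State is not "Online".
count_rs_not_online() {
    awk '
        # The dashed rule under the column headings marks the table start.
        /^-+[ \t]/ { in_table = 1; next }
        # Ignore everything before the table and any blank lines.
        !in_table || NF == 0 { next }
        # First row of a resource: name, node, state, message.
        /^[^ \t]/ { state = $3 }
        # Indented continuation row: node, state, message.
        /^[ \t]/  { state = $2 }
        state != "Online" { n++ }
        END { print n + 0 }
    '
}
```

Piping the status command into the function, as in `clresource status | count_rs_not_online`, prints 0 when every resource is online on every node.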

See Also

Here are some additional resources:

- "Managing DNS (Tasks)" in the Oracle Solaris Administration: Naming and Directory Services guide

- "Managing the Name Service Switch" in the Oracle Solaris Administration: Naming and Directory Services guide

- Oracle Solaris Cluster 4.2 documentation library

- All Oracle Solaris Cluster technical resources

- Oracle Solaris Cluster 4.2 Release Notes

- Oracle Solaris Cluster patches and updates

- Oracle Solaris Cluster downloads

- Oracle Solaris Cluster training

About the Author

Vinh Tran is a quality engineer in the Oracle Solaris Cluster Group. His responsibilities include, but are not limited to, certification and qualification of Oracle RAC on Oracle Solaris Cluster.

Revision 1.3, 09/20/2016

Revision 1.2, 03/16/2015

Revision 1.1, 02/05/2015

Revision 1.0, 10/20/2014