How to Deploy Oracle RAC 11.2.0.3 on Zone Clusters

by Vinh Tran

Published June 2012

Using Oracle RAC 11.2.0.3, Oracle Solaris 10, and Oracle Solaris Cluster 3.3

How to create an Oracle Solaris Zone cluster, install and configure Oracle Grid Infrastructure 11.2.0.3 and Oracle Real Application Clusters 11.2.0.3 in the zone cluster, and create an Oracle Solaris Cluster resource for Oracle RAC.

- Introduction

- Overview of the Process

- Prerequisites

- Create the Zone Cluster Using the cfg File

- Create the Oracle RAC Framework Resources for the Zone Cluster

- Set Up the Root Environment in Local Zone Cluster z11gr2A

- Create a User and Group for the Oracle Software

- Install Oracle Grid Infrastructure 11.2.0.3 in the Oracle Solaris Zone Cluster Nodes

- Install Oracle Database 11.2.0.3 and Create the Database

- Create the Oracle Solaris Cluster Resource

- See Also

- About the Author

This is one of three similar articles that explain how to do the same thing but with different versions of the software. This table summarizes them:

| Article | RAC Version | Solaris Version | Cluster Version |
|---|---|---|---|
| How to Deploy Oracle RAC 11.2.0.3 on Oracle Solaris 11 Zone Clusters | 11.2.0.3 | 11 | 4.0 |
| How to Deploy Oracle RAC on an Exclusive-IP Oracle Solaris Zones Cluster | 12c | 11.2 | 4.2 |

Introduction

Oracle Solaris Cluster 3.3 provides the capability to create high-availability zone clusters. A zone cluster consists of several Oracle Solaris Zones, each of which resides on its own separate server; the zones that comprise the cluster are linked together into a single virtual cluster. Because zone clusters are isolated from each other, they provide increased security. Because the zones are clustered, they provide high availability for the applications they host.

By installing Oracle RAC inside a zone cluster, you can run multiple instances of an Oracle database at the same time. This allows you to have separate database versions or separate deployments of the same database (for example, one for production and one for development). Using this architecture, you can also deploy different parts of your multitiered solution into different virtual zone clusters. For example, you could deploy Oracle RAC and an application server in different zones of the same cluster. This approach allows you to isolate tiers and administrative domains from each other, while taking advantage of the simplified administration provided by Oracle Solaris Cluster.

For information on the different configurations available for deploying Oracle RAC in a zone cluster, see the "Running Oracle Real Application Clusters on Oracle Solaris Zone Clusters" white paper.

Note: This document is not a performance best practices guide and it does not cover the following topics:

- Oracle Solaris OS installation

- Storage configuration

- Network configuration

- Oracle Solaris Cluster installation

Overview of the Process

This article describes how to set up Oracle RAC in an Oracle Solaris Cluster 4-node zone cluster configuration using Oracle Automatic Storage Management (see Figure 1).

Three significant steps have to be performed:

- Create a zone cluster and the specific Oracle RAC infrastructure in this zone cluster.

- Prepare the environment, and then install and configure Oracle Grid Infrastructure and the Oracle Database.

- Create the Oracle Solaris Cluster resources, link them up, and bring them online.

Figure 1. Four-Node Zone Cluster Configuration

Prerequisites

Ensure that the following prerequisites have been met:

- Oracle Solaris 10 9/10 or later and Oracle Solaris Cluster 3.3 5/11 are already installed and configured.

- Oracle Solaris 10 kernel parameters are configured in the /etc/system file in the global zone. The following is an example of the recommended value:

    shmsys:shminfo_shmmax 4294967295

- Shared disks, also known as /dev/did/rdsk devices, are known. Listing 1 is an example of how to identify a shared disk from the global zone of any cluster node:

    phyhost1# cldev status

    === Cluster DID Devices ===

    Device Instance        Node        Status
    ---------------        ----        ------
    /dev/did/rdsk/d1       phyhost1    Ok
    /dev/did/rdsk/d10      phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d14      phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d15      phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d16      phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d17      phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d18      phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d19      phyhost2    Ok
    /dev/did/rdsk/d2       phyhost1    Ok
    /dev/did/rdsk/d20      phyhost2    Ok
    /dev/did/rdsk/d21      phyhost3    Ok
    /dev/did/rdsk/d22      phyhost3    Ok
    /dev/did/rdsk/d23      phyhost4    Ok
    /dev/did/rdsk/d24      phyhost4    Ok
    /dev/did/rdsk/d6       phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d7       phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d8       phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok
    /dev/did/rdsk/d9       phyhost1    Ok
                           phyhost2    Ok
                           phyhost3    Ok
                           phyhost4    Ok

  Listing 1. Identifying a Shared Disk

  The output shows that disks d6, d7, d8, d9, d10, d14, d15, d16, d17, and d18 are shared by phyhost1, phyhost2, phyhost3, and phyhost4.

- The following shared disks will be used for the Oracle Automatic Storage Management disk group to store Oracle Cluster Registry and voting files:

    /dev/did/rdsk/d6s6
    /dev/did/rdsk/d7s6
    /dev/did/rdsk/d8s6

- The following shared disks will be used for the Oracle Automatic Storage Management disk group to store data files:

    /dev/did/rdsk/d9s6
    /dev/did/rdsk/d10s6
    /dev/did/rdsk/d14s6
    /dev/did/rdsk/d15s6

  In this example, slice 6 is 102 GB. Check the Oracle Grid Infrastructure Installation Guide for disk size requirements.

- Oracle virtual IP (VIP) and Single Client Access Name (SCAN) IP requirements have been set up, for example:

  - vzhost1d, IP address 10.134.35.99, is used as the SCAN IP.
  - vzhost1c-vip, IP address 10.134.35.100, is used as VIP for vzhost1c.
  - vzhost2c-vip, IP address 10.134.35.101, is used as VIP for vzhost2c.
  - vzhost3c-vip, IP address 10.134.35.102, is used as VIP for vzhost3c.
  - vzhost4c-vip, IP address 10.134.35.103, is used as VIP for vzhost4c.

- There is an IPMP group for the public network with one active and one standby interface. Here is an example of the /etc/hostname.e1000g0 and /etc/hostname.e1000g1 settings for an IPMP group called sc_ipmp0 from the global zone:

    cat /etc/hostname.e1000g0
    phyhost1 netmask + broadcast + group sc_ipmp0 up

    cat /etc/hostname.e1000g1
    group sc_ipmp0 standby up
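The shared-disk check from Listing 1 can also be scripted. The following is a minimal sketch and not part of the original procedure: the function names `sample_cldev_status` and `shared_dids` are hypothetical, and the parsing assumes the `cldev status` column layout shown in Listing 1 (a device line carrying the first node, followed by one line per additional node). The sample input is a short excerpt of Listing 1 so that the sketch runs without a cluster.

```sh
#!/bin/sh
# Hypothetical helper: prints a short excerpt of the "cldev status"
# output from Listing 1 so the parser below can be tried anywhere.
sample_cldev_status() {
    cat <<'EOF'
=== Cluster DID Devices ===

Device Instance              Node              Status
---------------              ----              ------
/dev/did/rdsk/d1             phyhost1          Ok

/dev/did/rdsk/d6             phyhost1          Ok
                             phyhost2          Ok
                             phyhost3          Ok
                             phyhost4          Ok

/dev/did/rdsk/d19            phyhost2          Ok
EOF
}

# Hypothetical helper: reads "cldev status" output on stdin and prints
# every DID device that reports status Ok from exactly $1 nodes.
# Assumption: on Oracle Solaris 10, nawk or /usr/xpg4/bin/awk may be
# needed in place of awk.
shared_dids() {
    awk -v want="$1" '
        /^\/dev\/did\/rdsk\// {
            # A new device starts: report the previous one if it qualifies.
            if (dev != "" && n == want) print dev
            dev = $1
            n = ($3 == "Ok") ? 1 : 0
            next
        }
        # Continuation line: "<node> Ok" for the current device.
        dev != "" && NF == 2 && $2 == "Ok" { n++ }
        END { if (dev != "" && n == want) print dev }
    '
}

# Devices in the excerpt that are visible from all four nodes.
sample_cldev_status | shared_dids 4
```

On a cluster node, the same filter would be fed from the real command, for example `cldev status | shared_dids 4`.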

Create the Zone Cluster Using the cfg File

Perform the following steps to create an Oracle Solaris Zone cluster:

-

Create a file called zone.cfg with the content shown in Listing 2.

    cat /var/tmp/zone.cfg
    create
    set zonepath=/export/zones/z11gR2A
    add sysid
    set name_service="NIS{domain_name=solaris.us.oracle.com}"
    set root_password=passwd
    end
    set limitpriv="default,proc_priocntl,proc_clock_highres,sys_time"
    add dedicated-cpu
    set ncpus=16
    end
    add capped-memory
    set physical=12g
    set swap=12g
    set locked=12g
    end
    add node
    set physical-host=phyhost1
    set hostname=vzhost1c
    add net
    set address=vzhost1c
    set physical=e1000g0
    end
    end
    add node
    set physical-host=phyhost2
    set hostname=vzhost2c
    add net
    set address=vzhost2c
    set physical=e1000g0
    end
    end
    add node
    set physical-host=phyhost3
    set hostname=vzhost3c
    add net
    set address=vzhost3c
    set physical=e1000g0
    end
    end
    add node
    set physical-host=phyhost4
    set hostname=vzhost4c
    add net
    set address=vzhost4c
    set physical=e1000g0
    end
    end
    add net
    set address=vzhost1d
    end
    add net
    set address=vzhost1c-vip
    end
    add net
    set address=vzhost2c-vip
    end
    add net
    set address=vzhost3c-vip
    end
    add net
    set address=vzhost4c-vip
    end
    add device
    set match="/dev/did/rdsk/d6s6"
    end
    add device
    set match="/dev/did/rdsk/d7s6"
    end
    add device
    set match="/dev/did/rdsk/d8s6"
    end
    add device
    set match="/dev/did/rdsk/d9s6"
    end
    add device
    set match="/dev/did/rdsk/d10s6"
    end
    add device
    set match="/dev/did/rdsk/d14s6"
    end
    add device
    set match="/dev/did/rdsk/d15s6"
    end
    add device
    set match="/dev/did/rdsk/d16s6"
    end
    add device
    set match="/dev/did/rdsk/d17s6"
    end
    add device
    set match="/dev/did/rdsk/d18s6"
    end

Listing 2. Creating the cfg File

-

If the SCAN host name, vzhost1d, resolves to multiple IP addresses, configure a separate global net resource for each IP address that the SCAN host name resolves to. For example, if the SCAN resolves to three IP addresses (10.134.35.97, 10.134.35.98, and 10.134.35.99), add the following global net resources to the zone.cfg file:

    add net
    set address=10.134.35.97
    end
    add net
    set address=10.134.35.98
    end
    add net
    set address=10.134.35.99
    end

-

As root, run the commands shown in Listing 3 from one node to create the cluster:

    # clzonecluster configure -f /var/tmp/zone.cfg z11gr2A
    # clzonecluster install z11gr2A
    # clzonecluster status

    === Zone Clusters ===

    --- Zone Cluster Status ---

    Name      Node Name   Zone HostName   Status    Zone Status
    ----      ---------   -------------   ------    -----------
    z11gr2A   phyhost1    vzhost1c        Offline   Installed
              phyhost2    vzhost2c        Offline   Installed
              phyhost3    vzhost3c        Offline   Installed
              phyhost4    vzhost4c        Offline   Installed

    # clzc boot z11gr2A
    # clzc status

    === Zone Clusters ===

    --- Zone Cluster Status ---

    Name      Node Name   Zone HostName   Status    Zone Status
    ----      ---------   -------------   ------    -----------
    z11gr2A   phyhost1    vzhost1c        Online    Running
              phyhost2    vzhost2c        Online    Running
              phyhost3    vzhost3c        Online    Running
              phyhost4    vzhost4c        Online    Running

Listing 3. Creating the Oracle Solaris Zone Cluster
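After booting the zone cluster, it can take a while before every node reports Online and Running as in Listing 3. The sketch below is one way to check that from a script. It is an illustration only: the function names `sample_zc_status` and `nodes_not_running` are hypothetical, the parser assumes the column layout shown in Listing 3, and the sample input deliberately shows phyhost3 still Offline/Installed so that the filter has something to report.

```sh
#!/bin/sh
# Hypothetical sample in the layout of Listing 3; the phyhost3 row is
# altered for illustration and was not captured from a real system.
sample_zc_status() {
    cat <<'EOF'
=== Zone Clusters ===

--- Zone Cluster Status ---

Name      Node Name   Zone HostName   Status    Zone Status
----      ---------   -------------   ------    -----------
z11gr2A   phyhost1    vzhost1c        Online    Running
          phyhost2    vzhost2c        Online    Running
          phyhost3    vzhost3c        Offline   Installed
          phyhost4    vzhost4c        Online    Running
EOF
}

# Hypothetical helper: reads "clzonecluster status" output on stdin and
# prints the physical node name of every row that is not Online/Running.
nodes_not_running() {
    awk '
        /^[-=]/ { next }            # banners and the dashed ruler
        NF == 4 || NF == 5 {        # data rows, with or without the cluster name
            if ($(NF - 1) != "Online" || $NF != "Running")
                print $(NF - 3)
        }
    '
}

pending=$(sample_zc_status | nodes_not_running)
if [ -n "$pending" ]; then
    echo "still waiting for: $pending"
else
    echo "all zone cluster nodes are Online and Running"
fi
```

On a global-cluster node, the real output would be piped in instead of the sample, for example `clzonecluster status z11gr2A | nodes_not_running`, possibly inside a loop with a `sleep` between attempts.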

-

Make sure NTP is disabled but has a dummy ntp.conf file so that the Oracle Grid Infrastructure time synchronization service runs in observer mode:

    # svcs ntp
    disabled       Mar_28   svc:/network/ntp:default
    # cat > /etc/inet/ntp.conf
    slewalways yes
    disable pll
    ^D

-

As root, execute clsetup from one global zone cluster node, as shown in Listing 4.

    # /usr/cluster/bin/clsetup

    *** Main Menu ***

    Please select from one of the following options:

        1) Quorum
        2) Resource groups
        3) Data Services
        4) Cluster interconnect
        5) Device groups and volumes
        6) Private hostnames
        7) New nodes
        8) Other cluster tasks

        ?) Help with menu options
        q) Quit

    Option: 3

    *** Data Services Menu ***

    Please select from one of the following options:

      * 1) Apache Web Server
      * 2) Oracle
      * 3) NFS
      * 4) Oracle Real Application Clusters
      * 5) SAP Web Application Server
      * 6) Highly Available Storage
      * 7) Logical Hostname
      * 8) Shared Address

      * ?) Help
      * q) Return to the Main Menu

    Option: 4

    *** Oracle Solaris Cluster Support for Oracle RAC ***

    Oracle Solaris Cluster provides a support layer for running Oracle
    Real Application Clusters (RAC) database instances. This option allows
    you to create the RAC framework resource group, storage resources,
    database resources and administer them, for managing the Oracle
    Solaris Cluster support for Oracle RAC.

    After the RAC framework resource group has been created, you can use
    the Oracle Solaris Cluster system administration tools to administer a
    RAC framework resource group that is configured on a global cluster.
    To administer a RAC framework resource group that is configured on a
    zone cluster, instead use the appropriate Oracle Solaris Cluster
    command.

    Is it okay to continue (yes/no) [yes]?

    Please select from one of the following options:

        1) Oracle RAC Create Configuration
        2) Oracle RAC Ongoing Administration

        q) Return to the Data Services Menu

    Option: 1

    >>> Select Oracle Real Application Clusters Location <<<

    Oracle Real Application Clusters Location:

        1) Global Cluster
        2) Zone Cluster

    Option [2]: 2

    >>> Select Zone Cluster <<<

    From the list of zone clusters, select the zone cluster where you would
    like to configure Oracle Real Application Clusters.

        1) z11gr2A

        ?) Help
        d) Done

    Selected: [z11gr2A]

    >>> Select Oracle Real Application Clusters Components to Configure <<<

    Select the component of Oracle Real Application Clusters that you are
    configuring:

        1) RAC Framework Resource Group
        2) Storage Resources for Oracle Files
        3) Oracle Clusterware Framework Resource
        4) Oracle Automatic Storage Management (ASM)
        5) Resources for Oracle Real Application Clusters Database Instances

    Option [1]: 1

    >>> Verify Prerequisites <<<

    This wizard guides you through the creation and configuration of the
    Real Application Clusters (RAC) framework resource group.

    Before you use this wizard, ensure that the following prerequisites
    are met:

    * All pre-installation tasks for Oracle Real Application Clusters are
      completed.
    * The Oracle Solaris Cluster nodes are prepared.
    * The data services packages are installed.
    * All storage management software that you intend to use is installed
      and configured on all nodes where Oracle Real Application Clusters is
      to run.

    Press RETURN to continue

    >>> Select Nodes <<<

    Specify, in order of preference, a list of names of nodes where Oracle
    Real Application Clusters is to run. If you do not explicitly specify a
    list, the list defaults to all nodes in an arbitrary order.

    The following nodes are available on the zone cluster z11skgxn:

        1) vzhost1c
        2) vzhost2c
        3) vzhost3c
        4) vzhost4c

        r) Refresh and Clear All
        a) All
        ?) Help
        d) Done

    Selected: [vzhost1c, vzhost2c, vzhost3c, vzhost4c]
    Options: d

    >>> Select Clusterware Support <<<

    Select the vendor clusterware support that you would like to use.

        1) Native
        2) UDLM based

    Option [1]: 1

    >>> Review Oracle Solaris Cluster Objects <<<

    The following Oracle Solaris Cluster objects will be created.

    Select the value you are changing:

        Property Name                  Current Setting
        =============                  ===============
        1) Resource Group Name         rac-framework-rg
        2) RAC Framework Resource N... rac-framework-rs

        d) Done
        ?) Help

    Option: d

    >>> Review Configuration of RAC Framework Resource Group <<<

    The following Oracle Solaris Cluster configuration will be created.

    To view the details for an option, select the option.

        Name                           Value
        ====                           =====
        1) Resource Group Name         rac-framework-rg
        2) RAC Framework Resource N... rac-framework-rs

        c) Create Configuration
        ?) Help

    Option: c

Listing 4. Executing clsetup

-

From one global zone cluster node, verify the Oracle RAC framework resources:

    # clrs status -Z z11gr2A

    === Cluster Resources ===

    Resource Name       Node Name   State    Status Message
    -------------       ---------   -----    --------------
    rac-framework-rs    vzhost1c    Online   Online
                        vzhost2c    Online   Online
                        vzhost3c    Online   Online
                        vzhost4c    Online   Online

-

Log in to the local zone node and execute the following command as root:

    # /usr/sbin/zlogin z11gr2A
    [Connected to zone 'z11gr2A' pts/2]
    Last login: Thu Aug 25 17:30:14 on pts/2
    Oracle Corporation SunOS 5.10 Generic Patch January 2005

-

(Optional) Change the root shell to bash:

    # passwd -e
    Old shell: /sbin/sh
    New shell: bash
    passwd: password information changed for root

-

Include these paths in .bash_profile:

    /u01/grid/product/11.2.0.3/bin
    /usr/cluster/bin

-

As root, execute the following commands from each node:

    # groupadd -g 300 oinstall
    # groupadd -g 301 dba
    # useradd -g 300 -G 301 -u 302 -d /u01/ora_home -s /usr/bin/bash ouser
    # mkdir -p /u01/ora_home
    # chown ouser:oinstall /u01/ora_home
    # mkdir /u01/oracle
    # chown ouser:oinstall /u01/oracle
    # mkdir /u01/grid
    # chown ouser:oinstall /u01/grid
    # mkdir /u01/oraInventory
    # chown ouser:oinstall /u01/oraInventory

-

Create a password for the software owner, ouser:

    # passwd ouser
    New Password:
    Re-enter new Password:
    passwd: password successfully changed for ouser
    bash-3.00#

-

For the Oracle software owner environment, as the software owner ouser, set up SSH from each node:

    $ mkdir .ssh
    $ chmod 700 .ssh
    $ cd .ssh
    $ ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/u01/ora_home/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /u01/ora_home/.ssh/id_rsa.
    Your public key has been saved in /u01/ora_home/.ssh/id_rsa.pub.
    The key fingerprint is:
    e6:63:c9:71:fe:d1:8f:71:77:70:97:25:2a:ee:a9:33 local1@vzhost1c
    $
    $ pwd
    /u01/ora_home/.ssh

-

From the first node, vzhost1c, do the following:

    $ cat id_rsa.pub >> authorized_keys
    $ chmod 600 authorized_keys
    $ scp authorized_keys vzhost2c:/u01/ora_home/.ssh

-

From the second node, vzhost2c, do the following:

    $ cd /u01/ora_home/.ssh
    $ cat id_rsa.pub >> authorized_keys
    $ scp authorized_keys vzhost3c:/u01/ora_home/.ssh

-

From the third node, vzhost3c, do the following:

    $ cd /u01/ora_home/.ssh
    $ cat id_rsa.pub >> authorized_keys
    $ scp authorized_keys vzhost4c:/u01/ora_home/.ssh

-

From the fourth node, vzhost4c, do the following:

    $ cd /u01/ora_home/.ssh
    $ cat id_rsa.pub >> authorized_keys
    $ scp authorized_keys vzhost1c:/u01/ora_home/.ssh

-

From the first node, vzhost1c, which now holds the keys of all four nodes, do the following:

    $ cd /u01/ora_home/.ssh
    $ scp authorized_keys vzhost2c:/u01/ora_home/.ssh
    $ scp authorized_keys vzhost3c:/u01/ora_home/.ssh

-

From each node, test the SSH setup:

    $ ssh vzhost1c date
    $ ssh vzhost2c date
    $ ssh vzhost3c date
    $ ssh vzhost4c date

-

In each local zone cluster node, as root, set up the Oracle Automatic Storage Management candidate disks:

    # for i in 6 7 8 9 10 14 15
    > do
    > chown ouser:oinstall /dev/did/rdsk/d${i}s6
    > chmod 660 /dev/did/rdsk/d${i}s6
    > done

-

In the local zone cluster, as the software owner, do the following from one node:

    $ for i in 6 7 8 9 10 14 15
    > do
    > dd if=/dev/zero of=/dev/did/rdsk/d${i}s6 bs=1024k count=200
    > done

-

As the software owner, execute the following on one node:

    $ bash
    $ export DISPLAY=<hostname>:<n>
    $ cd <PATH to 11.2.0.3-based software image>/grid/
    $ ./runInstaller

-

Provide the following input to Oracle Universal Installer:

- On the Select Installation Option page, select Install and Configure Oracle Grid Infrastructure for a Cluster.

- On the Select Installation Type page, select Advanced Installation.

- On the Select Product Languages page, select the appropriate language.

- On the Grid Plug and Play Information page, specify the following, as shown in Figure 2:

  - For Cluster Name, select vzhost-cluster.
  - For SCAN Name, select vzhost1d.
  - For SCAN Port, select 1521.

  Figure 2. Oracle Grid Infrastructure Plug and Play Information

- On the Cluster Node Information page, specify the following, as shown in Figure 3:

  | Public Hostname | Virtual Hostname |
  |---|---|
  | vzhost1c | vzhost1c-vip |
  | vzhost2c | vzhost2c-vip |
  | vzhost3c | vzhost3c-vip |
  | vzhost4c | vzhost4c-vip |

  Figure 3. Cluster Node Information

- On the Specify Network Interface Usage page, accept the default settings.

- On the Storage Option Information page, select the Oracle Automatic Storage Management option.

- On the Create ASM Disk Group page, do the following, as shown in Figure 4:

  - Click Change Discovery Path.
  - In the Change Discovery Path dialog box, specify the discovery path as /dev/did/rdsk/d*s6.
  - For Disk Group Name, specify crsdg.
  - For the Candidate Disks, select /dev/did/rdsk/d6s6, /dev/did/rdsk/d7s6, and /dev/did/rdsk/d8s6.

  Figure 4. Create Oracle Automatic Storage Management Disk Group

- On the Specify ASM Password page, specify the passwords for the SYS and ASMSNMP accounts, as shown in Figure 5.

  Figure 5. Oracle Automatic Storage Management Password

- On the Privileged Operating System Groups page, select the following:

- For Oracle ASM DBA (OSDBA for ASM) Group, select oinstall.

- For Oracle ASM Operator (OSOPER for ASM) Group (Optional), select a group if you want to use one.

- For Oracle ASM Administrator (OSASM) Group, select oinstall.

-

On the Specify Installation Location page, specify the following:

- For Oracle Base, specify /u01/oracle.

- For Software Location, specify /u01/grid/product/11.2.0.3.

- On the Create Inventory page, select /u01/oraInventory for Inventory Directory.

-

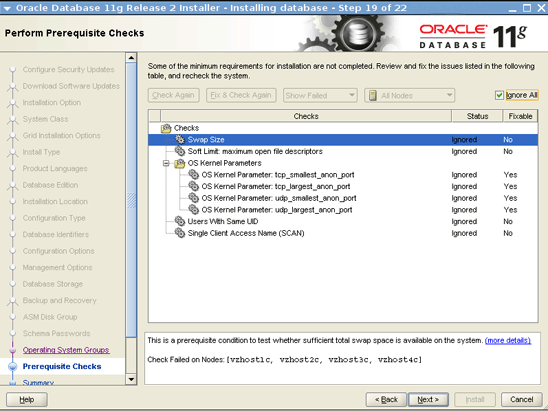

On the Perform Prerequisite Checks page, select Ignore All, as shown in Figure 6.

Figure 6. Perform Prerequisite Checks

-

On the Summary page, click Install to start the software installation.

The Execute Configuration Scripts dialog box asks you to execute the /u01/oraInventory/orainstRoot.sh and /u01/grid/product/11.2.0.3/root.sh scripts as root, as shown in Figure 7.

Figure 7. Execute Configuration Scripts Dialog Box

-

Open a terminal window and execute the scripts on each node, as shown in Listing 5.

    # /u01/oraInventory/orainstRoot.sh
    Changing permissions of /u01/oraInventory.
    Adding read,write permissions for group.
    Removing read,write,execute permissions for world.
    Changing groupname of /u01/oraInventory to oinstall.
    The execution of the script is complete.
    # /u01/grid/product/11.2.0.3/root.sh
    Performing root user operation for Oracle 11g
    The following environment variables are set as:
    ORACLE_OWNER= ouser
    ORACLE_HOME= /u01/grid/product/11.2.0.3
    Enter the full pathname of the local bin directory: [/usr/local/bin]: /opt/local/bin
    Creating /opt/local/bin directory...
    Copying dbhome to /opt/local/bin ...
    Copying oraenv to /opt/local/bin ...
    Copying coraenv to /opt/local/bin ...
    Creating /var/opt/oracle/oratab file...
    Entries will be added to the /var/opt/oracle/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root script.
    Now product-specific root actions will be performed.
    Using configuration parameter file: /u01/grid/product/11.2.0.3/crs/install/crsconfig_params
    Creating trace directory
    User ignored Prerequisites during installation
    OLR initialization - successful
    root wallet
    root wallet cert
    root cert export
    peer wallet
    profile reader wallet
    pa wallet
    peer wallet keys
    pa wallet keys
    peer cert request
    pa cert request
    peer cert
    pa cert
    peer root cert TP
    profile reader root cert TP
    pa root cert TP
    peer pa cert TP
    pa peer cert TP
    profile reader pa cert TP
    profile reader peer cert TP
    peer user cert
    pa user cert
    Adding Clusterware entries to inittab
    CRS-2672: Attempting to start 'ora.mdnsd' on 'vzhost1c'
    CRS-2676: Start of 'ora.mdnsd' on 'vzhost1c' succeeded
    CRS-2672: Attempting to start 'ora.gpnpd' on 'vzhost1c'
    CRS-2676: Start of 'ora.gpnpd' on 'vzhost1c' succeeded
    CRS-2672: Attempting to start 'ora.cssdmonitor' on 'vzhost1c'
    CRS-2672: Attempting to start 'ora.gipcd' on 'vzhost1c'
    CRS-2676: Start of 'ora.cssdmonitor' on 'vzhost1c' succeeded
    CRS-2676: Start of 'ora.gipcd' on 'vzhost1c' succeeded
    CRS-2672: Attempting to start 'ora.cssd' on 'vzhost1c'
    CRS-2672: Attempting to start 'ora.diskmon' on 'vzhost1c'
    CRS-2676: Start of 'ora.diskmon' on 'vzhost1c' succeeded
    CRS-2676: Start of 'ora.cssd' on 'vzhost1c' succeeded
    ASM created and started successfully.
    Disk Group crsdg created successfully.
    clscfg: -install mode specified
    Successfully accumulated necessary OCR keys.
    Creating OCR keys for user 'root', privgrp 'root'..
    Operation successful.
    CRS-4256: Updating the profile
    Successful addition of voting disk 621725b80bf24f53bfc8c56f8eaf3457.
    Successful addition of voting disk 630c40e735134f2bbf78571ea35bb856.
    Successful addition of voting disk 4a78fd6ce8564fdbbfceac0f0e9d7c37.
    Successfully replaced voting disk group with +crsdg.
    CRS-4256: Updating the profile
    CRS-4266: Voting file(s) successfully replaced
    ##  STATE    File Universal Id                File Name Disk group
    --  -----    -----------------                --------- ---------
     1. ONLINE   621725b80bf24f53bfc8c56f8eaf3457 (/dev/did/rdsk/d6s6) [CRSDG]
     2. ONLINE   630c40e735134f2bbf78571ea35bb856 (/dev/did/rdsk/d7s6) [CRSDG]
     3. ONLINE   4a78fd6ce8564fdbbfceac0f0e9d7c37 (/dev/did/rdsk/d8s6) [CRSDG]
    Located 3 voting disk(s).
    CRS-2672: Attempting to start 'ora.asm' on 'vzhost1c'
    CRS-2676: Start of 'ora.asm' on 'vzhost1c' succeeded
    CRS-2672: Attempting to start 'ora.CRSDG.dg' on 'vzhost1c'
    CRS-2676: Start of 'ora.CRSDG.dg' on 'vzhost1c' succeeded
    Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Listing 5. Executing the Scripts

-

After executing both scripts, click OK in the GUI to continue.

If the cluster is not using the DNS network client service, an error message, [INS-20802] Oracle Cluster Verification Utility failed, appears, as shown in Figure 8. You can ignore this error. The oraInstall log also shows that the Cluster Verification Utility can't resolve the SCAN name.

    INFO: Checking Single Client Access Name (SCAN)...
    INFO: Checking TCP connectivity to SCAN Listeners...
    INFO: TCP connectivity to SCAN Listeners exists on all cluster nodes
    INFO: Checking name resolution setup for "vzhost1d"...
    INFO: ERROR:
    INFO: PRVG-1101 : SCAN name "vzhost1d" failed to resolve
    INFO: ERROR:
    INFO: PRVF-4657 : Name resolution setup check for "vzhost1d" (IP address: 10.134.35.99) failed
    INFO: ERROR:
    INFO: PRVF-4663 : Found configuration issue with the 'hosts' entry in the /etc/nsswitch.conf file
    INFO: Verification of SCAN VIP and Listener setup failed

Figure 8. Failure Message

-

To continue, click OK, then click Skip, and then click Next.

Another error message appears, as shown in Figure 9.

Figure 9. Another Error Message

-

Click Yes to continue.

The installation of Oracle Grid Infrastructure 11.2.0.3 is completed.
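Before dismissing the INS-20802 error described above, it can be worth confirming that the SCAN name-resolution messages are the only verification errors in the oraInstall log. The following sketch is an illustration under stated assumptions: the function names `sample_cvu_log` and `unexpected_cvu_errors` are hypothetical, the sample input is the log excerpt quoted earlier, and the list of "expected" codes is simply the three codes that appear in that excerpt. Whether other codes matter in a given environment is a judgment the script does not make.

```sh
#!/bin/sh
# Hypothetical helper: prints the log excerpt quoted in this article.
sample_cvu_log() {
    cat <<'EOF'
INFO: Checking name resolution setup for "vzhost1d"...
INFO: ERROR:
INFO: PRVG-1101 : SCAN name "vzhost1d" failed to resolve
INFO: ERROR:
INFO: PRVF-4657 : Name resolution setup check for "vzhost1d" (IP address: 10.134.35.99) failed
INFO: ERROR:
INFO: PRVF-4663 : Found configuration issue with the 'hosts' entry in the /etc/nsswitch.conf file
INFO: Verification of SCAN VIP and Listener setup failed
EOF
}

# Hypothetical helper: reads a log on stdin and prints each PRVF-/PRVG-
# code once, skipping the three SCAN-related codes shown in the excerpt.
# Assumption: on Oracle Solaris 10, nawk may be needed in place of awk.
unexpected_cvu_errors() {
    awk '
        BEGIN {
            expected["PRVG-1101"] = 1
            expected["PRVF-4657"] = 1
            expected["PRVF-4663"] = 1
        }
        {
            line = $0
            # Walk through every code on the line, left to right.
            while (match(line, /PRV[FG]-[0-9]+/)) {
                code = substr(line, RSTART, RLENGTH)
                if (!(code in expected) && !(code in seen)) {
                    seen[code] = 1
                    print code
                }
                line = substr(line, RSTART + RLENGTH)
            }
        }
    '
}

extra=$(sample_cvu_log | unexpected_cvu_errors)
if [ -z "$extra" ]; then
    echo "only the SCAN name-resolution errors were found"
else
    echo "unexpected verification errors: $extra"
fi
```

Against a real installation, the function would read the installer log instead of the sample, for example `unexpected_cvu_errors < <path to the oraInstall log>`.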

-

From any node, check the status of Oracle Grid Infrastructure resources, as shown in Listing 6:

    # /u01/grid/product/11.2.0.3/bin/crsctl status res -t
    --------------------------------------------------------------------------------
    NAME           TARGET  STATE        SERVER                   STATE_DETAILS
    --------------------------------------------------------------------------------
    Local Resources
    --------------------------------------------------------------------------------
    ora.CRSDG.dg
                   ONLINE  ONLINE       vzhost1c
                   ONLINE  ONLINE       vzhost2c
                   ONLINE  ONLINE       vzhost3c
                   ONLINE  ONLINE       vzhost4c
    ora.LISTENER.lsnr
                   ONLINE  ONLINE       vzhost1c
                   ONLINE  ONLINE       vzhost2c
                   ONLINE  ONLINE       vzhost3c
                   ONLINE  ONLINE       vzhost4c
    ora.asm
                   ONLINE  ONLINE       vzhost1c                 Started
                   ONLINE  ONLINE       vzhost2c                 Started
                   ONLINE  ONLINE       vzhost3c                 Started
                   ONLINE  ONLINE       vzhost4c                 Started
    ora.gsd
                   OFFLINE OFFLINE      vzhost1c
                   OFFLINE OFFLINE      vzhost2c
                   OFFLINE OFFLINE      vzhost3c
                   OFFLINE OFFLINE      vzhost4c
    ora.net1.network
                   ONLINE  ONLINE       vzhost1c
                   ONLINE  ONLINE       vzhost2c
                   ONLINE  ONLINE       vzhost3c
                   ONLINE  ONLINE       vzhost4c
    ora.ons
                   ONLINE  ONLINE       vzhost1c
                   ONLINE  ONLINE       vzhost2c
                   ONLINE  ONLINE       vzhost3c
                   ONLINE  ONLINE       vzhost4c
    --------------------------------------------------------------------------------
    Cluster Resources
    --------------------------------------------------------------------------------
    ora.LISTENER_SCAN1.lsnr
          1        ONLINE  ONLINE       vzhost1c
    ora.cvu
          1        ONLINE  ONLINE       vzhost2c
    ora.oc4j
          1        ONLINE  ONLINE       vzhost3c
    ora.scan1.vip
          1        ONLINE  ONLINE       vzhost4c
    ora.vzhost1c.vip
          1        ONLINE  ONLINE       vzhost1c
    ora.vzhost2c.vip
          1        ONLINE  ONLINE       vzhost2c
    ora.vzhost3c.vip
          1        ONLINE  ONLINE       vzhost3c
    ora.vzhost4c.vip
          1        ONLINE  ONLINE       vzhost4c

Listing 6. Checking the Status of the Resources
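When reading output like Listing 6, the rows that matter are those where STATE differs from TARGET. In Listing 6, ora.gsd is OFFLINE with an OFFLINE target, so it is not a mismatch. The sketch below filters for mismatches. It is an illustration only: the function names `sample_crsctl_output` and `mismatched_resources` are hypothetical, the parser assumes the `crsctl status res -t` layout shown in Listing 6, and the sample input is a shortened excerpt of that listing.

```sh
#!/bin/sh
# Hypothetical helper: prints a shortened excerpt of Listing 6.
sample_crsctl_output() {
    cat <<'EOF'
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.asm
               ONLINE  ONLINE       vzhost1c                 Started
               ONLINE  ONLINE       vzhost2c                 Started
ora.gsd
               OFFLINE OFFLINE      vzhost1c
               OFFLINE OFFLINE      vzhost2c
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.scan1.vip
      1        ONLINE  ONLINE       vzhost4c
EOF
}

# Hypothetical helper: reads "crsctl status res -t" output on stdin and
# prints "<resource> <server> <state>" for rows whose STATE differs from
# TARGET. Cluster resources carry a leading cardinality number, which is
# skipped when present.
mismatched_resources() {
    awk '
        /^ora\./ { res = $1; next }        # resource name line
        res != "" && NF >= 2 {
            i = ($1 ~ /^[0-9]+$/) ? 2 : 1  # skip the cardinality column
            target = $i
            state = $(i + 1)
            server = $(i + 2)
            if ((target == "ONLINE" || target == "OFFLINE") && target != state)
                print res, server, state
        }
    '
}

bad=$(sample_crsctl_output | mismatched_resources)
if [ -z "$bad" ]; then
    echo "every resource matches its target state"
else
    echo "$bad"
fi
```

On a zone cluster node, the real output would be piped in, for example `/u01/grid/product/11.2.0.3/bin/crsctl status res -t | mismatched_resources`.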

-

Execute the following commands to launch the ASM Configuration Assistant, which is shown in Figure 10:

    $ export DISPLAY=<hostname>:<n>
    $ /u01/grid/product/11.2.0.3/bin/asmca

Figure 10. Oracle ASM Configuration Assistant

-

In the ASM Configuration Assistant, do the following:

- In the Disk Groups tab, click Create.

- Do the following in the Create Disk Group page (shown in Figure 11) to create an Oracle Automatic Storage Management disk group called swbdg for database creation:

  - For Disk Group Name, specify swbdg.
  - Select /dev/did/rdsk/d9s6, /dev/did/rdsk/d10s6, /dev/did/rdsk/d14s6, and /dev/did/rdsk/d15s6.
  - For Failure Group, specify fgr1 for /dev/did/rdsk/d9s6 and /dev/did/rdsk/d10s6.
  - For Failure Group, specify fgr2 for /dev/did/rdsk/d14s6 and /dev/did/rdsk/d15s6.
  - Click OK to create the disk group.

  Figure 11. Create Disk Group

- After the swbdg disk group creation is complete, click Exit to close the ASM Configuration Assistant.

-

From one node, run the following commands to start Oracle Universal Installer and install Oracle Database:

    $ export DISPLAY=<hostname>:<n>
    $ cd <PATH to 11.2.0.3 based software image>/database
    $ ./runInstaller

-

Provide input to the Oracle Universal Installer, as follows:

- Provide the requested information on the Configure Security Updates page and the Download Software Updates page.

- On the Select Installation Option page, select Create and configure a database.

- On the System Class page, select Server Class.

- On the Grid Installation Options page, do the following, as shown in Figure 12:

  - Select Oracle Real Application Clusters database installation.
  - Ensure all nodes are selected.

  Figure 12. Oracle Grid Infrastructure Installation Options

- On the Select Install Type page, select Advanced install.

- On the Select Product Languages page, use the default value.

- On the Select Database Edition page, select Enterprise Edition.

-

On the Specify Installation Location page, specify the following:

- For Oracle Base, select /u01/oracle.

- For Software Location, select /u01/oracle/product/11.2.0.3.

- On the Select Configuration Type page, select General Purpose/Transaction Processing.

-

On the Specify Database Identifiers page, specify the following:

- For Global Database Name, specify swb.

- For Oracle Service Identifier, specify swb.

- On the Specify Configuration Options page, shown in Figure 13, use the default settings.

  Figure 13. Specify Configuration Options

- On the Specify Management Options page, use the default settings.

- On the Specify Database Storage Options page, select Oracle Automatic Storage Management and specify the password for the ASMSNMP user.

- On the Specify Recovery Options page, select Do not enable automated backups.

- On the Select ASM Disk Group page, select SWBDG as the disk group name, as shown in Figure 14.

  Figure 14. Select Disk Group Name

- On the Specify Schema Passwords page, specify a password for the SYS, SYSTEM, SYSMAN, and DBSNMP accounts.

-

On the Privileged Operating System Groups page, specify the following:

- For Database Administrator (OSDBA) Group, select dba.

- For Database Operator (OSOPER) Group (Optional), select oinstall.

- On the Perform Prerequisite Checks page, select Ignore All, as shown in Figure 15.

  Figure 15. Perform Prerequisite Checks

  An INS-13016 message appears, as shown in Figure 16.

- Select Yes to continue.

  Figure 16. Message During Prerequisite Checks

- On the Summary page, shown in Figure 17, click Install.

  Figure 17. Summary Page

- In the Database Configuration Assistant dialog box, click OK to continue, as shown in Figure 18.

  Figure 18. Database Configuration Assistant Dialog Box

- The Execute Configuration Scripts dialog box asks you to execute root.sh on each node, as shown in Figure 19.

  Figure 19. Execute Configuration Scripts Dialog Box

- After executing the root.sh script on the last node, click OK to continue. The Finish page shows that the installation and configuration of Oracle Database is complete, as shown in Figure 20.

  Figure 20. Finish Page

-

From one zone cluster node, execute the following command to register the SUNW.crs_framework resource type in the zone cluster:

    # clrt register SUNW.crs_framework

-

Add an instance of the SUNW.crs_framework resource type to the Oracle RAC framework resource group:

    # clresource create -t SUNW.crs_framework \
    -g rac-framework-rg \
    -p resource_dependencies=rac-framework-rs \
    crs-framework-rs

-

Register the scalable Oracle Automatic Storage Management instance proxy resource type:

    # clresourcetype register SUNW.scalable_asm_instance_proxy

-

Register the Oracle Automatic Storage Management disk group resource type:

    # clresourcetype register SUNW.scalable_asm_diskgroup_proxy

-

Create resource groups asm-inst-rg and asm-dg-rg:

    # clresourcegroup create -S asm-inst-rg asm-dg-rg

-

Set a strong positive affinity on rac-framework-rg by asm-inst-rg:

    # clresourcegroup set -p rg_affinities=++rac-framework-rg asm-inst-rg

-

Set a strong positive affinity on asm-inst-rg by asm-dg-rg:

    # clresourcegroup set -p rg_affinities=++asm-inst-rg asm-dg-rg

-

Create a

SUNW.scalable_asm_instance_proxyresource and set the resource dependencies:

# clresource create asm-inst-rg \ -t SUNW.scalable_asm_instance_proxy \ -p ORACLE_HOME=/u01/grid/product/11.2.0.3 \ -p CRS_HOME=/u01/grid/product/11.2.0.3 \ -p "ORACLE_SID{vzhost1c}"=+ASM1 \ -p "ORACLE_SID{vzhost2c}"=+ASM2 \ -p "ORACLE_SID{vzhost3c}"=+ASM3 \ -p "ORACLE_SID{vzhost4c}"=+ASM4 \ -p resource_dependencies_offline_restart=crs-framework-rs \ -d asm-inst-rs -

Add an Oracle Automatic Storage Management disk group resource to the asm-dg-rg resource group:

# clresource create -g asm-dg-rg -t SUNW.scalable_asm_diskgroup_proxy \
    -p asm_diskgroups=CRSDG,SWBDG \
    -p resource_dependencies_offline_restart=asm-inst-rs \
    -d asm-dg-rs

On a cluster node, bring the asm-inst-rg resource group online in a managed state:

# clresourcegroup online -eM asm-inst-rg

On a cluster node, bring the asm-dg-rg resource group online in a managed state:

# clresourcegroup online -eM asm-dg-rg

Create a scalable resource group to contain the proxy resource for the Oracle RAC database server:

# clresourcegroup create -S \
    -p rg_affinities=++rac-framework-rg,++asm-dg-rg \
    rac-swbdb-rg

Register the SUNW.scalable_rac_server_proxy resource type:

# clresourcetype register SUNW.scalable_rac_server_proxy

Add a database resource to the rac-swbdb-rg resource group:

# clresource create -g rac-swbdb-rg \
    -t SUNW.scalable_rac_server_proxy \
    -p resource_dependencies=rac-framework-rs \
    -p resource_dependencies_offline_restart=crs-framework-rs,asm-dg-rs \
    -p oracle_home=/u01/oracle/product/11.2.0.3 \
    -p crs_home=/u01/grid/product/11.2.0.3 \
    -p db_name=swb \
    -p "oracle_sid{vzhost1c}"=swb1 \
    -p "oracle_sid{vzhost2c}"=swb2 \
    -p "oracle_sid{vzhost3c}"=swb3 \
    -p "oracle_sid{vzhost4c}"=swb4 \
    -d rac-swb-srvr-proxy-rs

Bring the resource group online:

# clresourcegroup online -emM rac-swbdb-rg

Check the status of the cluster resource, as shown in Listing 7.

# clrs status

=== Cluster Resources ===

Resource Name            Node Name   State    Status Message
-------------            ---------   -----    --------------
crs_framework-rs         vzhost1c    Online   Online
                         vzhost2c    Online   Online
                         vzhost3c    Online   Online
                         vzhost4c    Online   Online

rac-framework-rs         vzhost1c    Online   Online
                         vzhost2c    Online   Online
                         vzhost3c    Online   Online
                         vzhost4c    Online   Online

asm-inst-rs              vzhost1c    Online   Online - +ASM1 is UP and ENABLED
                         vzhost2c    Online   Online - +ASM2 is UP and ENABLED
                         vzhost3c    Online   Online - +ASM3 is UP and ENABLED
                         vzhost4c    Online   Online - +ASM4 is UP and ENABLED

asm-dg-rs                vzhost1c    Online   Online - Mounted: SWBDG
                         vzhost2c    Online   Online - Mounted: SWBDG
                         vzhost3c    Online   Online - Mounted: SWBDG
                         vzhost4c    Online   Online - Mounted: SWBDG

rac-swb-srvr-proxy-rs    vzhost1c    Online   Online - Oracle instance UP
                         vzhost2c    Online   Online - Oracle instance UP
                         vzhost3c    Online   Online - Oracle instance UP
                         vzhost4c    Online   Online - Oracle instance UP

- "Running Oracle RAC Application Clusters on Oracle Solaris Zone Clusters" white paper: http://www.oracle.com/technetwork/articles/servers-storage-admin/o11-062-rac-solariszonescluster-429206.pdf

- Oracle Solaris Cluster 3.3 documentation library: http://www.oracle.com/solaris/technologies/cluster-documentation.html

- All Oracle Solaris Cluster technical resources: http://www.oracle.com/solaris/technologies/cluster-technical-resources.html

- Oracle Solaris Cluster 3.3 Release Notes: http://docs.oracle.com/cd/E18728_01/html/E22274/index.html

- Oracle Solaris patches and updates: http://www.oracle.com/middleware/technologies/cluster-archive.html

- Oracle Solaris Cluster downloads: http://www.oracle.com/middleware/technologies/solaris-cluster-downloads.html

- Oracle Solaris Cluster training: http://www.oracle.com/solaris/technologies/cluster-overview.html

Create the Oracle RAC Framework Resources for the Zone Cluster

Perform the following steps from one node to create the Oracle RAC framework:

Set Up the Root Environment in Local Zone Cluster z11gr2A

From each global zone cluster node (phyhost1, phyhost2, phyhost3, and phyhost4), do the following:

Create a User and Group for the Oracle Software

Install Oracle Grid Infrastructure 11.2.0.3 in the Oracle Solaris Zone Cluster Nodes

Install Oracle Database 11.2.0.3 and Create the Database

Create the Oracle Solaris Cluster Resource

Use the following procedure to create the Oracle Solaris Cluster resource. Alternatively, you can use the clsetup wizard.
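Whichever route is used, the final `clrs status` output (Listing 7) can be scanned by a script instead of by eye. The following is a minimal sketch, not part of the original procedure. It assumes the column layout shown in Listing 7, where the first row of each resource starts with the resource name and the rows for the remaining nodes are indented. The function name `check_online` and the shortened sample input are hypothetical.

```shell
#!/bin/sh
# check_online
# Reads `clrs status`-style text on stdin and prints every resource/node
# pair whose State column is not "Online". Returns non-zero if any such
# pair is found.
check_online() {
    awk '
        # Skip the banner, column headings, underline row, and blank lines.
        /^===/ || /^Resource Name/ || /^-+/ || NF == 0 { next }
        # First row of a resource: name, node, state, status message.
        /^[^ \t]/ { res = $1; node = $2; state = $3 }
        # Indented row: another node of the same resource.
        /^[ \t]/  { node = $1; state = $2 }
        state != "Online" {
            printf "%s on %s: %s\n", res, node, state
            bad = 1
        }
        END { exit bad + 0 }
    '
}

# Demonstration with a shortened sample; on a zone cluster node the input
# would come from:  clrs status | check_online
if check_online <<'EOF'
=== Cluster Resources ===

Resource Name      Node Name   State    Status Message
-------------      ---------   -----    --------------
asm-inst-rs        vzhost1c    Online   Online - +ASM1 is UP and ENABLED
                   vzhost2c    Online   Online - +ASM2 is UP and ENABLED
EOF
then
    echo "all resources online"
fi
```

A non-zero return value makes the check easy to use as a gate in a larger deployment script.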

See Also

Here are some additional resources:

About the Author

Vinh Tran is a Quality Engineer in the Oracle Solaris Cluster Group. His responsibilities include, but are not limited to, certification and qualification of Oracle RAC on Oracle Solaris Cluster.

Revision 2.0, 06/06/2014

Revision 1.0, 06/26/2012