- Revision History

- Overview

- Update Tasks

- Feature Summary

- Transportation and Global Trade Platform

- Architecture

- User Interface Refresh

- Accessibility - Keyboard Navigation

- Accessibility - Skip Navigation Menu

- Accessibility - Validate Usage of Color

- Accessibility - Screen Reader

- Accessibility - Documentation

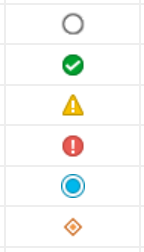

- User Experience - General - New Indicators

- Home Experience Improvements - Default Colors and Theme Management Enhancements

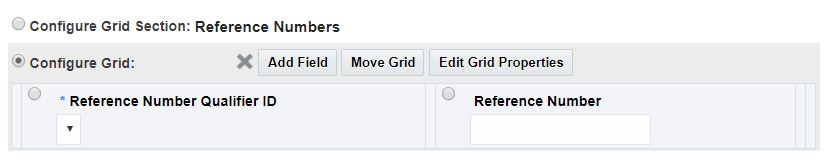

- Manager Layout - Support Removal of Reference Number Grid

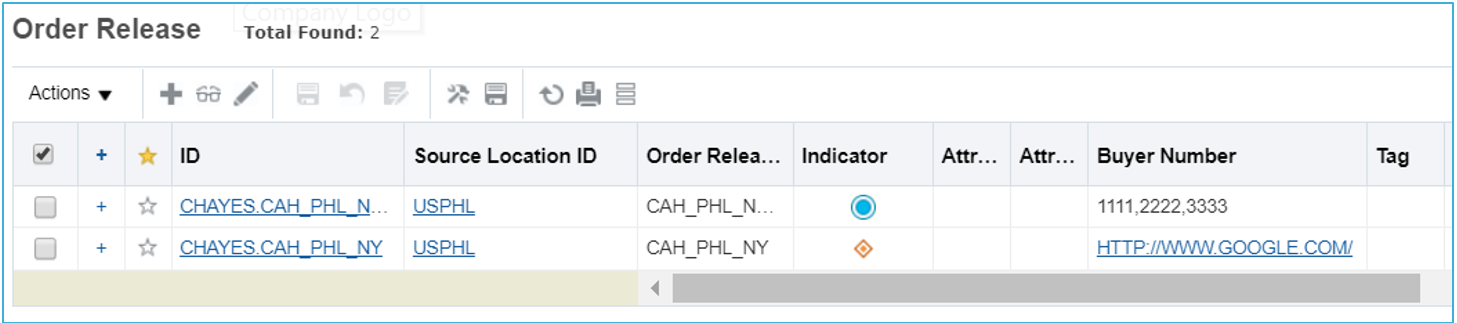

- Screen Set Result Improvements

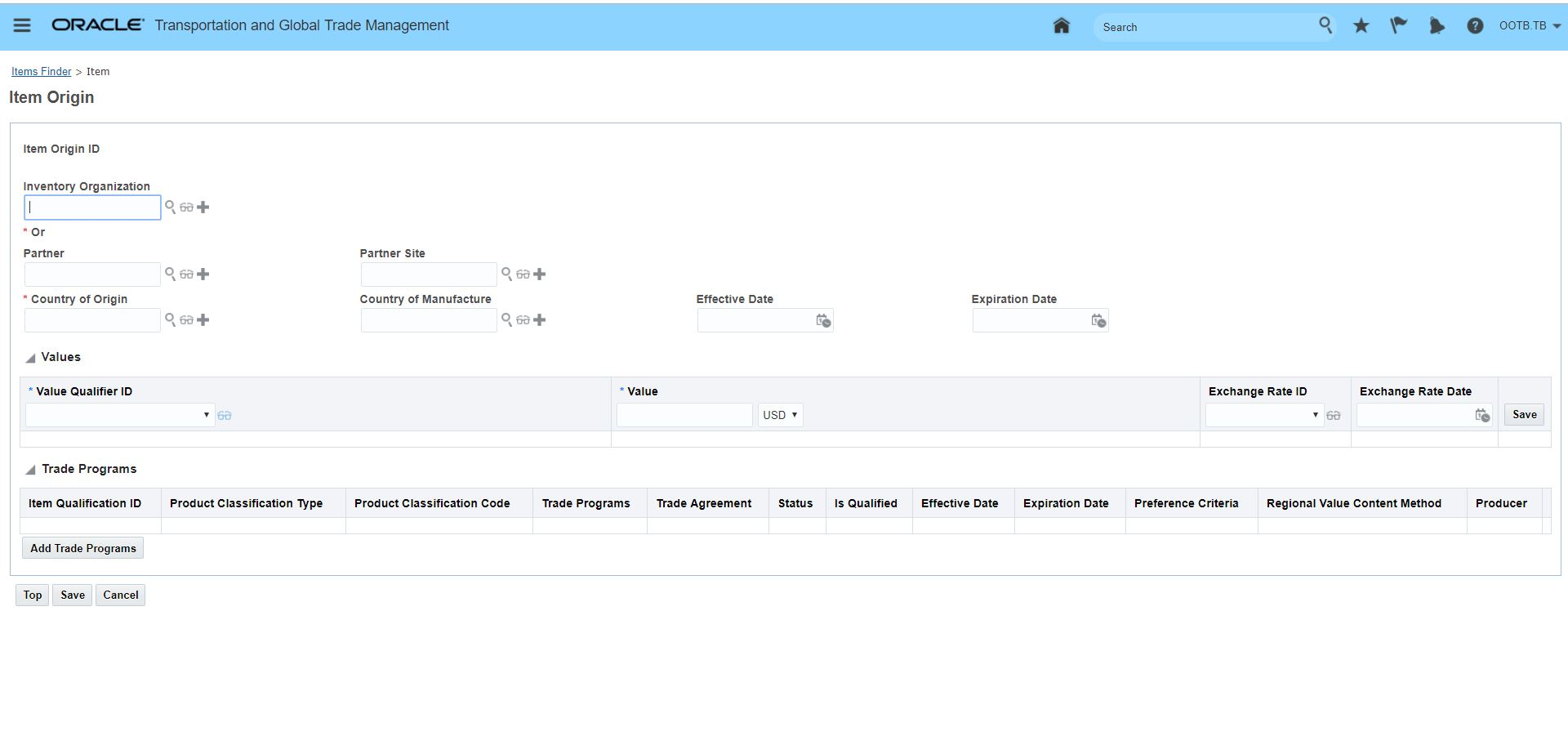

- Item Unified UI

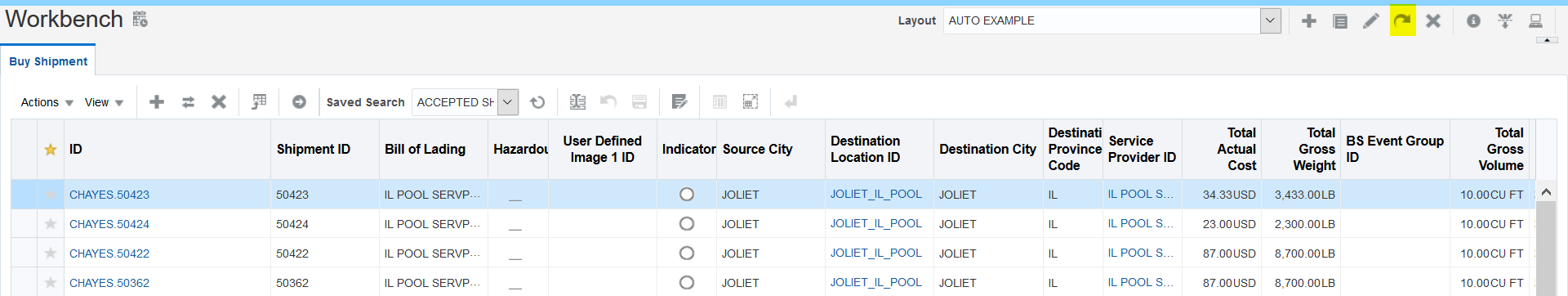

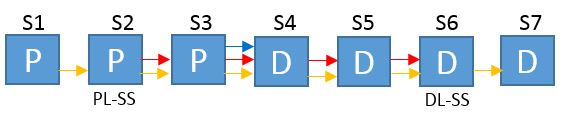

- Workbench

- Workbench - Additional GTM Objects Supported in the Workbench

- Workbench - Additional OTM Objects Supported in the Workbench

- Workbench - Export to Excel Support for Workbench Tables

- Workbench - Layout Messages

- Workbench - Splitter Configuration - Split Existing Region

- Workbench - Layout Display Format

- Workbench - Manager Layout a Region

- Workbench - Selected Rows Totals for Workbench Tables

- Workbench - Mass Update Support for Workbench Tables

- Workbench - Multiple Masters to One Detail Table

- Workbench - View Only Access

- Workbench - Refresh All

- Workbench - Refresh After Action

- Workbench - Refresh Detail Tables When Master Table is Refreshed

- Workbench - Saved Query No Longer Runs During Creation or Edit of a Workbench Table

- Other Improvements

- Oracle Transportation Management (Base)

- External Distance Engine and Map Enhancements

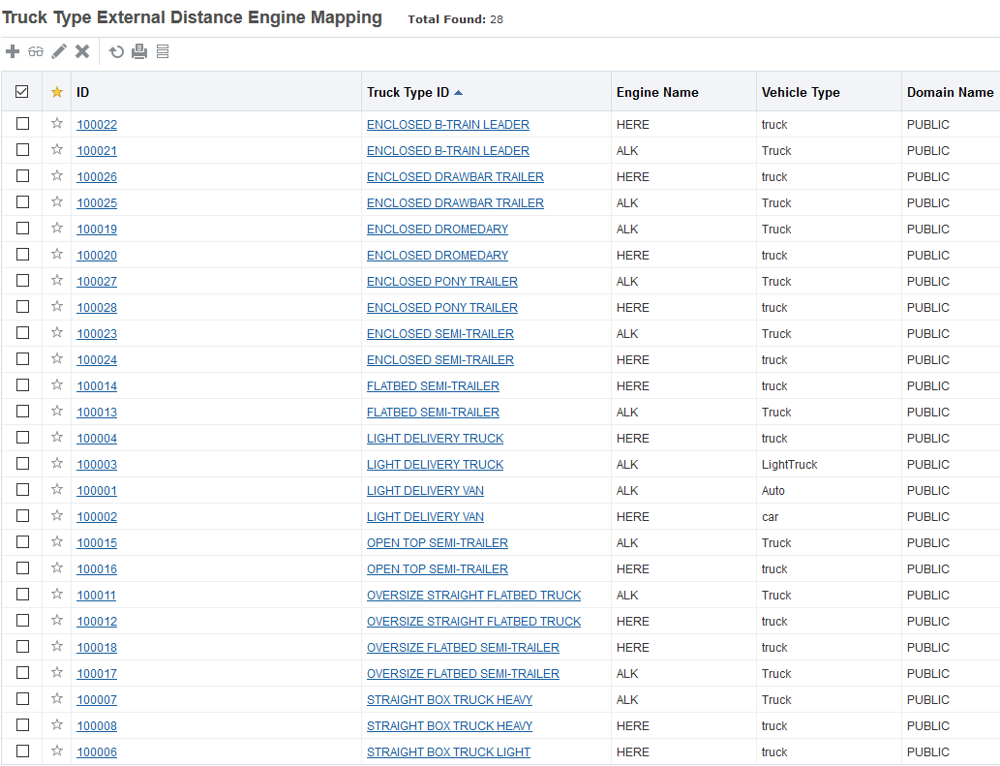

- Simplified External Distance Engine Configuration UI

- Screen Set - Configure Map Hover Fields

- Workbench Map - Expose Vendor Map Controls

- Workbench - Configure Map Hover Text in Screen Set

- Workbench Map - Support Multiple Maps in Workbench Layout

- Workbench Map - External Distance Engine and Map - Consider Traffic Between Stops

- Workbench Map - Consider Hazmat for Each Pair of Stops

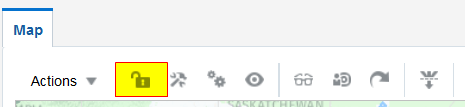

- Workbench Map - Lock Zoom Level and Lock View on Map

- Workbench Map - Additional HERE Supported Parameters

- Workbench Map - ALK Rail Routes

- Workbench Map - Additional ALK Supported Parameters

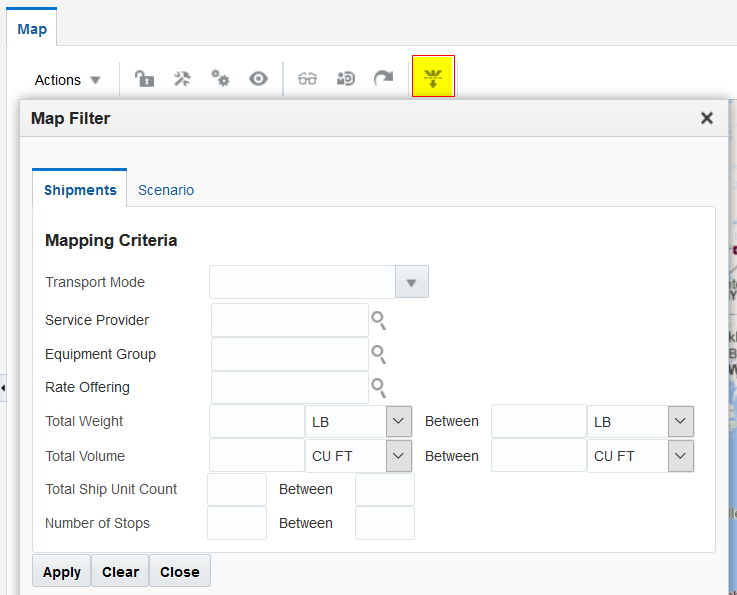

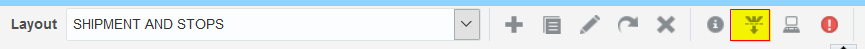

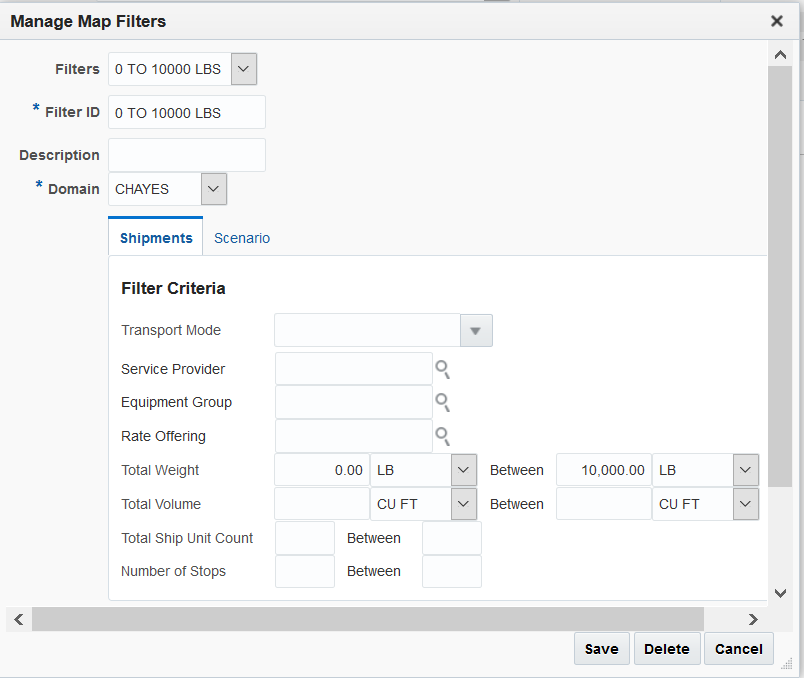

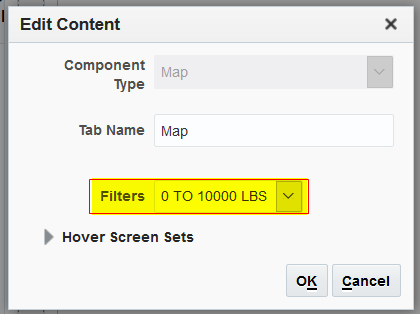

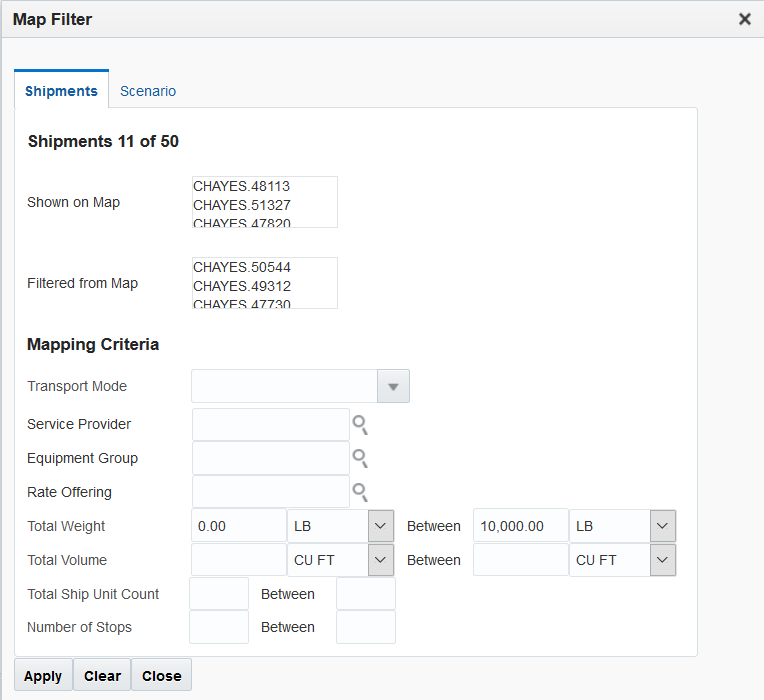

- Workbench Map - Map Filters

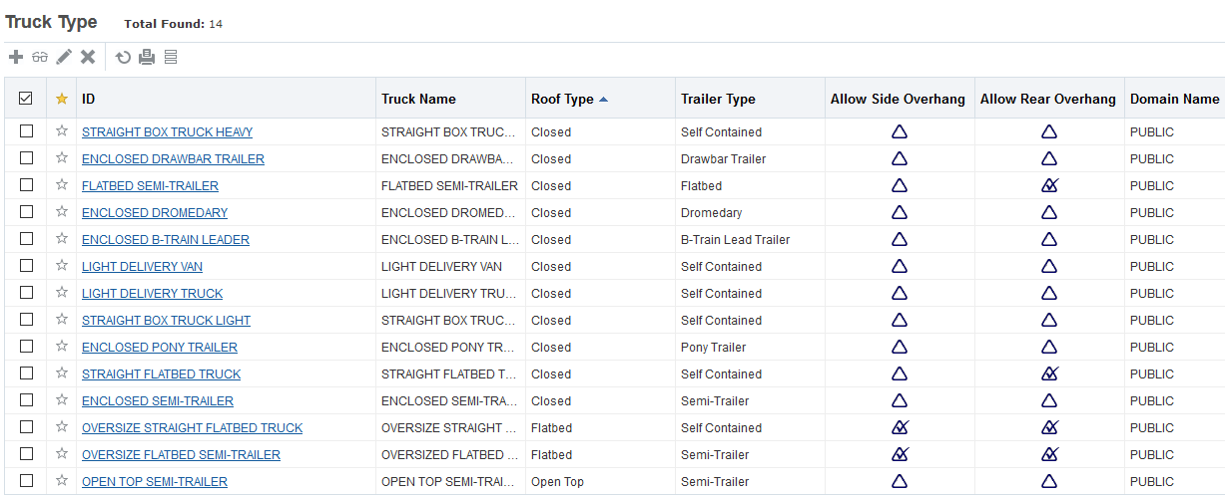

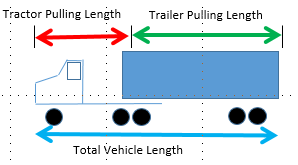

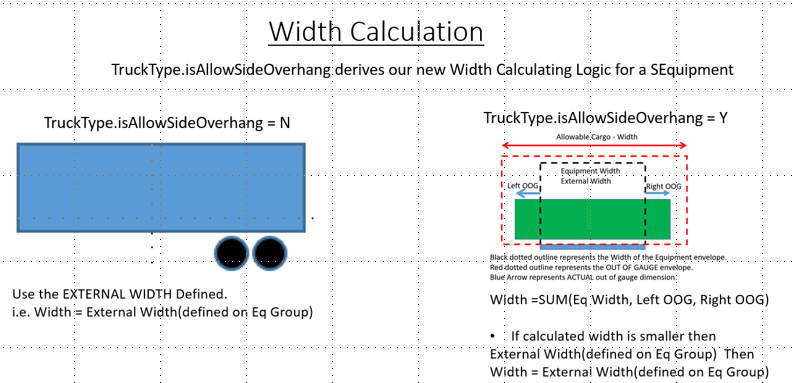

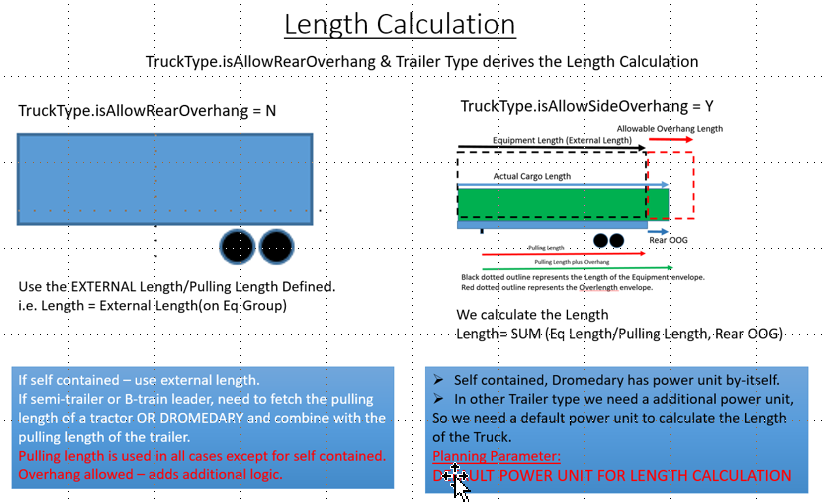

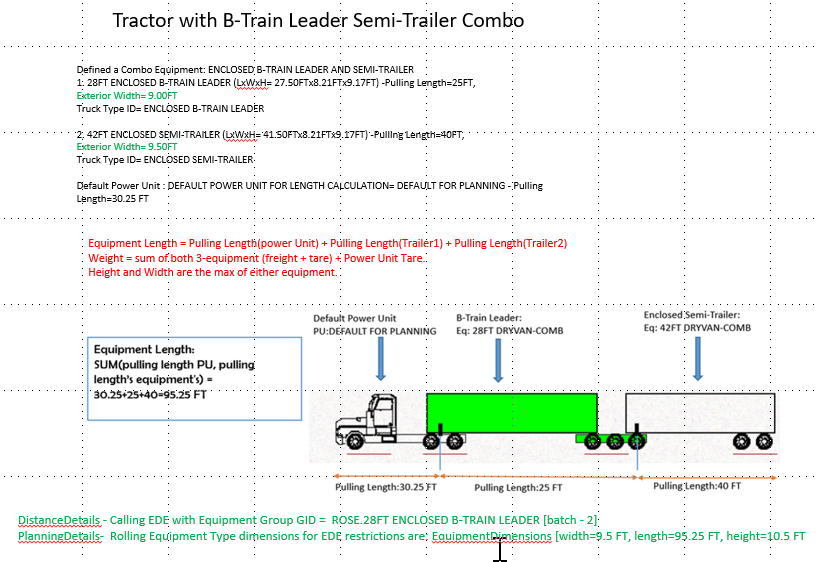

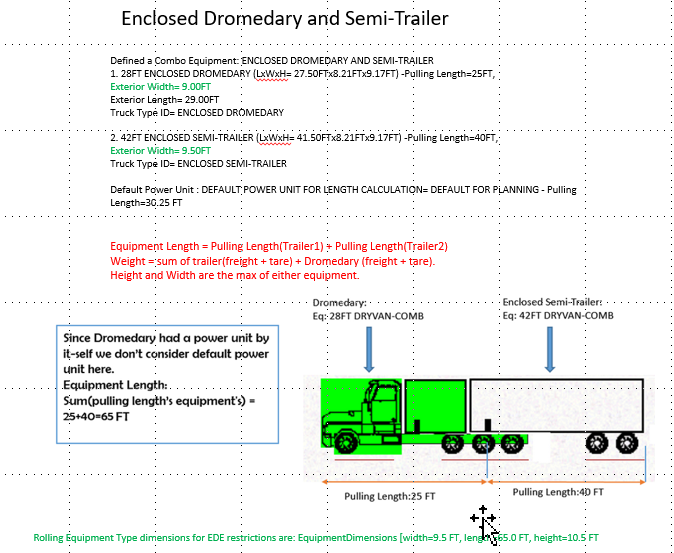

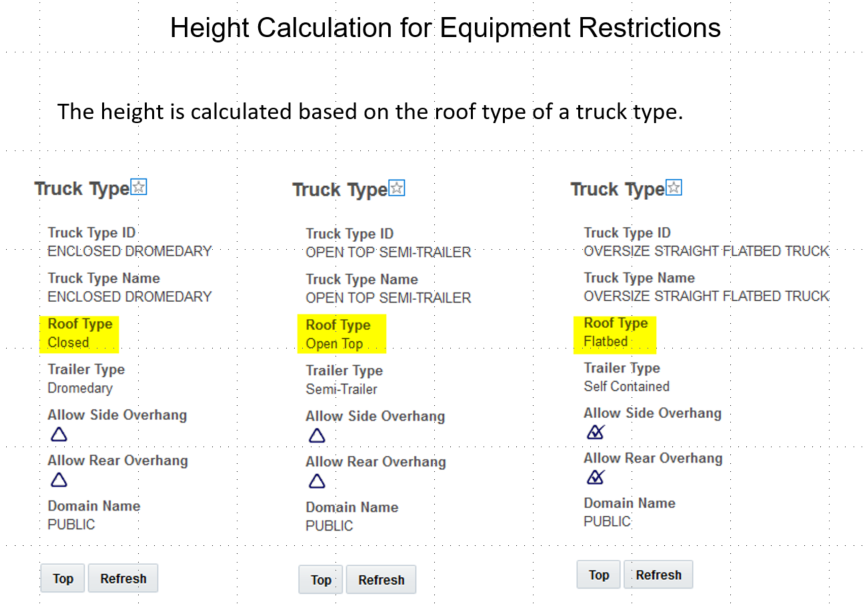

- External Distance Engine and External Service Engine Consider Equipment Restrictions

- Transportation Operational Planning

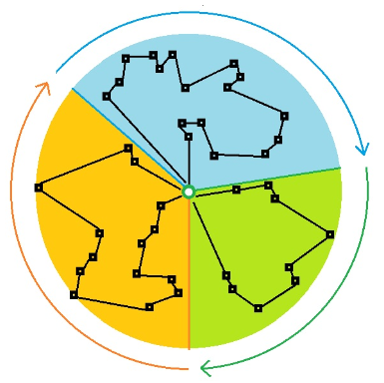

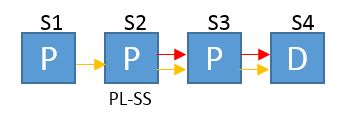

- Clustering Merge Algorithm

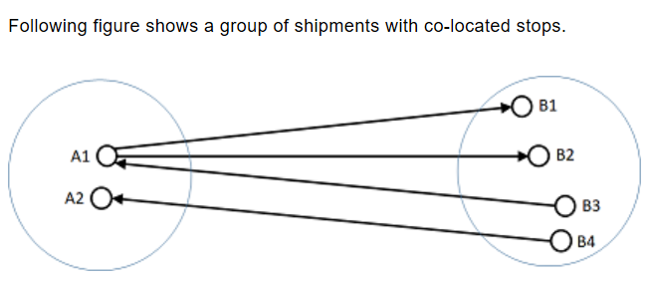

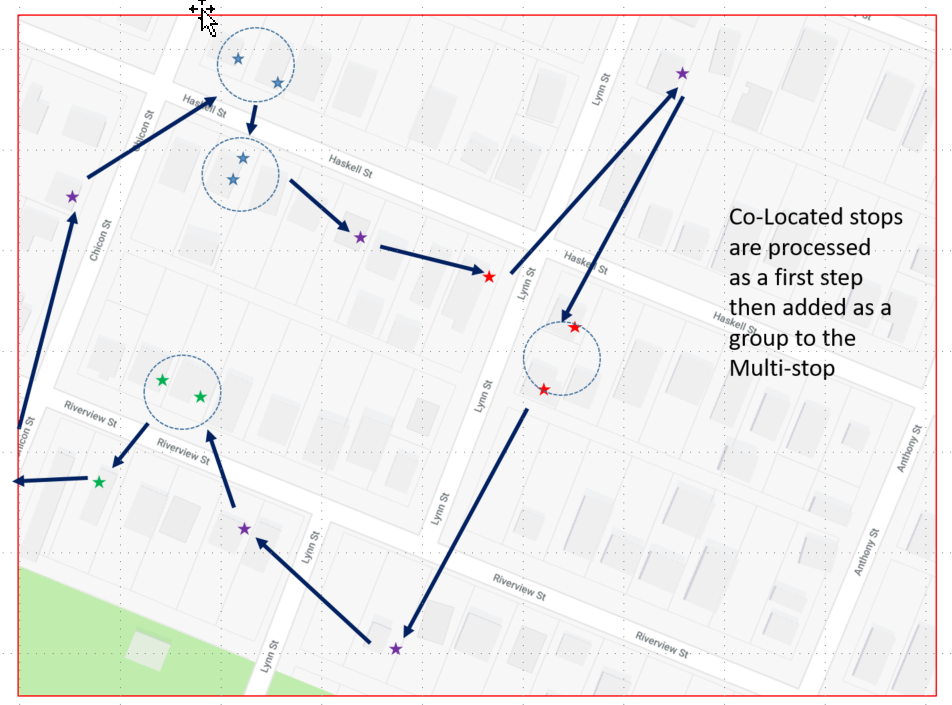

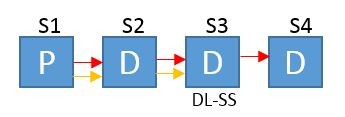

- Multi-Stop Consolidation for Co-Located Stops

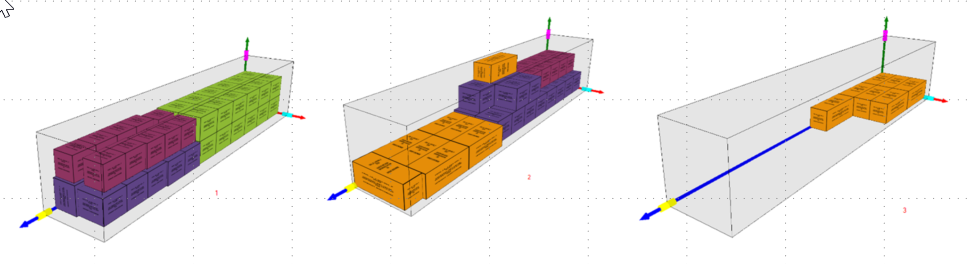

- Load Configuration - Scoring Algorithm Load Bearing

- Consider Service Provider Capacity Across Days

- Honor Location Inactive Flag for Intermediate Locations in Network Routing

- Rule 11 and Network Routing

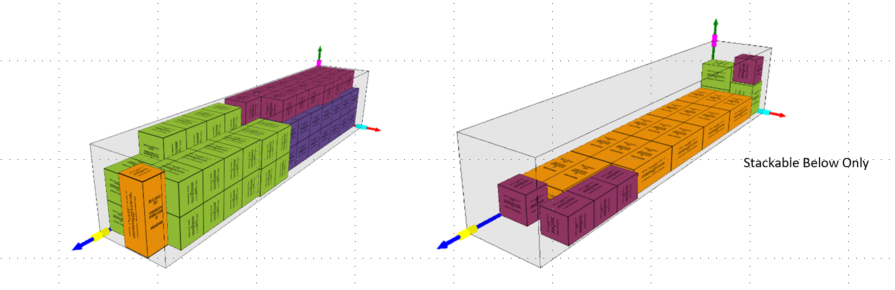

- Tracking Event Ahead/Late Calculation Based on ETA

- Ability to Turn Off Rating Within Network Routing

- Center of Gravity Out of Bounds Reporting

- Top-Off Orders

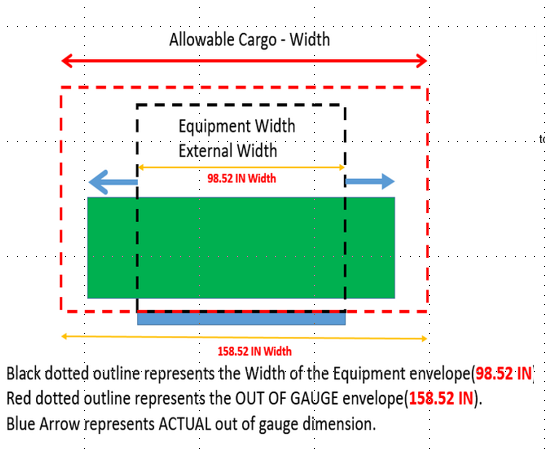

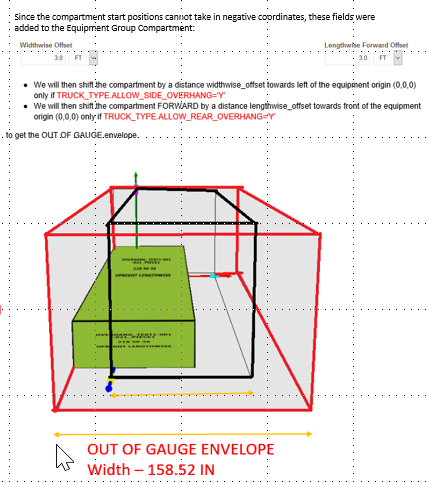

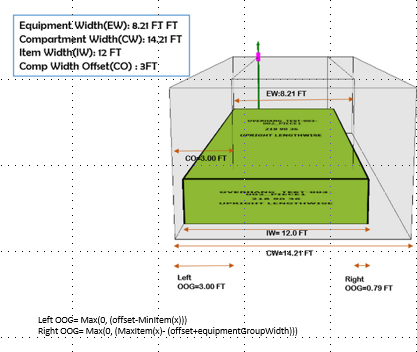

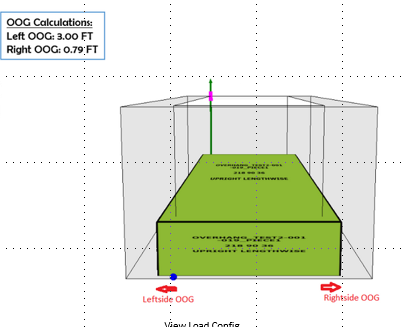

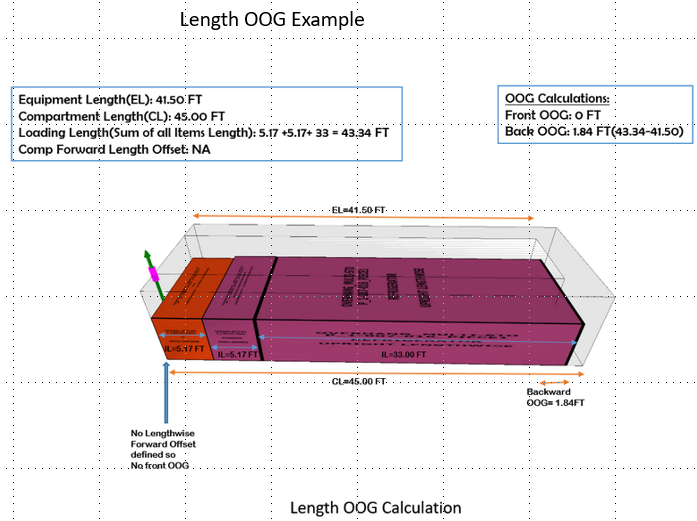

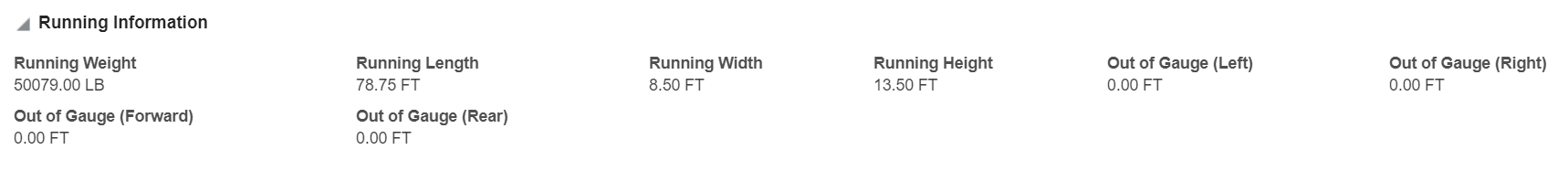

- Out of Gauge Load Building

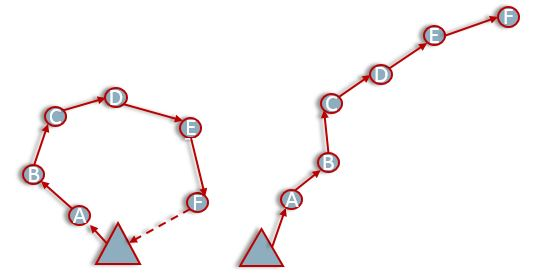

- Network Routing - Allow Order to Start and End at Through Point

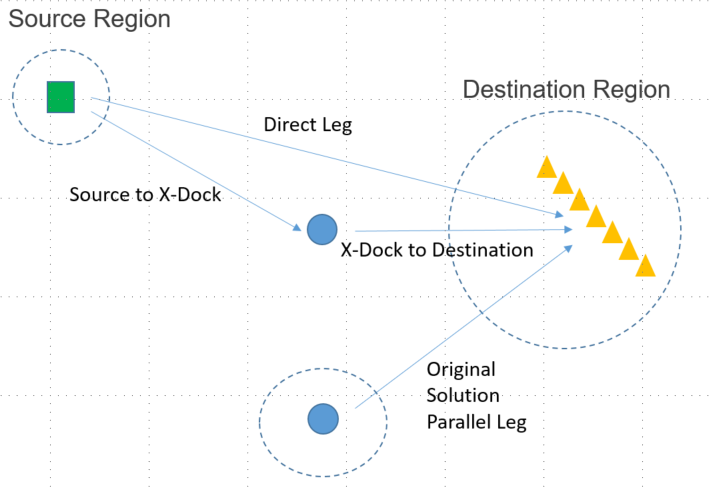

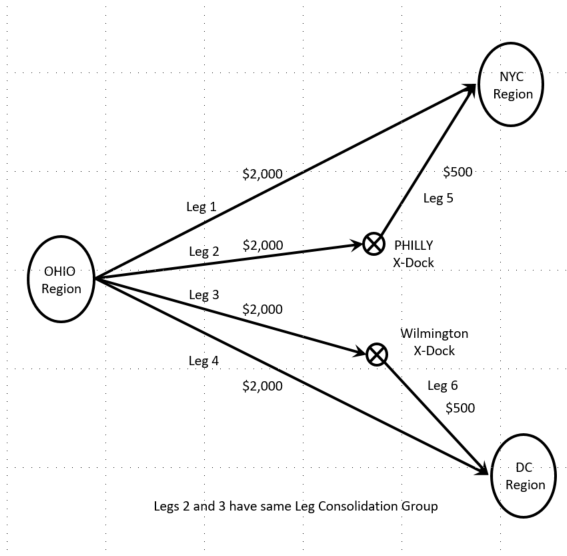

- Network Routing - Cross Leg Consolidation

- Oracle Fleet Management

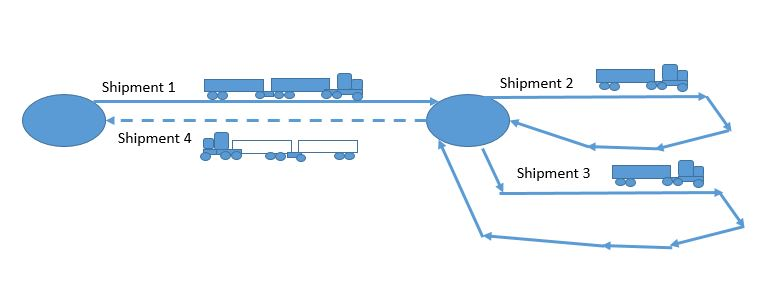

- Combination Equipment Group Usability - Return Set Scenario

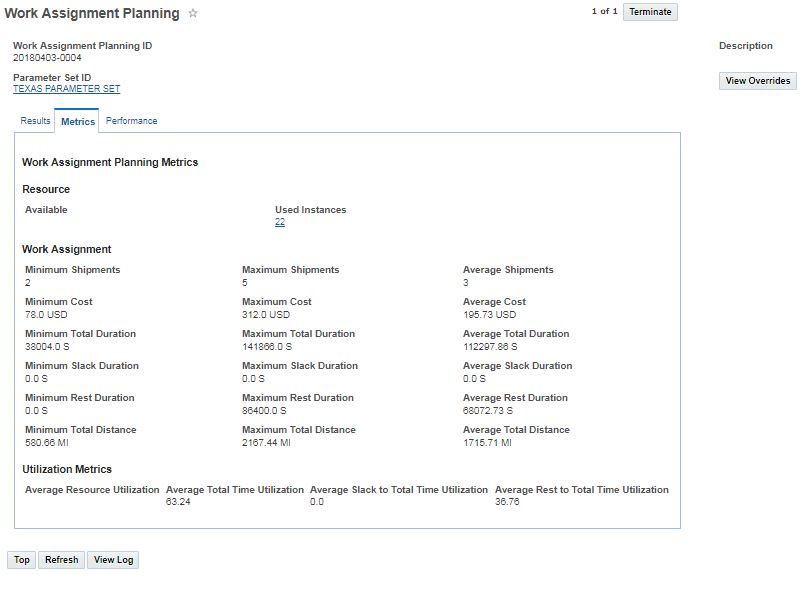

- Stand Alone Work Assignment Process

- Solution Quality Improvement for Round-Trip Shipment Sequence vs. One-Way Shipment Sequences

- Estimate Hours of Service When Tracking Events Are Received

- Combination Equipment Group Usability - Support Multi-Stop Scenarios

- Freight Payment, Billing, and Claims

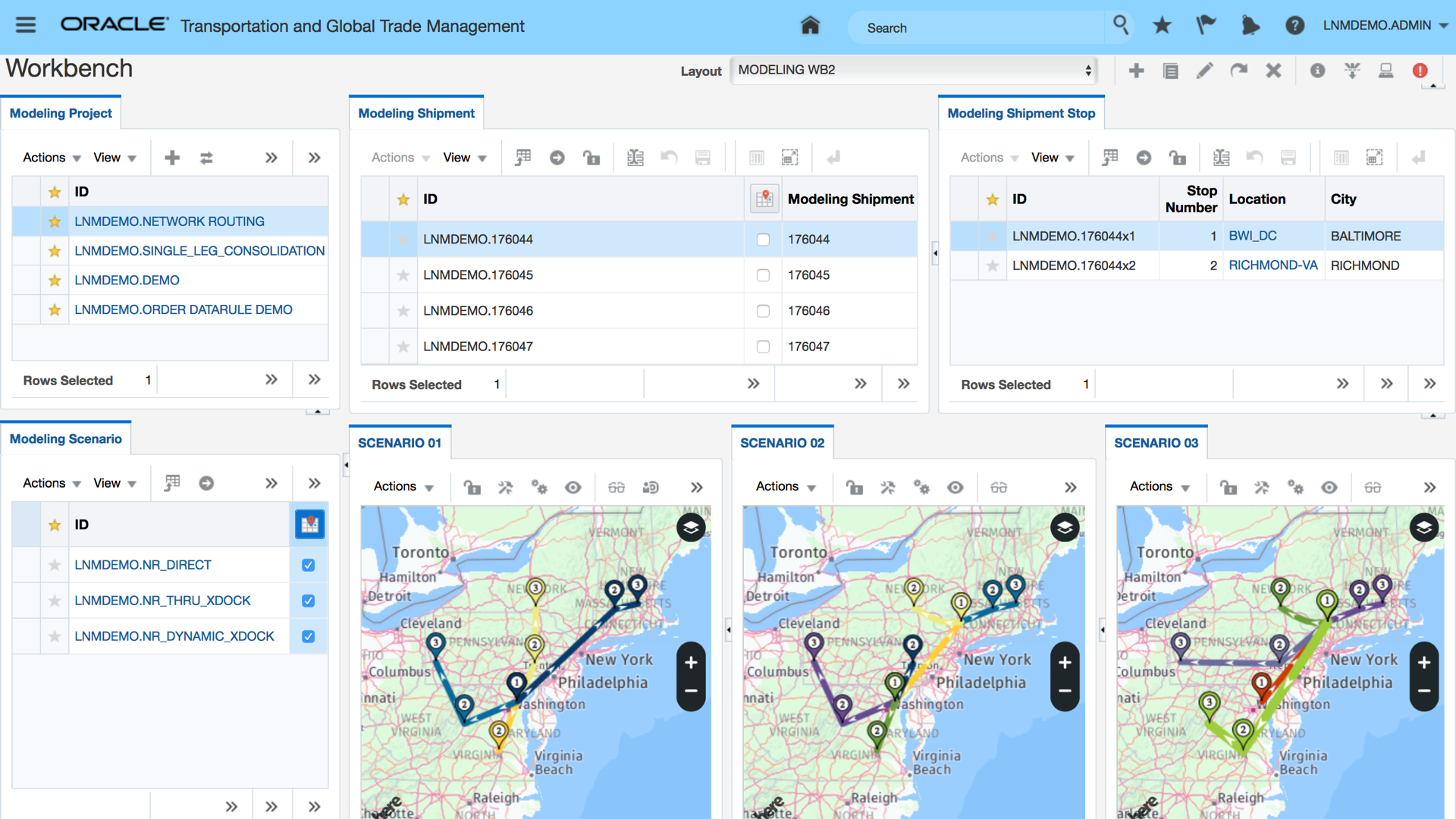

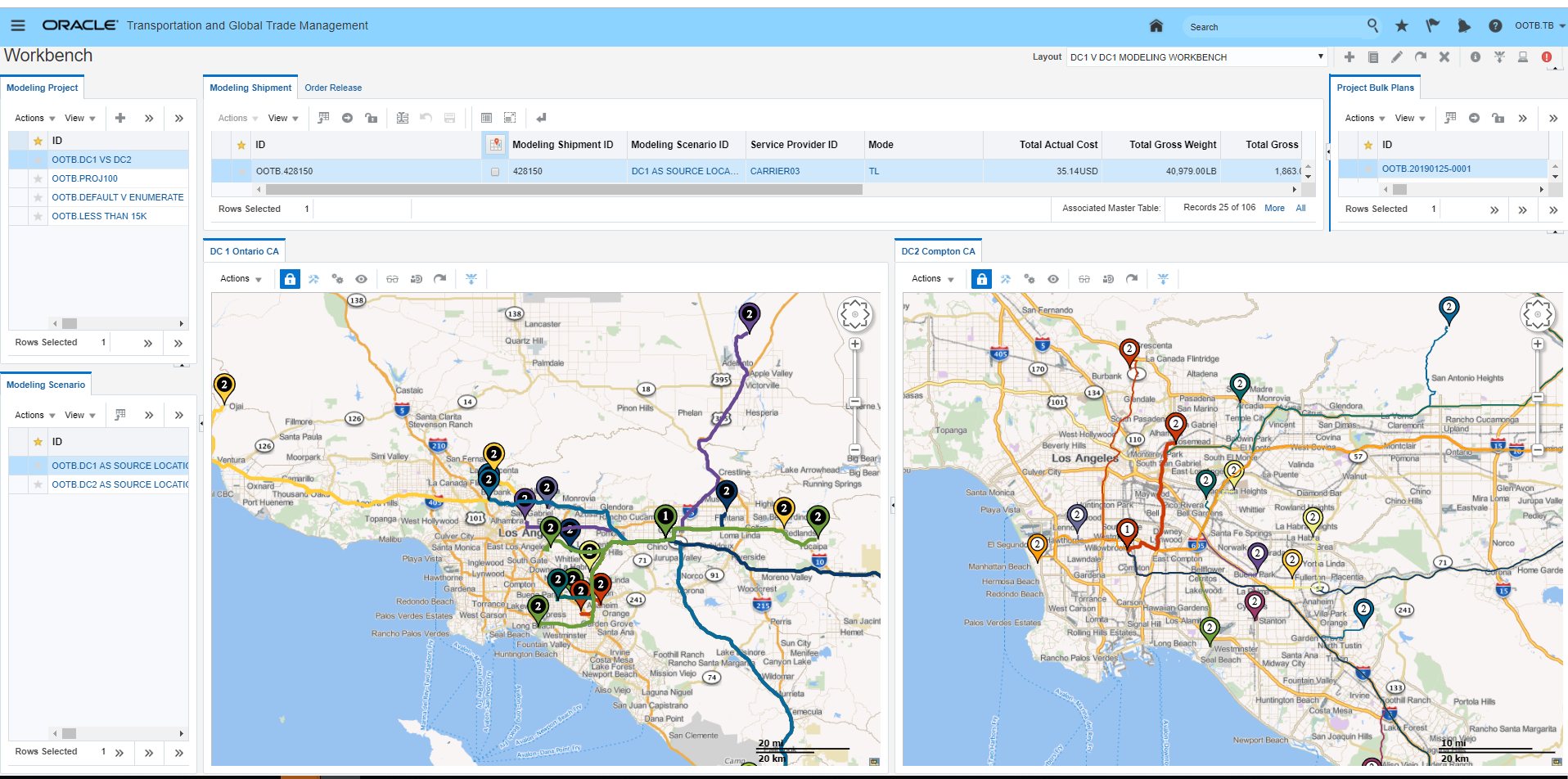

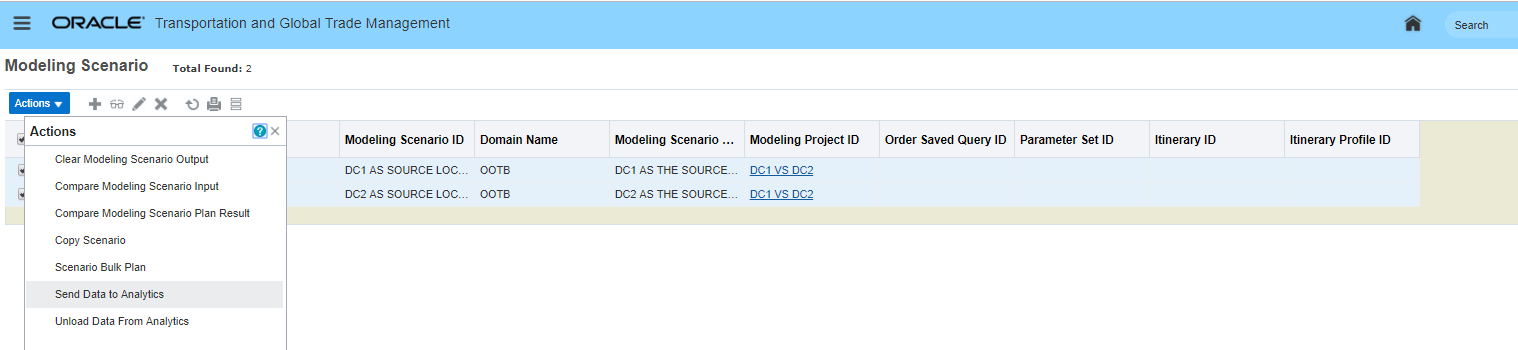

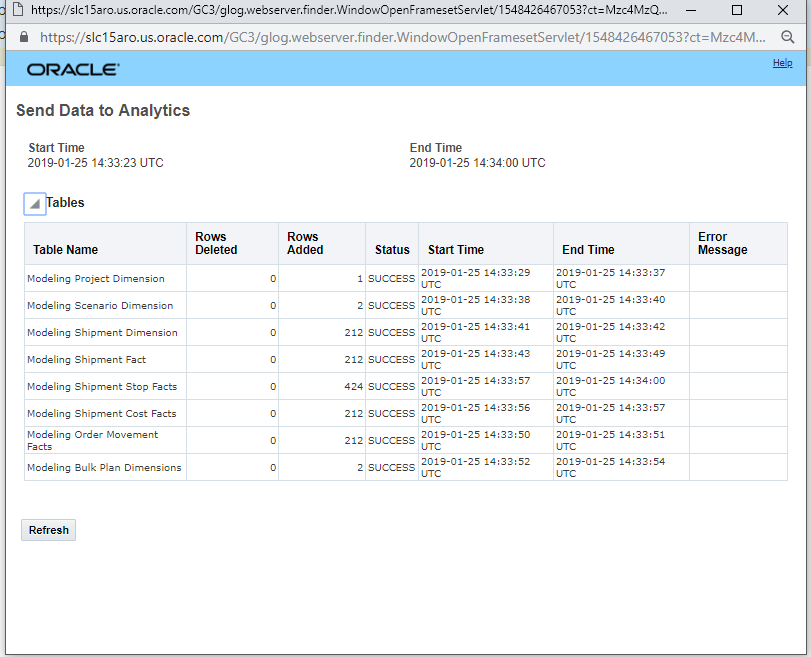

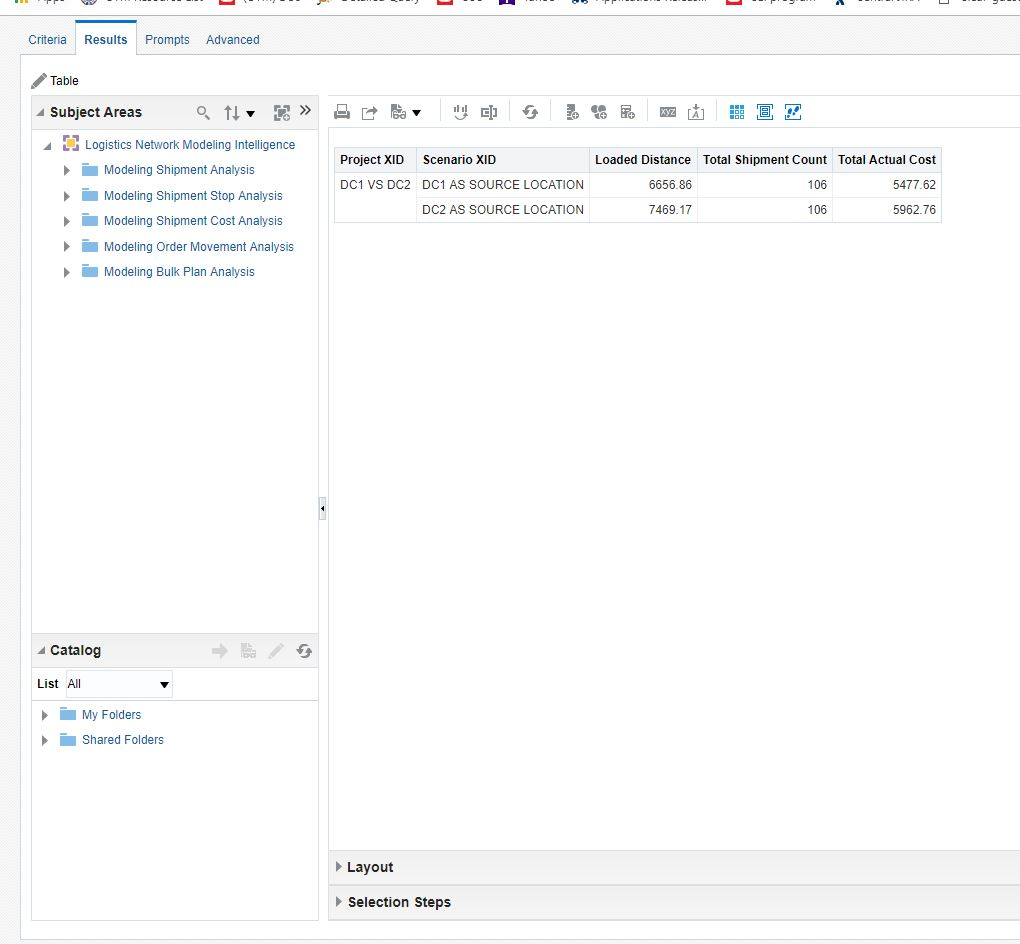

- Logistics Network Modeling

- Global Trade Management (Base)

- Flex Fields for Grouping and Aggregating Data

- Copy Flex Fields Using Data Configuration

- Report to Show License Assignment and Balances

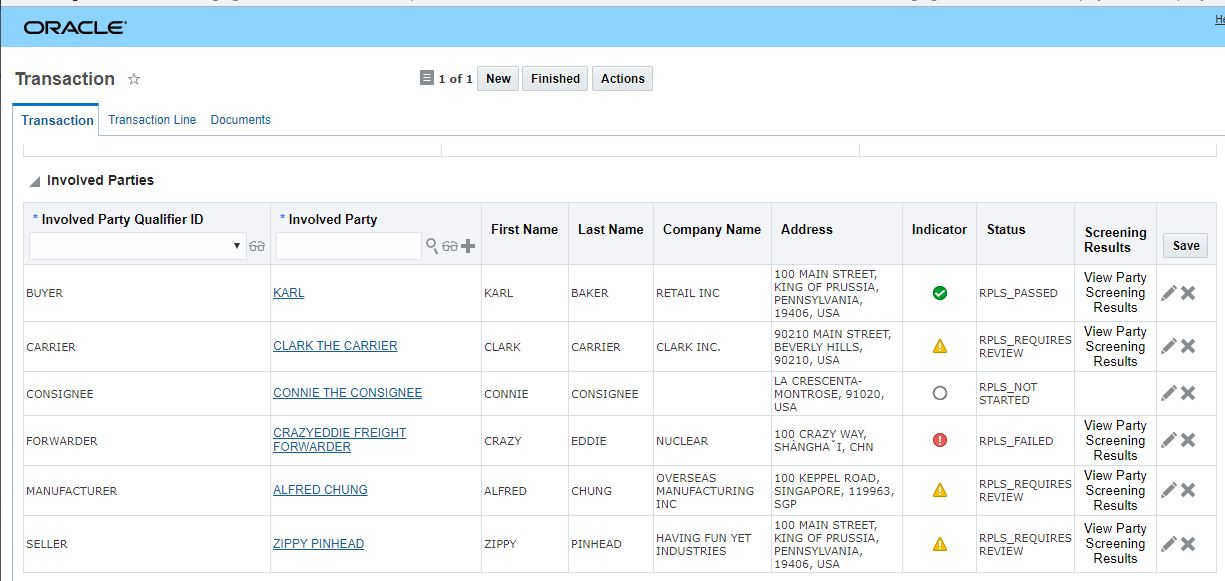

- Display Stoplight for Restricted Party Screening on Transaction and Declaration

- Shipment Group View Related Trade Transaction SmartLink

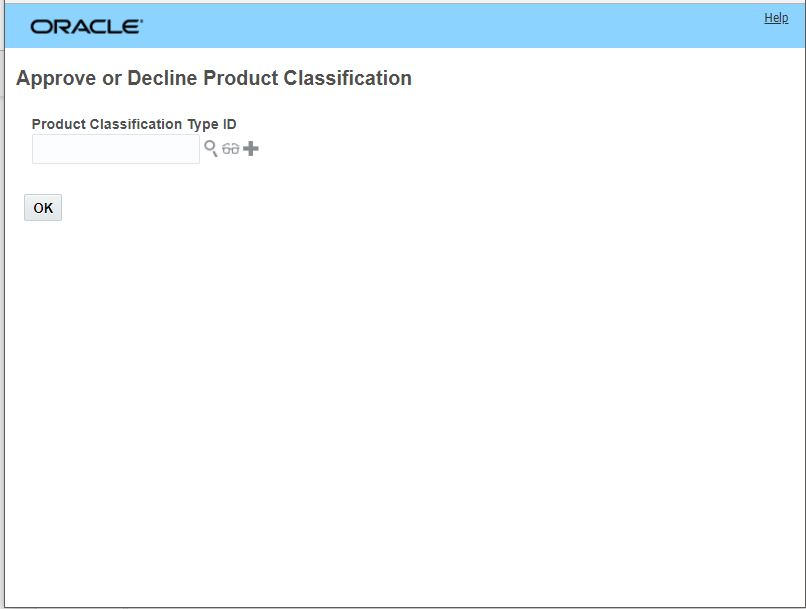

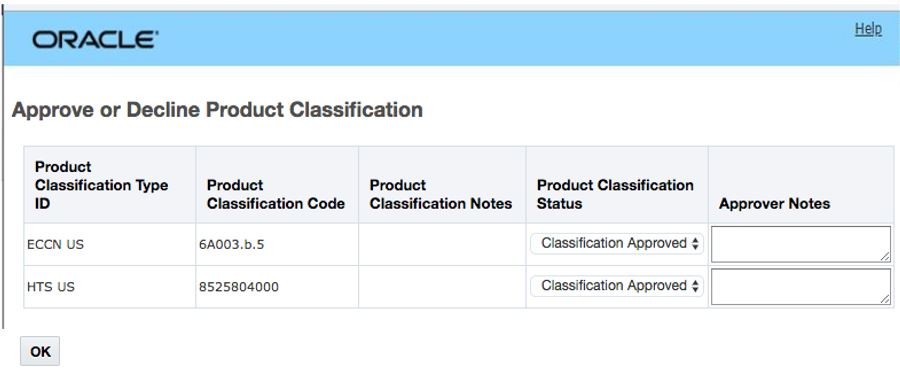

- Approve or Decline Classification at the Classification Type/Code Level on an Item

- Customs Description for Classification Code on Item

- Workbench - Work Queue Support

- GTM How To/Configuration Topic - Supplier Solicitation

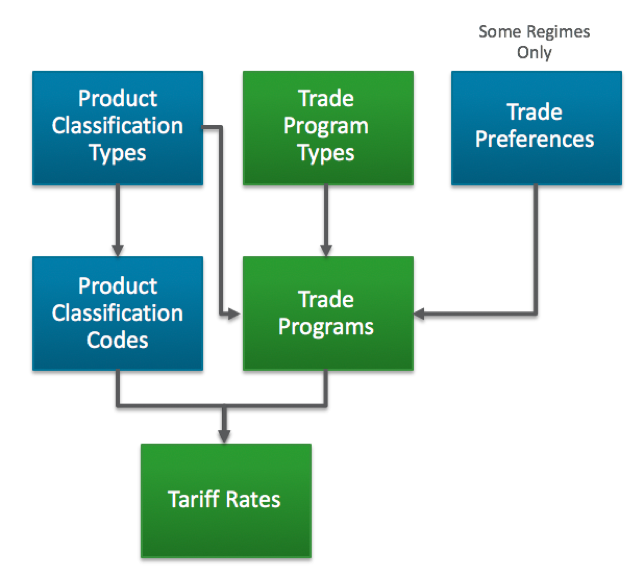

- GTM How To/Configuration Topic - Product Classification Process

- Review Match Factor Action to Use Inverse Index

- Rename Tariff Preference Types to Trade Preferences

- Track Supplier Information

- Accessibility Improvement for Party Screening Results

- SmartLinks Between Product Classification Type and Trade Programs

- SmartLinks Between Product Classification Code and Tariff Rates

- GTM How To/Configuration Topic - License Screening Enhancements

- AES Enhancements

- Order Release to Trade Transaction

- Campaign Management

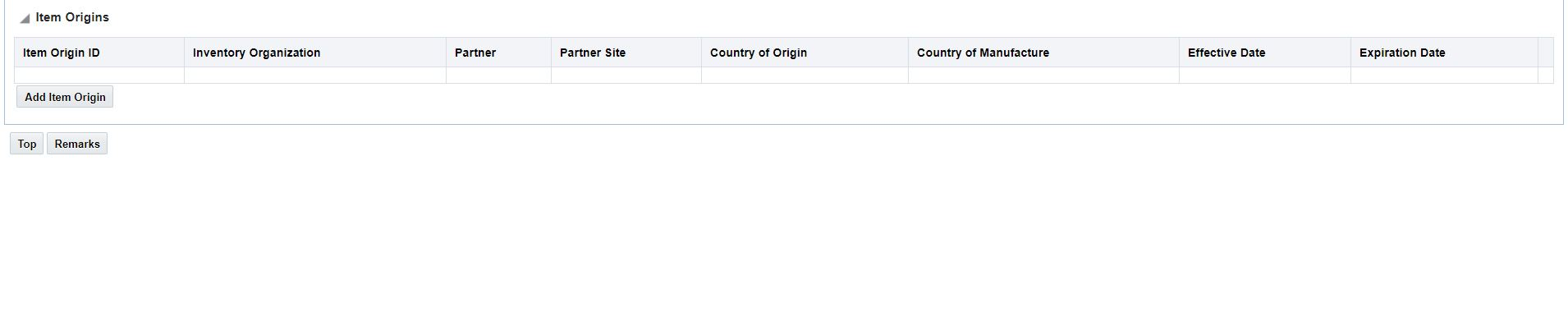

- Determine Trade Program Eligibility and Qualification Based on Item Origin

- Specify Item Type

- Rename Trade Item to Item

- Origin Management

- Party Site

- Tariff Rate Management

- Trading Partner Item

- Trade Compliance

- Trade Agreements

- Global Trade Intelligence (GTI)

Revision History

This document will continue to evolve as existing sections change and new information is added. All updates appear in the following table:

| Date | Feature | Notes |
|---|---|---|
| 17 SEP 2019 | Display Stoplight for Restricted Party Screening on Transaction and Declaration | Updated document. Revised feature information. |
| 17 SEP 2019 | User Interface Refresh | Updated document. Revised feature information. |
| 19 APR 2019 | Agent Logging and Statistics | Updated document. Delivered feature in 19B. |
| 28 MAR 2019 | Invoice Adjustment Cost Behavior Enhancement | Updated document. Delivered feature in 19B. |
| 08 MAR 2019 | | Created initial document. |

Overview

This guide outlines the information you need to know about new or improved functionality in Oracle Transportation & Global Trade Management Cloud Update 19B. Each section includes a brief description of the feature, the steps you need to take to enable or begin using the feature, any tips or considerations that you should keep in mind, and the resources available to help you.

Give Us Feedback

We welcome your comments and suggestions to improve the content. Please send us your feedback at otm-doc_us@oracle.com. Please indicate you are inquiring or providing feedback regarding the Oracle Transportation & Global Trade Management What’s New in Update 19B.

Update Tasks

This section gives you information to help you plan, deploy, and validate your update. We make frequent additions to this document, so don’t forget to check back and get the latest information before your update starts.

Use the following resources to prepare for and validate your Oracle Transportation & Global Trade Management Cloud update.

On My Oracle Support, read:

- Doc ID 2508854.1: Oracle Cloud Applications - Transportation and Global Trade Management Cloud: Quarterly Updates - Preparation and Testing Recommendations

- Doc ID 2095528.1: Oracle Cloud Applications - Transportation and Global Trade Management Cloud: Quarterly Update Planning

- Doc ID 2096782.1: Oracle Cloud Applications - Transportation and Global Trade Management Cloud: Quarterly Update Planning FAQs

- Doc ID 2098110.1: Oracle Cloud Applications - Transportation and Global Trade Management Cloud: Update Policy

Feature Summary

Column Definitions:

Report = New or modified, Oracle-delivered, ready to run reports.

UI or Process-Based: Small Scale = These UI or process-based features typically consist of minor field, validation, or program changes. Therefore, the potential impact to users is minimal.

UI or Process-Based: Larger Scale* = These UI or process-based features have more complex designs. Therefore, the potential impact to users is higher.

Customer Action Required = You MUST take action before these features can be used by END USERS. These features are delivered disabled and you choose if and when to enable them. For example, a) new or expanded BI subject areas need to first be incorporated into reports, b) Integration is required to utilize new web services, or c) features must be assigned to user roles before they can be accessed.

Transportation and Global Trade Platform

This feature provides you with the next chapter in Oracle's adoption of REST APIs. This REST Services feature provides an additional set of REST resources and supported operations, as well as completely revamped and enhanced REST API documentation on docs.oracle.com.

RESOURCES AND THE OPERATIONS SUPPORTED

| Resource | Operations |
|---|---|
| Appointment | GET |
| Bill | GET |
| Claim | POST, GET, PATCH, DELETE |
| Consol | POST, GET, PATCH, DELETE |
| Contact | POST, GET, PATCH, DELETE |
| Contact (Trade Parties) | POST, GET, PATCH, DELETE |
| Corporation | POST, GET, PATCH, DELETE |
| Driver | POST, GET, PATCH, DELETE |
| PowerUnit | POST, GET, PATCH, DELETE |
| Equipment | POST, GET, PATCH, DELETE |
| EquipmentGroup | POST, GET, PATCH, DELETE |
| EquipmentType | POST, GET, PATCH, DELETE |
| GtmShipment | POST, GET, PATCH, DELETE |
| GtmTransaction | POST, GET, PATCH, DELETE |
| GtmLicense | POST, GET, PATCH, DELETE |
| Invoice | GET |
| PackagedItem | POST, GET, PATCH, DELETE |
| Item | POST, GET, PATCH, DELETE |
| Itinerary | POST, GET, PATCH, DELETE |
| Location | POST, GET, PATCH, DELETE |
| Order | GET |
| OrLine | GET |
| OrderBase | GET |
| OrderMovement | GET |
| Quote | POST, GET, PATCH, DELETE |
| ServiceProvider | GET, PATCH |
| Shipment | GET, POST |
| SellSideShipment | GET, POST |
| Voucher | GET |
| Voyage | GET |
| WorkInvoice | GET |
| GtmCampaign | POST, GET, PATCH, DELETE |
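If you build an integration client, you may find it useful to capture the resource and operation list as a lookup so that an unsupported call can be caught before a request is sent. The following is a minimal sketch only. The dictionary `SUPPORTED_OPERATIONS` and the helper `is_supported` are hypothetical names that are not part of the REST API, and only a few of the resources listed above are included.

```python
# Illustrative subset of the resource/operation list in this section.
# The structure and the helper below are hypothetical, not part of the product.
SUPPORTED_OPERATIONS = {
    "Appointment": {"GET"},
    "Claim": {"POST", "GET", "PATCH", "DELETE"},
    "Location": {"POST", "GET", "PATCH", "DELETE"},
    "Order": {"GET"},
    "ServiceProvider": {"GET", "PATCH"},
    "Shipment": {"GET", "POST"},
}


def is_supported(resource: str, method: str) -> bool:
    """Return True if the HTTP method is listed for the resource."""
    return method.upper() in SUPPORTED_OPERATIONS.get(resource, set())
```

A client could call `is_supported("Order", "DELETE")` and skip the request when the result is `False`.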

NEW DOCUMENTATION

The new REST API documentation covers each available resource and operation in a standard Swagger format. It provides request and response syntax, examples, and detailed field-level descriptions for all of the REST API resources.

REST API Documentation

Steps to Enable

Review the REST service definition in the REST API guides, available from the Oracle Help Center > your apps service area of interest > REST API. If you're new to Oracle's REST services you may want to begin with the Quick Start section.

Tips And Considerations

PRIMARY KEY ATTRIBUTES

Almost all resources in OTM/GTM use a Unique Global Identifier, or 'GID', as the primary key for records in the database. The GID value is a concatenation of an External Identifier, or XID, and a Domain Name. Sub-resources can have their own GID field as well as their parent's GID field. Prior to this update, the data returned for a specific resource request contained all GID, XID, and Domain Name attributes, so there was a level of redundancy between those values. The same was true of most returned sub-resources, because the parent GID was repeated in each sub-resource even though the parent GID is implicit within the enclosing context.
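To illustrate how a GID relates to its Domain Name and XID, here is a minimal sketch. It assumes the common form in which the Domain Name comes first and is joined to the XID by a period (for example, GUEST.LOC-001). That separator and ordering are assumptions not stated in this document, and the helper names `make_gid` and `split_gid` are hypothetical.

```python
from typing import NamedTuple


class Gid(NamedTuple):
    domain: str
    xid: str


def make_gid(domain: str, xid: str) -> str:
    """Join a Domain Name and an XID (assumed DOMAIN.XID form)."""
    return f"{domain}.{xid}"


def split_gid(gid: str) -> Gid:
    """Split on the first period only, so an XID may itself contain periods."""
    domain, sep, xid = gid.partition(".")
    if not sep:
        # No separator found: treat the whole value as an XID without a domain.
        return Gid("", gid)
    return Gid(domain, xid)
```

Because the parts can be derived from the GID in this way, returning the GID, XID, and Domain Name together adds no information, which is the redundancy described above.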

NOTE: Starting with this update, the preferred approach is to hide primary key values for requested resources and to hide parent primary keys in sub-resources. However, due to backward compatibility requirements, the default REST API configuration still returns all attributes. To enable the preferred approach, set the following configuration properties:

- glog.fusion.cil.restapi.config.hidePks=true

- glog.fusion.cil.restapi.config.hideParentPks=true

It is highly recommended that you set these properties as soon as backward compatibility is no longer an issue. These property settings are likely to become the default in a future update.

DOCUMENTATION

The old REST API Getting Started Guide has been replaced by the new interactive REST API Guide.

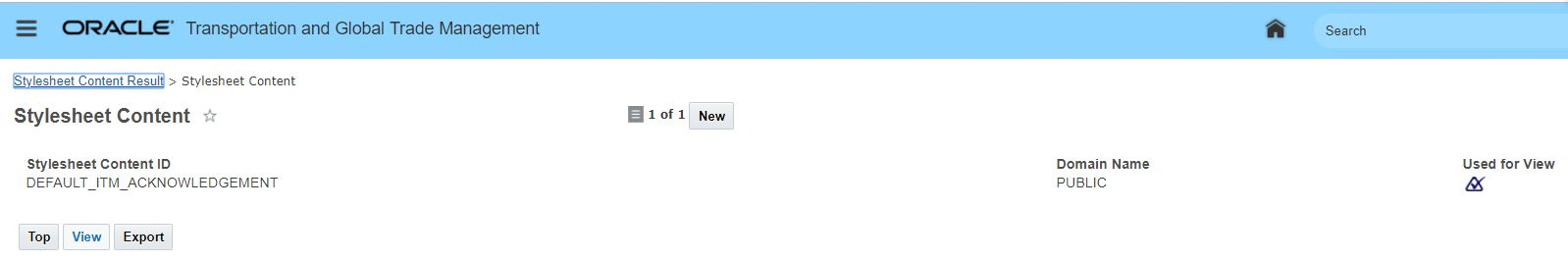

Default Notify Stylesheets Available as Public Content

This feature provides you with the default notify stylesheets loaded as PUBLIC content. This allows you to more easily download, edit and upload the notification stylesheets.

Default Notify Stylesheet Content

Steps to Enable

You don't need to do anything to enable this feature.

Tips And Considerations

The new content can be found at Business Process Automation > Power Data > Event Management > Stylesheet Profiles

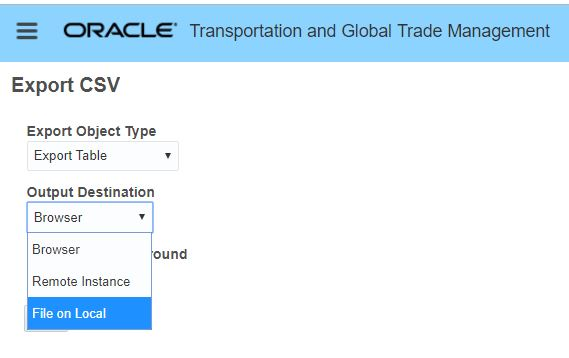

Export File Usability Improvements

This feature provides a number of usability improvements for users who export data as a CSV export, as a DBML export, or by using the Perform Integration Command to obtain the outbound transmission for a specific object. You can now select File on Local to save the output locally. In addition, a new browser text panel with a Copy Text option provides a cleaner view of the export values rendered in your browser.

File on Local Export Option

Browser Based View and Copy Text Option

Steps to Enable

You don't need to do anything to enable this feature.

Tips And Considerations

If the text displayed in the browser is very large, it is recommended that you use "File on Local" as the Output Destination rather than "Copy Text". Use "Browser" as the Output Destination primarily when the goal is to get a glance at the data.

Agent Logging and Statistics

This feature provides you with enhanced agent and agent action logging and statistics. With each agent/agent action execution, the agent initiation, completion, error messages, and customer-specified log instructions are now captured.

In addition, cumulative statistics for each agent/agent action are also captured including the average and maximum time for agent/agent action completion. With this enhancement agent logging will generate a log entry even if the agent fails and rolls back.

Four new tables collect agent and agent action information:

- AGENT_LOG - a log of each agent execution. Each record represents an agent initiation, completion, error or customer-specified log instruction.

- AGENT_ACTION_LOG - a log of each agent action execution. Each record represents an action initiation, completion or error.

- AGENT_STATS - cumulative statistics for each agent. This includes average and maximum time for agent completion.

- AGENT_ACTION_STATS - cumulative statistics for each agent action. This includes average and maximum values for queue time, execution time and completion time.

AGENT_LOG Content

The following fields are tracked in the agent log:

- LOG_SEQUENCE - a unique sequence ID for the log record. Log search results are sorted by this sequence in ascending order. This allows agent log statements to be viewed chronologically.

- AGENT_RUN_SEQUENCE - a unique ID for the execution instance of the agent. Each time an agent is run, it generates a run sequence. This allows log statements for a particular run to be logically grouped together.

- PARENT_AGENT_RUN_SEQUENCE - if an agent execution was triggered by activity from another agent (e.g. the RAISE EVENT agent action or a mod lifetime event after modifying data), this holds the execution ID of the parent agent.

- AGENT_GID - the agent ID

- STATE - the type of log message, usually reflecting agent state:

    - STARTED - the first agent action has been published.

    - NOTE - a user LOG action has specified an informational message

    - WARNING - a user LOG action has specified a warning message

    - ERROR - either an exception occurred during an agent action, a RAISE ERROR action was run or a user LOG action has specified an error message

    - COMPLETED - the last agent action has completed.

- TIME - the UTC time for the log event

- LOG_PROCESS_ID - a link to the System Log process ID for the agent's execution instance. Note that process IDs are not guaranteed to be unique outside of a 24 hour period. Searches should include agent start time and process ID.

- APP_MACHINE_GID - the server the agent ran on. This is to allow for System log retrieval.

- LIFETIME_EVENT - the agent event that triggered the agent execution

- AGENT_DATA_QUERY_TYPE_GID - the data query type of the business object triggering the agent execution

- AGENT_BUSINESS_OBJECT - the ID of the business object triggering the agent execution

- RUN_TIME - for COMPLETED records, the time spent on agent execution. Note that this could be derived by subtracting the STARTED TIME from the COMPLETED TIME. This column is de-normalized to simplify searching for long-running agents in OTM finders.

- NOTES - for records created by the LOG agent action, any notes specified by the user

- ERROR_AGENT_ACTION_GID - for ERROR records caused by an agent action, the action that failed

- ERROR_CAUSE - for records created by the RAISE ERROR agent action, the error message specified in the action; for agent action exceptions, the first line of the exception

- ERROR_OBJECT - the business object that caused the error. This may differ from AGENT_BUSINESS_OBJECT if the error occurred in a DTA or FOR loop.
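The relationship between AGENT_RUN_SEQUENCE, STATE, TIME, and RUN_TIME can be sketched as follows. This is an illustration only: the rows in `sample_rows` are made-up data, the function `run_times` is a hypothetical helper, and the sketch assumes the log rows have already been read into dictionaries keyed by the column names described above.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical AGENT_LOG rows; values are invented for illustration.
sample_rows = [
    {"LOG_SEQUENCE": 1, "AGENT_RUN_SEQUENCE": 100, "STATE": "STARTED",
     "TIME": datetime(2019, 4, 19, 10, 0, 0)},
    {"LOG_SEQUENCE": 2, "AGENT_RUN_SEQUENCE": 101, "STATE": "STARTED",
     "TIME": datetime(2019, 4, 19, 10, 0, 1)},
    {"LOG_SEQUENCE": 3, "AGENT_RUN_SEQUENCE": 100, "STATE": "NOTE",
     "TIME": datetime(2019, 4, 19, 10, 0, 2)},
    {"LOG_SEQUENCE": 4, "AGENT_RUN_SEQUENCE": 100, "STATE": "COMPLETED",
     "TIME": datetime(2019, 4, 19, 10, 0, 5)},
    {"LOG_SEQUENCE": 5, "AGENT_RUN_SEQUENCE": 101, "STATE": "ERROR",
     "TIME": datetime(2019, 4, 19, 10, 0, 6)},
]


def run_times(rows):
    """Map each AGENT_RUN_SEQUENCE to the seconds between STARTED and COMPLETED."""
    by_run = defaultdict(dict)
    # Process rows chronologically, as the log finder does, by LOG_SEQUENCE.
    for row in sorted(rows, key=lambda r: r["LOG_SEQUENCE"]):
        if row["STATE"] in ("STARTED", "COMPLETED"):
            by_run[row["AGENT_RUN_SEQUENCE"]][row["STATE"]] = row["TIME"]
    # Runs without a COMPLETED record (for example, failed runs) are omitted.
    return {
        run: (times["COMPLETED"] - times["STARTED"]).total_seconds()
        for run, times in by_run.items()
        if "STARTED" in times and "COMPLETED" in times
    }
```

Grouping by AGENT_RUN_SEQUENCE keeps interleaved runs apart, which is why the run sequence is recorded on every log record.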

AGENT_ACTION_LOG Content

The following fields are tracked in the agent action log:

- LOG_SEQUENCE - a unique sequence ID for the log record. Log search results are sorted by this sequence in ascending order. This allows agent action log statements to be viewed chronologically.

- ACTION_RUN_SEQUENCE - a unique ID for the execution instance of the action. Each time the action is run, it generates a run sequence.

- AGENT_RUN_SEQUENCE - a link back to the execution instance of the agent that invoked the action

- AGENT_GID - the agent ID

- ACTION_FLOW - the agent block holding the action (Norm or Error)

- ACTION_SEQUENCE - the sequence # of the action in the action flow of the agent. Note that (AGENT_GID, ACTION_FLOW, ACTION_SEQUENCE) uniquely identify the action within the AGENT_ACTION_DETAILS table. If, however, the agent is modified after the log record is written, this identification may no longer be accurate.

- STATE - the type of log message, reflecting action state:

    - STARTED - the action has begun execution

    - ERROR - the action threw an exception

    - COMPLETED - the action (and any triggered work) has completed

- TIME - the UTC time for the log event

- LOG_PROCESS_ID - a link to the System Log process ID for the action's execution instance

- APP_MACHINE_GID - the server the action ran on

- AGENT_DATA_QUERY_TYPE_GID - the data query type of the business object triggering the agent execution

- AGENT_BUSINESS_OBJECT - the ID of the business object triggering the agent execution

- AGENT_ACTION_GID - the agent action ID

- ACTION_DATA_QUERY_TYPE_GID - the data query type of the business object processed by the action. This may differ from AGENT_DATA_QUERY_TYPE_GID if in a DTA or FOR loop.

- ACTION_BUSINESS_OBJECT - the ID of the business object processed by the action. This may differ from AGENT_BUSINESS_OBJECT if in a DTA or FOR loop.

- RUN_TIME - for COMPLETED records, the time between the start of action execution and the completion of all related activity

- ERROR_MSG - for records created by the RAISE ERROR agent action, the error message specified in the action; for action exceptions, the first line of the exception

AGENT_STATS Content

- AGENT_GID - the agent ID

- NUM_RUNS - # of times the agent has been run since the SINCE date

- TOTAL_TIME - cumulative execution time, measured from the first action publish to the last action completion

- AVG_TIME - average execution time. This de-normalized field is provided for finder queries and sorting.

- MAX_TIME - maximum execution time

- SINCE - date the statistics were last reset
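The way these cumulative fields relate to one another can be sketched as a simple accumulator. This is an illustration under stated assumptions, not a description of how OTM computes the values internally: it assumes AVG_TIME equals TOTAL_TIME divided by NUM_RUNS, and the class `AgentStats` and its `record` method are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class AgentStats:
    """Accumulates values analogous to NUM_RUNS, TOTAL_TIME and MAX_TIME."""
    num_runs: int = 0
    total_time: float = 0.0
    max_time: float = 0.0

    def record(self, run_time: float) -> None:
        """Fold one completed run into the cumulative statistics."""
        self.num_runs += 1
        self.total_time += run_time
        self.max_time = max(self.max_time, run_time)

    @property
    def avg_time(self) -> float:
        """Average derived from the totals (assumed TOTAL_TIME / NUM_RUNS)."""
        return self.total_time / self.num_runs if self.num_runs else 0.0
```

Resetting the statistics corresponds to starting again from a fresh `AgentStats()` instance and a new SINCE date.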

AGENT_ACTION_STATS Content

- AGENT_GID - the action's agent ID

- ACTION_FLOW - the agent block holding the action (Norm or Error)

- ACTION_SEQUENCE - the sequence # of the action in the action flow of the agent. Note that (AGENT_GID, ACTION_FLOW, ACTION_SEQUENCE) is a foreign key into the AGENT_ACTION_DETAILS table.

- NUM_WAITS - # of times the action has been published since the SINCE date

- TOTAL_WAIT_TIME - cumulative waiting time, measured from the publish to the beginning of execution

- AVG_WAIT_TIME - average waiting time

- MAX_WAIT_TIME - maximum waiting time

- NUM_EXECS - # of times the action has been executed since the SINCE date

- TOTAL_EXEC_TIME - cumulative execution time, measured from the beginning of execution to action termination (i.e. does not include completion due to published topics)

- AVG_EXEC_TIME - average execution time

- MAX_EXEC_TIME - maximum execution time

- NUM_RUNS - # of times the action has run to completion since the SINCE date

- TOTAL_RUN_TIME - cumulative run time, measured from the publish to action completion

- AVG_RUN_TIME - average run time

- MAX_RUN_TIME - maximum run time

- SINCE - date the statistics were last reset
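The three action timings differ only in where measurement starts and stops. The following sketch shows the distinction for a single action execution. The function `action_durations` and its four timestamp parameters are hypothetical names chosen for illustration; the measurement points follow the field descriptions above.

```python
from datetime import datetime


def action_durations(published: datetime, exec_started: datetime,
                     exec_ended: datetime, completed: datetime) -> dict:
    """Return wait, exec and run durations in seconds for one action."""
    return {
        # Wait time: from the publish to the beginning of execution.
        "wait": (exec_started - published).total_seconds(),
        # Exec time: from the beginning of execution to action termination.
        "exec": (exec_ended - exec_started).total_seconds(),
        # Run time: from the publish to action completion, including
        # any work triggered by the action.
        "run": (completed - published).total_seconds(),
    }
```

Run time can exceed the sum of wait time and exec time, because completion also waits for any triggered work to finish.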

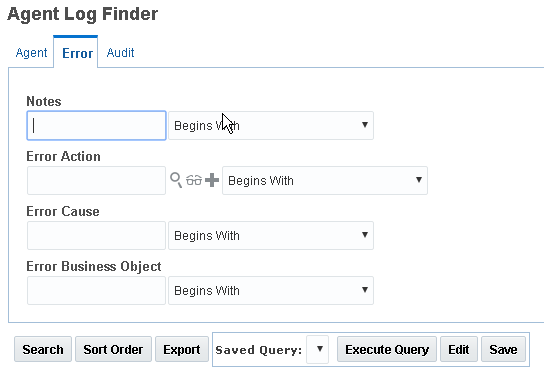

VIEWING THE AGENT AND AGENT ACTION LOGS FROM OTM

Agent and Agent Action log records can be accessed from OTM via any Process Management menu.

Agent Logs

The Agent link brings up a Finder for AGENT_LOG records.

View Agent Log Finder

View Agent Log Finder Error Tab

Note that the finder assumes that the Agent and Application Server exist in the database. These criteria cannot be used to search for old data after an agent has been deleted.

On the finder result page, two smartlinks are available for Agent Log records:

- Action Log - displays all Agent Action Log records related to the run instance of the current agent.

- System Log - displays all System Log records from the time of the Agent Log record, using the System Log ID of the record and retrieving lines from the specified Application Server. Note that system logs cycle frequently, so this information is likely to be unavailable for historical analysis.

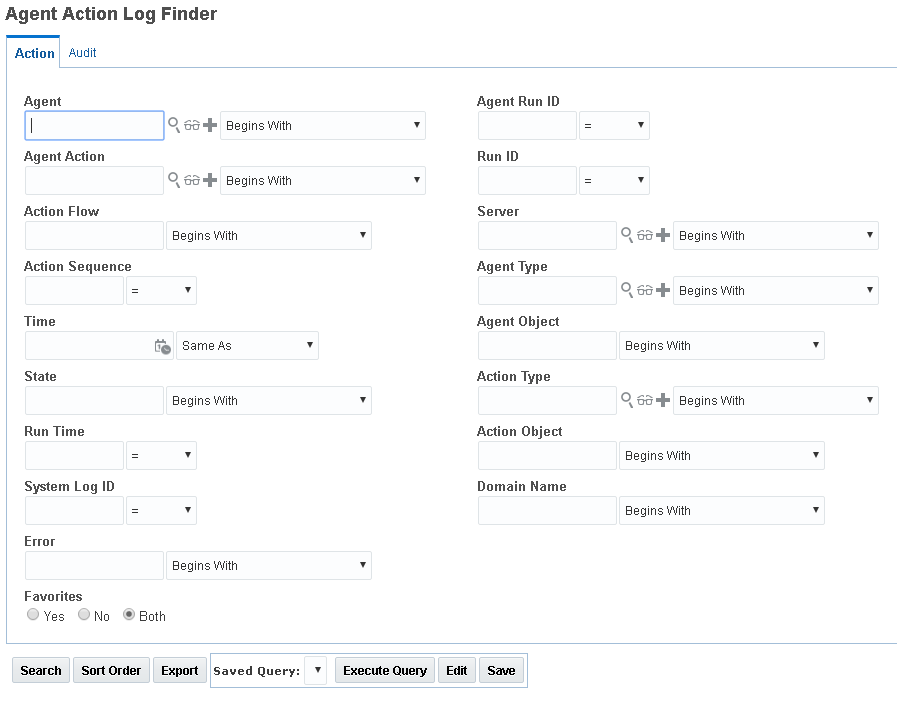

Viewing Agent Action Log

The Agent Actions link brings up a Finder for AGENT_ACTION_LOG records.

Agent Action Finder

Note that the finder assumes that the Agent and Application Server exist in the database. These criteria cannot be used to search for old data after an agent has been deleted.

On the finder results page, one smartlink is available: AgentLog. This brings up all agent log records for the agent run sequence that ran the action.

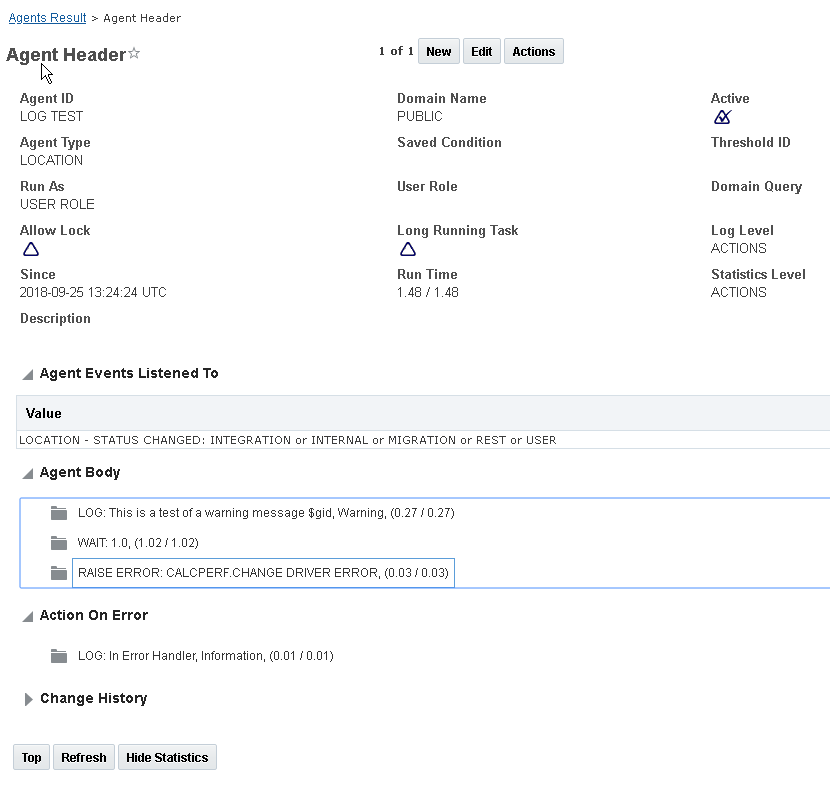

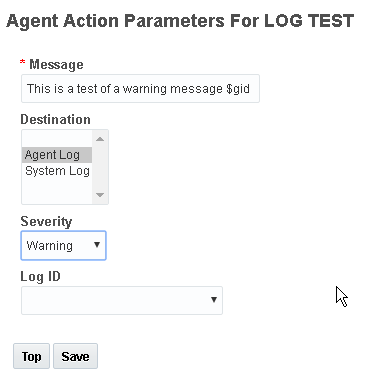

Viewing Agent and Agent Action Statistics

Agent and Agent Action statistics can be viewed directly on the Agent viewer or Agent manager.

Steps to Enable

CONTROLLING AGENT LOGGING AND STATISTICS GATHERING

Agent logging and statistics can be controlled globally or per-agent. The following properties control default behavior for all agents:

- glog.agent.defaultLogLevel = [NONE | AGENT | ACTIONS] - the default logging for agents that don't explicitly set their logging in AGENT.LOG_LEVEL

    - NONE = no agent logging is performed. There should be no performance overhead for agent logging when it is turned off.

    - AGENT = agent activity is logged. This includes only AGENT_LOG records.

    - ACTIONS = agent and agent action activity is logged. This includes both AGENT_LOG and AGENT_ACTION_LOG records.

- glog.agent.defaultStatsLevel = [NONE | AGENT | ACTIONS] - the default statistics collection for agents that don't explicitly set their statistics collection in AGENT.STATS_LEVEL

    - NONE = no agent statistics are collected. There should be no performance overhead for statistics collection when it is turned off.

    - AGENT = agent statistics are collected. This includes inserts/updates to the AGENT_STATS records.

    - ACTIONS = agent and agent action statistics are collected. This includes inserts/updates to both AGENT_STATS and AGENT_ACTION_STATS records.

On the agent header, these defaults can be overridden for a specific agent:

Agent Header

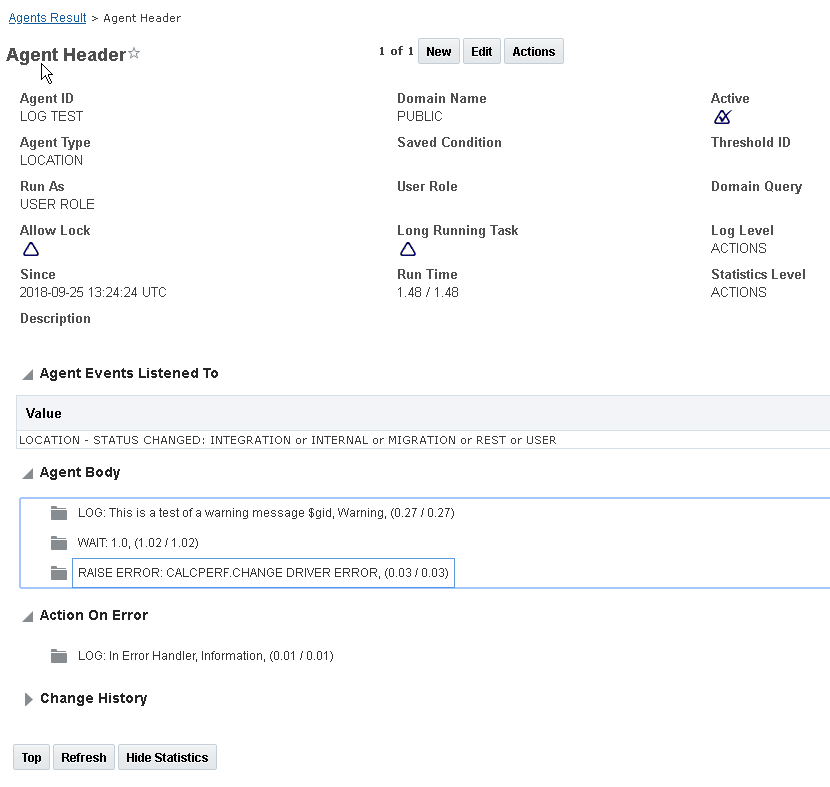
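The interaction between the global default and a per-agent override can be sketched as follows. This is a minimal illustration, not the product's implementation: it assumes that an agent with no explicit level falls back to the property default, and the names `LOG_TABLES`, `effective_level`, and `tables_written` are hypothetical.

```python
from typing import List, Optional

# Which log tables receive records at each logging level, per the list above.
LOG_TABLES = {
    "NONE": [],
    "AGENT": ["AGENT_LOG"],
    "ACTIONS": ["AGENT_LOG", "AGENT_ACTION_LOG"],
}


def effective_level(agent_level: Optional[str], default_level: str) -> str:
    """Use the agent's own level if set, otherwise the global default."""
    level = agent_level or default_level
    if level not in LOG_TABLES:
        raise ValueError(f"Unknown level: {level!r}")
    return level


def tables_written(agent_level: Optional[str], default_level: str) -> List[str]:
    """Return the log tables written for an agent at its effective level."""
    return LOG_TABLES[effective_level(agent_level, default_level)]
```

The same resolution pattern would apply to the statistics level, with AGENT_STATS and AGENT_ACTION_STATS in place of the log tables.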

ADDING CUSTOM LOG RECORDS

Customers can add information to the agent and agent action logs via the LOG agent action. This action has been updated to:

- allow a message to be written to the System Log, the Agent Log or both

- assign a severity to the logged message

- assign a specific Log ID to messages written to the System Log.

By using the ASSIGN VARIABLE action with the LOG action, customers can add the result of ad-hoc queries to agent logs.

Agent Actions Parameters

Note that only the Message field is required. The following defaults are used if a selection is not explicitly made:

- Destination = System Log

- Severity = Information

- Log ID = Workflow

Thus, the action is backward compatible with v18 AGENT_ACTION_DETAILS records. No data migration is needed.
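The effect of these defaults can be sketched with a small data class. This is illustrative only: `LogActionParams` is a hypothetical name, and the field names simply mirror the parameter labels listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogActionParams:
    """LOG action parameters; only the message is required."""
    message: str
    destination: str = "System Log"
    severity: str = "Information"
    log_id: str = "Workflow"
```

An action definition that supplies only a message, as earlier records do, resolves to the System Log destination, Information severity, and Workflow Log ID, which is why no data migration is needed.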

Tips And Considerations

VIEW AGENT LOG FINDER ERROR TAB

- Note that the finder assumes that the Agent and Application Server exist in the database. These criteria cannot be used to search for old data after an agent has been deleted.

VIEWING AGENT ACTION STATISTICS

- If the Agent statistics level is AGENT or ACTIONS, the agent header will show the average and maximum Run Time since the Since date. This run time is measured from the publish of the first agent action to the agent process' completion. Note that it does not include the time to evaluate any agent saved condition. If the Agent statistics level is ACTIONS, each action will show the average and maximum completion time for that action. This time is measured from the time the action begins execution to the completion of the action process.

- A new action on the Agent finder / manager, Reset Statistics, allows agent statistics to be reset. This can be used to test agent performance under a particular scenario (which may have its own control flow path).

- Unlike the Agent Log, Agent and Action Statistics are tied to an existing agent and/or agent action via foreign keys. Any modification of an agent (including a change in log or statistics level) will reset the statistics.

User Interface Refresh

The UI changes covered in this section may require modification to your existing UI automated test fixtures if the automated testing tool being used is dependent on the previous UI’s behavior and backend code.

Specifically, for this update, the improvements made to support Accessibility could require modifications to your existing automated UI test scripts.

For example:

- Buttons of all XSL pages now have a button tag

- The "Main" Buttons of all JXPS pages now have a button tag

- Tabbing behavior has been modified:

    - All the tabs on JSP pages (e.g. edit manager layouts) and XSL pages (e.g. Finder criteria) now have one tab stop

    - Arrow keys are now used to navigate between tabs

    - The Unified Global Header tab stop on the search icon (magnifying glass) has been removed

- Finder Results table navigation:

    - Now supports use of arrow keys

    - Corrected and made consistent tab sequence for toolbar icons and links

Accessibility - Keyboard Navigation

Using only the keyboard, you can navigate OTM and GTM without a mouse. Use the Tab key to move forward through most of the application and Shift + Tab to move backward.

Here are some of the areas where improvements were made:

- Eliminated any keyboard traps to make sure you don't get stuck when navigating the application.

- Made it easier for you to jump between sections/tabs.

- Added support for various keyboard keys to navigate the application.

Here is a summary of the keyboard navigation for a few areas of the application.

NAVIGATOR MENU

The first level menu groups are always expanded and cannot be collapsed.

- Move to the next first level menu group - Tab key

- Move to the previous first level menu group - Shift + Tab key

- Move up to the previous item in a menu group - Up arrow

- Move down to the next item in a menu group - Down arrow

- Expand a menu group - Right arrow

- Collapse a menu group - Left arrow

- Open the page when a menu link has focus - Enter

GLOBAL HEADER

- Use the Tab or Shift + Tab keys to navigate the Global Header functions

SPRINGBOARD MENU

- Move to the left through the menu groups or links at the same level - Left arrow

- Move to the right through the menu groups or links at the same level - Right arrow

- Close a menu group and place the focus back on the higher level menu group - Up arrow

- Open a menu group and place the focus on the first item in the next level menu group - Down arrow

- Open the page when a menu link has focus - Enter

FINDER CRITERIA

- Use the Tab or Shift + Tab keys to navigate the fields on the Finder Criteria page.

FINDER RESULTS

- The body of the results table is a single tab stop.

- Use Tab to access the table.

- Use the left, right, up and down arrow keys to move around within the table.

Steps to Enable

You don't need to do anything to enable this feature.

Accessibility - Skip Navigation Menu

On all pages with the Unified Global Header, the first tab stop on the OTM/GTM page opens a skip navigation list that allows you to skip the global header and jump straight to the main page or other components of the page. Also, press ALT + 1 to access skip navigation at any time. The menu varies based on where you are in the application.

If you are on the home screen/springboard the Skip Navigation menu is the following:

- Skip to Content allows you to jump to the Springboard

- Skip to Search allows you to jump to the search field in the toolbar

- Skip to Footer allows you to jump to the last icon available in the Springboard or to the bottom section of the page

If you are on a page within the application where tabs are found (Search Criteria and Manager Layout) you can navigate the tabs using the Skip Nav menu.

When you are on the Tab option you see all of the available tabs:

The Skip Navigation menu always has a Home option, which allows you to return to the Springboard.

Steps to Enable

You don't need to do anything to enable this feature.

Accessibility - Validate Usage of Color

When considering accessibility, it is important not to convey information to the user using color alone. Any pages that previously relied on color alone now use another means of conveyance as well. Take the following two areas as examples:

- Mass Update in the legacy finder previously showed the result of saving objects as green when successful and red when unsuccessful. Indicators are now included as well to convey success or failure more clearly.

- Links are displayed in underlined text as well as being light blue.

Steps to Enable

You don't need to do anything to enable this feature.

Accessibility - Screen Reader

This feature provides you with a screen readable navigation path through OTM and GTM. Screen readers convert digital text to synthesized speech and are used to help people who are blind or who have low vision to use information technology with the same level of independence and privacy as anyone else. Screen readers depend on a consistent UI format and layout to provide a usable and seamless navigation process.

To improve the OTM and GTM screen reader navigation experience the following improvements were made:

- Table Format

    - Added proper headings and alternative labels to allow the screen reader to successfully read a finder results table to the end user

- Roles

    - Assigned proper roles to various areas of the application so that the user can take advantage of the additional benefits of using a screen reader

        - Buttons have the role of button

        - Menus have the role of menu

- Sections/Headings

    - Verified that all types of fields have the proper sections/headings to ensure the screen reader can navigate properly

- Alternative Text

    - Added additional text for certain areas, including images, to be read by the screen reader

Steps to Enable

You don't need to do anything to enable this feature.

Accessibility - Documentation

A new topic, Accessibility Features Guide, has been added to OTM/GTM online help. This guide outlines how to navigate OTM/GTM without the use of a mouse or with a screen reader.

Topics covered include:

Keyboard Controls across the application including:

- Springboard

- Navigator Menu

- Finder Results Pages

- Inline Edit

- Tree Control

- Automation Agent Actions or Error Handler

Skip Repeated Navigation:

- On all pages with the Unified Global Header, the first tab stop on the OTM/GTM page opens a skip navigation list that allows you to skip the global header and jump straight to the main page.

Steps to Enable

You don't need to do anything to enable this feature.

User Experience - General - New Indicators

Two new indicators, blue and orange, have been added to the list of options within OTM and GTM. The previous indicators have also been updated.

The new indicators are:

Steps to Enable

You don't need to do anything to enable this feature.

Home Experience Improvements - Default Colors and Theme Management Enhancements

NEW DEFAULT COLORS

Default colors for the OTM/GTM header and springboard have been changed to a light sky blue background with dark gray font and header icons, as seen in the image below.

THEME MANAGEMENT ENHANCEMENTS - NEW COLOR SETTINGS

New features have been added to Theme Management to allow you to manage your home experience font and icon colors. New fields added include:

- Main Font Color - The font color used for the top level springboard menu items. This color is also used for third level springboard menu items.

- Springboard Submenu Font Color - The font color used for the second level springboard menu items.

- Springboard Submenu Background Color - The background color used for the second level springboard menu items. The default is white.

- Header Icon Color - The color used for the Unified Global Header icons.

- Header Background Color - The color used as the background color for the Unified Global Header.

THEME MANAGEMENT ENHANCEMENTS - COLOR SCHEMES

Select a Color Scheme to see/choose a pre-selected grouping of colors and images to use as the basis for your theme. There are several color schemes available by default:

- Autumn Red

- Crisp Green

- Dark Blue

- Dark Gray

- Midnight Blue

- Sky Blue (Default): this is the default color scheme automatically used by OTM/GTM.

Steps to Enable

You don't need to do anything to enable this feature.

Manager Layout - Support Removal of Reference Number Grid

The objects listed below support moving or hiding/removing the Reference Number Grid from the Manager Layout.

This allows you to:

- Move this grid to another tab

- Remove the grid from your Manager Layout

Here is a list of objects that support this feature when configuring their Manager Layout:

OTM OBJECTS

- Driver

- Freight Forwarding

- Invoice

- Invoice Line

- Item

- Item Qualification

- Item Remarks

- Location

- Order Base

- Order Base Line

- Order Base Ship Unit

- Order Release

- Order Release Line

- Order Release Ship Unit

- Packaged Item

- Power Unit

- Rapid Order

- Ready to Ship OB Line

- Ready to Ship OB Ship Unit

- Release Instructions

- Ship Unit Line

- Shipment

- Shipment Actuals

- Shipment Ship Unit

- Shipment Ship Unit Line

- Shipment Stop

GTM OBJECTS

- Bond

- Campaign

- Campaign Line

- Compliance Rule

- Contact

- License

- License Line

- Location

- Registration

- Shipment

- Shipment Line

- Structure

- Structure Component

- Trade Agreement

- Transaction

- Transaction Line

Steps to Enable

To remove or move a reference number grid from a supported manager layout, follow the steps used to modify manager layouts.

- Go to the Manager Layout manager found in Configuration and Administration > User Configuration > Manager Layout.

- Select the supported manager.

- Then select the Detail tab.

- In the Manager Layout Detail page you can configure the manager including modifying or deleting Reference Number Grids.

Remove Move Reference Number Grid in Manager Layout

Tips And Considerations

The primary reason to remove this grid is if you have grid flattened one or more reference numbers into fields on your Manager Layout and don't want them to display in this grid as well.

Screen Set Result Improvements

Screen Set Result Improvements include the following:

- Show multiple values for a single remark or reference number qualifier in the Finder Results in a comma separated string

- Reference Numbers and Remarks can display as active links in the finder results

Here's a screenshot displaying both of these changes:

- The first Order Release has multiple values for the Buyer Number reference number qualifier, and they are displayed in a comma-separated string.

- The second Order Release has a link displayed for the Buyer Number. You can click this active link directly from the finder results. This is configured in screen set results using the "Display as Link" setting.
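As a rough illustration of the comma-separated display, the sketch below joins every value recorded for one qualifier into a single string. The function name, qualifier codes and sample values are hypothetical and are not taken from OTM; the sketch only shows the idea of collapsing multiple values into one results cell.

```python
from collections import defaultdict

def format_refnum_column(refnums, qualifier):
    """Join every value recorded for one qualifier into a single display string."""
    values_by_qualifier = defaultdict(list)
    for qual, value in refnums:
        values_by_qualifier[qual].append(value)
    return ", ".join(values_by_qualifier[qualifier])

# Hypothetical order release carrying two Buyer Number values.
order_refnums = [
    ("BUYER_NUMBER", "B-100"),
    ("PO", "PO-7"),
    ("BUYER_NUMBER", "B-200"),
]
print(format_refnum_column(order_refnums, "BUYER_NUMBER"))  # B-100, B-200
```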

Steps to Enable

Displaying Reference Numbers and Remarks as active links is configured in screen set results using the "Display as Link" setting.

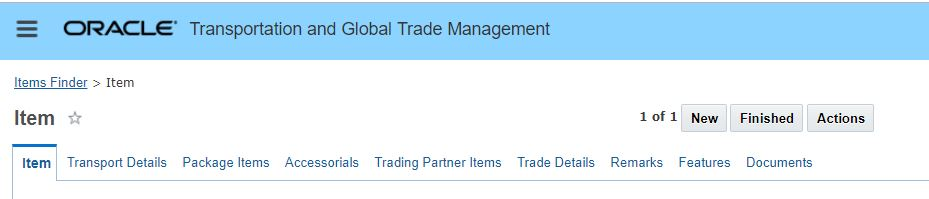

Item Unified UI

This feature provides one user interface for Item across both OTM and GTM. The unified item includes all the information applicable to a user’s transportation or trade needs. The new unified item can be accessed from the same OTM menu and GTM menu as in previous releases. In addition, the Trade Item in GTM has been renamed to Item.

Unified Item - Tabs

Steps to Enable

You don't need to do anything to enable this feature.

Tips And Considerations

This is the new default Item Manager for both OTM and GTM.

Your previously configured Item Managers are still fully supported.

Workbench - Additional GTM Objects Supported in the Workbench

This feature adds GTM objects that are supported as workbench tables. With these added objects, along with the long list of already supported objects, the Workbench should become the first place you go for configuring any work environment that involves relating multiple objects and components together in one view.

Additional GTM objects available for adding as a table include:

- Campaigns

- Campaign Lines

- Campaign Line Documents

Steps to Enable

You don't need to do anything to enable this feature.

Workbench - Additional OTM Objects Supported in the Workbench

When adding a table to the workbench, the following are now available:

- Claims

- Shipment Cost Object

- Work Assignment Bulk Plan

Steps to Enable

You don't need to do anything to enable this feature.

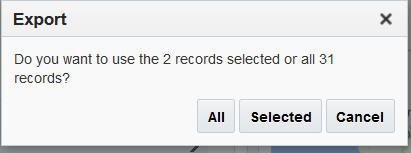

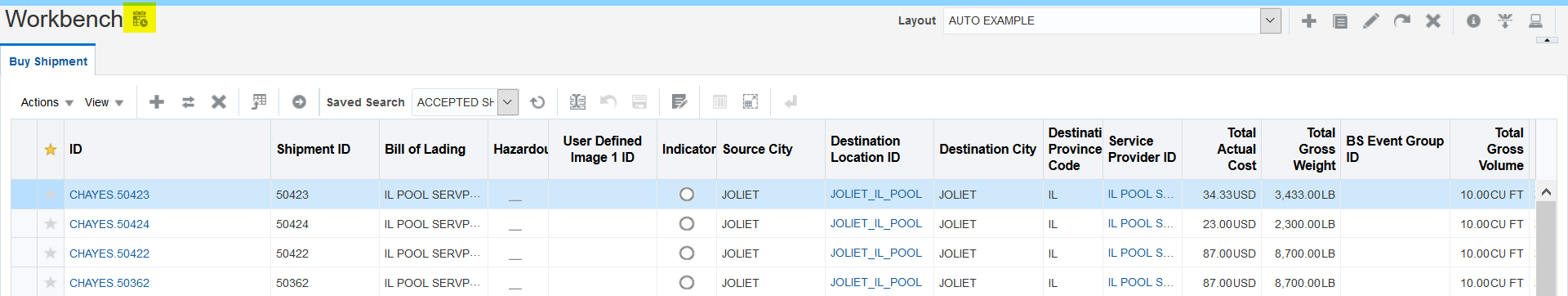

Workbench - Export to Excel Support for Workbench Tables

A new icon/button is available on the workbench table toolbar to allow you to export to Excel directly from a workbench table. This functionality lets you export the selected rows or all of the table rows to Excel.

Export

Steps to Enable

- For the workbench table you want to export records from, select the Export icon to initiate the process. In the next step, you are given the option to export all the records or only your selected records.

- Select the option to export either all the records in the table or the selected records.

- Wait for the file to export and download the file.

- Open the exported xls file.

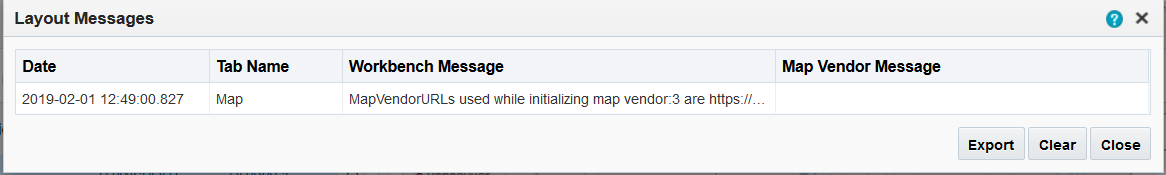

Workbench - Layout Messages

The Messages icon appears on a Workbench Layout only when an error or informational message is available. Upon hovering over the icon, a tool tip displays the number of new messages (# Messages). If you click the icon, you see a window displaying the messages in detail. The information provided in a message includes:

- Date: Displays the date and time at which the message occurred. This will be the server date and time.

- Tab Name: Displays the name of the Workbench tab that generated the message.

- Workbench Message: Displays the message generated by the Workbench.

- Map Vendor Message: Displays the map vendor specific message if there is one.

After reviewing these messages you can export or clear the messages. The Messages icon continues to display on the workbench layout toolbar until the messages have been cleared.

Steps to Enable

You don't need to do anything to enable this feature.

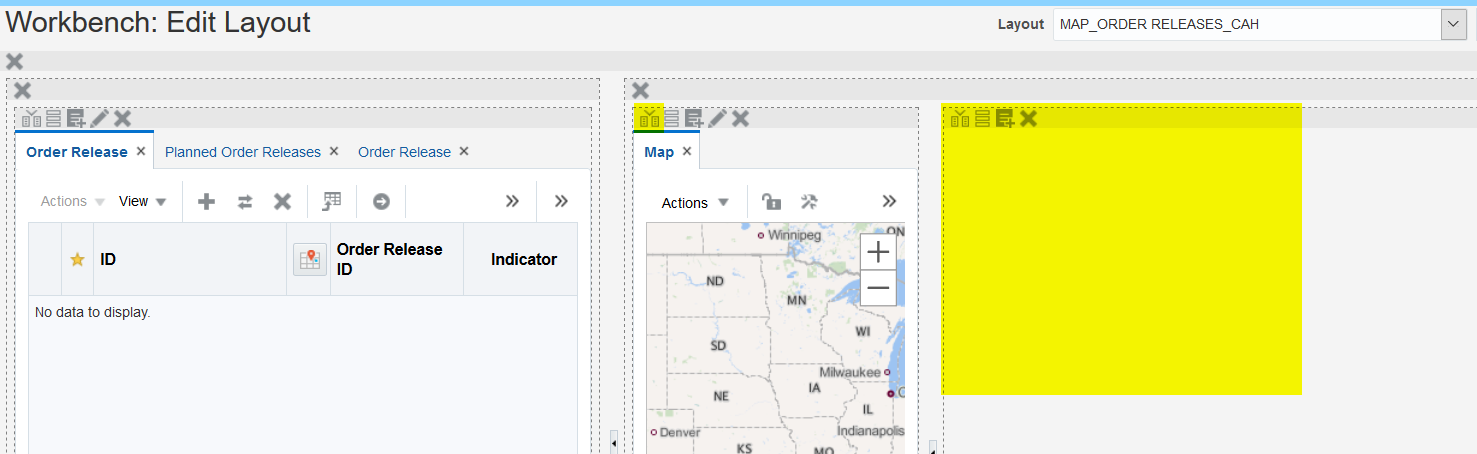

Workbench - Splitter Configuration - Split Existing Region

In prior releases, in order to add a new split region (horizontal or vertical) to a region that already had content, you had to delete the tabs first, then add the split and recreate the tabs. In this release, the split vertically and split horizontally buttons are available in the region even when there is already content.

Upon performing the split function, the first pane contains the contents of the original and the second one is empty and available for you to add content. In this example, the map pane was split horizontally and the original map is on the left and the new pane is on the right.

To reverse the split operation, you can delete the empty region using the delete button that is available in the pane.

Steps to Enable

You don't need to do anything to enable this feature.

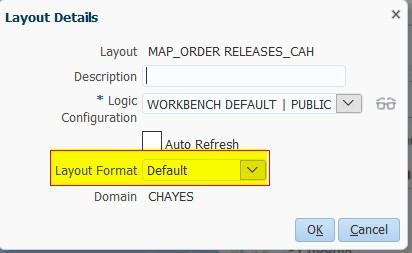

Workbench - Layout Display Format

Use the Layout Display function to reduce white space and make your current layout more compact. This is especially useful for large layouts.

The layout display options are:

- Default: select for the largest font and spacing.

Default Largest Font

- Compact: select for medium font and spacing.

Compact Medium Size Font

- Super Compact: select for the smallest font and spacing.

Super Compact Smallest Size Font

Steps to Enable

You can assign a layout format to a specific workbench layout when you create, copy, or edit a layout using Create/Copy/Edit Layout.

Set Layout Format

The layout format specified by clicking Layout Display overrides the layout format selected when the layout was created/edited.

Workbench - Manager Layout a Region

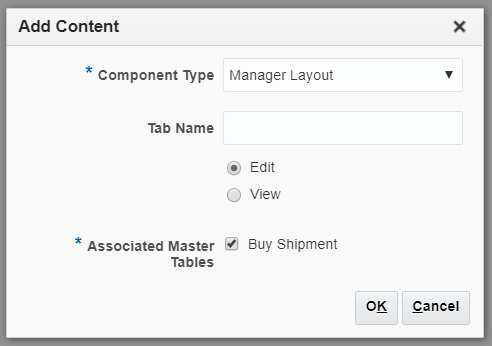

You can configure a Workbench Layout with a View and/or Edit Manager Layout as a detail region of a Workbench. There is a new option of Manager Layout when adding a region to a Workbench Layout.

When adding a Manager Layout to a Workbench, you need to specify:

- Tab Name

- Indicate if the Edit or View manager layout is to occupy this region

- The Associated Master Table. The manager layout used is the one that is associated with the master table's screen set (it's configured in the general tab of the screen set).

Here is an example of a Workbench Layout with an Edit Manager Layout:

Here is an example of a Workbench Layout with a View Manager Layout:

As with most detail regions within a Workbench Layout, there is a Lock/Unlock function on the region.

- Click the Lock View icon to lock that manager layout and keep the results; then, if you click another row in the master table the results displayed in the manager layout do not change.

- This is useful, for example, when you have a shipment master table and a shipment manager layout since it allows you to select a shipment to view and then lock the manager layout. With the shipment manager layout locked, you can click around in the shipment table and the manager layout will not change.
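The Lock/Unlock behavior described above can be sketched as a small state holder. This is only an illustration of the described behavior; the class and method names are hypothetical and do not correspond to any OTM API.

```python
class ManagerLayoutRegion:
    """Hypothetical model of a manager layout region with a Lock View toggle."""

    def __init__(self):
        self.locked = False
        self.displayed = None  # id of the record currently shown in the region

    def toggle_lock(self):
        self.locked = not self.locked

    def on_master_row_selected(self, record_id):
        # While locked, clicking another master row does not change the region.
        if not self.locked:
            self.displayed = record_id
        return self.displayed

region = ManagerLayoutRegion()
region.on_master_row_selected("SHIPMENT-1")
region.toggle_lock()
print(region.on_master_row_selected("SHIPMENT-2"))  # SHIPMENT-1
region.toggle_lock()
print(region.on_master_row_selected("SHIPMENT-3"))  # SHIPMENT-3
```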

Steps to Enable

You don't need to do anything to enable this feature.

Tips And Considerations

Where possible, use simplified/configured Manager Layouts, since the default/PUBLIC Manager Layouts display a vast amount of data and could be overwhelming when displayed within a Workbench Layout.

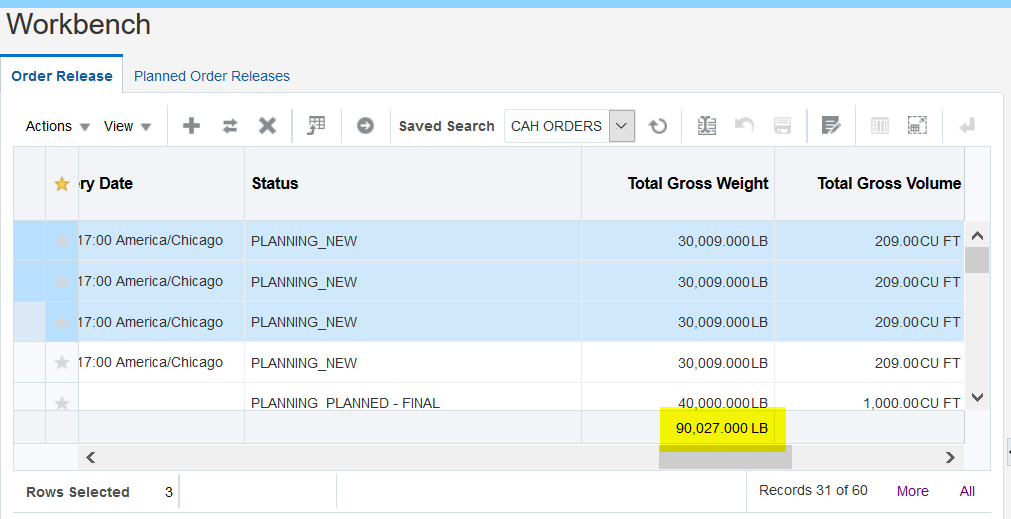

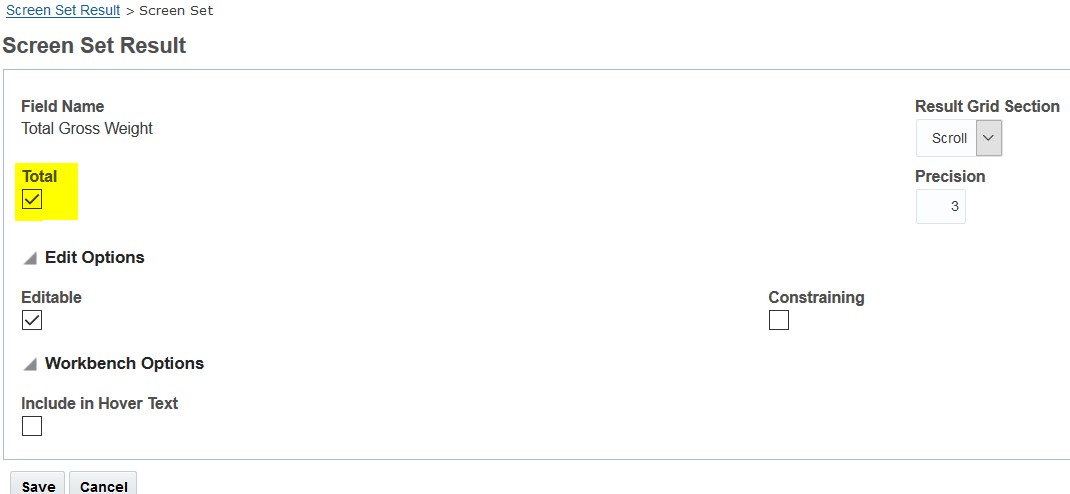

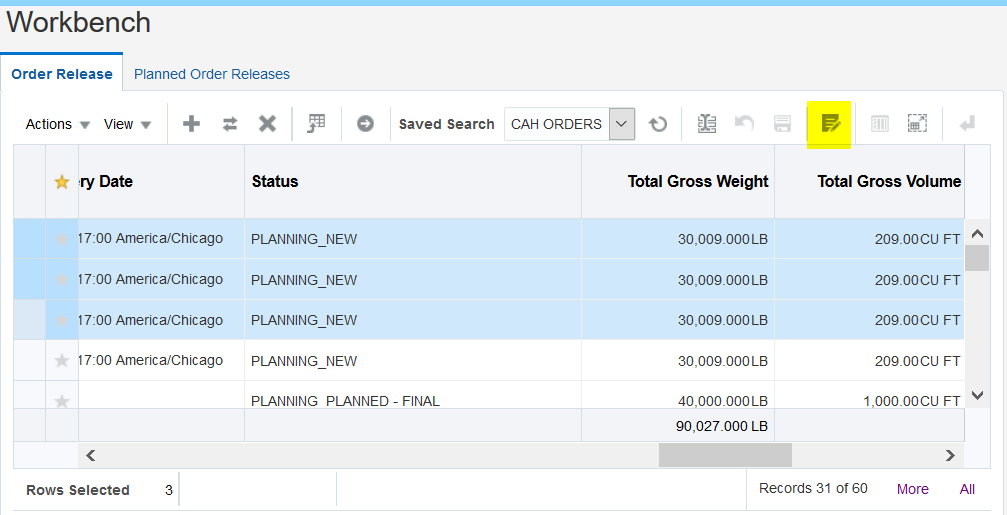

Workbench - Selected Rows Totals for Workbench Tables

If a screen set result column is configured to total, the total will be shown in the workbench table for the selected rows. The total is displayed at the bottom of the table.

Total Display

Steps to Enable

Configure the relevant result columns in the screen set to total, then use that screen set when configuring your workbench table.

Here's an example of a screen set result column being set to total. This setting is available via the "more" button in the screen set results configuration.
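To make the selected-rows total concrete, here is a minimal sketch that sums one column over only the selected rows. The row structure, column name and ids are hypothetical sample data, not OTM field names.

```python
def selected_rows_total(rows, selected_ids, column):
    """Sum one column over only the rows whose id is currently selected."""
    return sum(row[column] for row in rows if row["id"] in selected_ids)

# Hypothetical workbench table rows.
shipments = [
    {"id": "S1", "total_weight": 1200.0},
    {"id": "S2", "total_weight": 800.0},
    {"id": "S3", "total_weight": 450.0},
]
print(selected_rows_total(shipments, {"S1", "S3"}, "total_weight"))  # 1650.0
```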

Workbench - Mass Update Support for Workbench Tables

When a result column is configured as editable within a screen set column, it is available for mass update. A new function has been added to the workbench table and is visible when a screen set used for the workbench table has columns that are configured as editable.

This allows you to make the same edit to multiple records at the same time.

Steps to Enable

- To initiate a mass update, select the records in the table and then click the mass update icon:

- A popup will display where you can make your changes

- Save your changes

- This functions the same way that it does in finders except you do not get the confirmation popup.

Workbench - Multiple Masters to One Detail Table

When configuring a table in a Workbench as a detail table, you can now select one or more master tables. Previously, only one master table could be selected. For example, Shipments in Planning status and Shipments in Execution status can both be master tables for one Shipment Stop detail table.

Steps to Enable

- Configure a workbench table

- Designate the table to be a Detail table

- Specify a saved search for each of the desired master tables. Any master table without a saved search populated will not be a master for this detail table. The saved search is used to establish the relationship between the detail table and its master(s).
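The rule in the last step, that only master tables with a saved search populated act as masters, can be sketched as follows. The class, field names and saved search ids are hypothetical and serve only to illustrate the relationship.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class DetailTableConfig:
    name: str
    # Master table name -> saved search linking the detail table to that master
    # (None when no saved search has been populated).
    master_saved_searches: Dict[str, Optional[str]] = field(default_factory=dict)

    def active_masters(self) -> List[str]:
        """Only masters with a saved search populated drive this detail table."""
        return [m for m, search in self.master_saved_searches.items() if search]

stops = DetailTableConfig(
    name="Shipment Stops",
    master_saved_searches={
        "Shipments in Planning": "STOPS_FOR_SHIPMENT",    # hypothetical saved search
        "Shipments in Execution": "STOPS_FOR_SHIPMENT",
        "Order Releases": None,                           # no saved search: not a master
    },
)
print(stops.active_masters())  # ['Shipments in Planning', 'Shipments in Execution']
```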

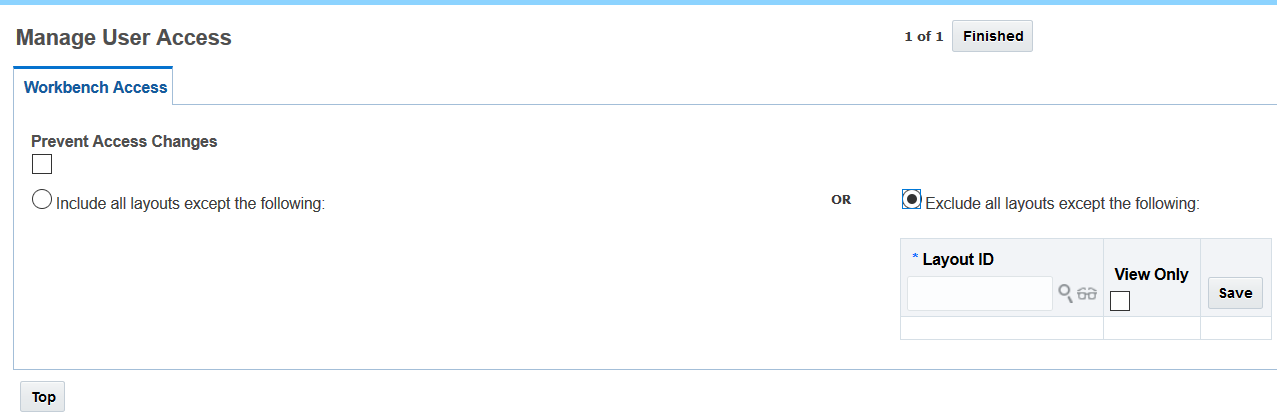

Workbench - View Only Access

User Access has been enhanced to allow users to be limited to view only access for selected workbench layouts. If a user has view only access to a Workbench Layout, they will not be able to edit or delete the layout.

Steps to Enable

An administrator with User Access permissions can configure which users have access to specific Workbench Layouts and in this release can indicate if access should be limited to "View Only" access by setting the flag accordingly next to the Workbench Layout in the User Access Manager.

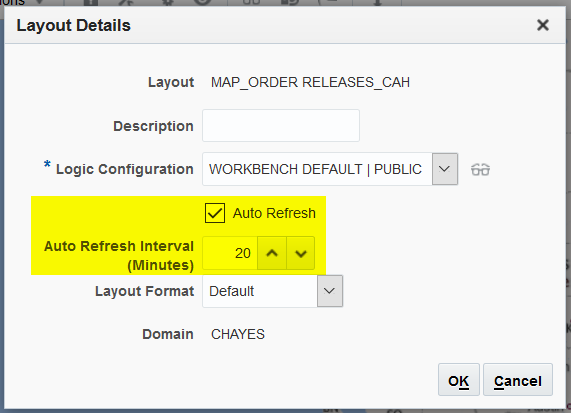

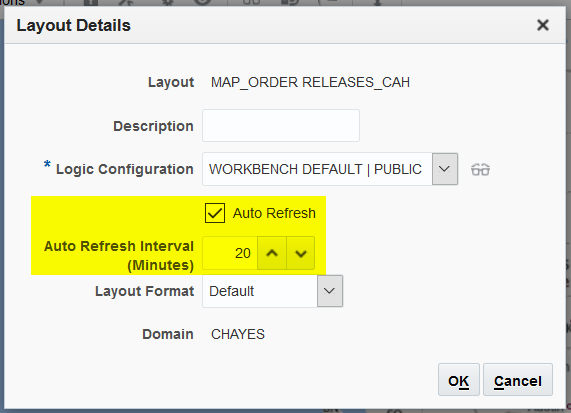

Workbench - Refresh All

The OTM data displayed in a workbench layout can become stale because other OTM users or backend processes might have modified the data. To refresh the data, you now have two options, auto refresh or manual refresh all, to refresh each (non-child) component in the layout. A refresh all process (either manual or automatic) retrieves the latest data for the existing objects on each non-child component. Selection may not be retained because the selected object might no longer exist.

There are 2 new Refresh All functions available for your workbench.

- Auto Refresh - An ADMIN user (a user with the ADMIN role) can configure a workbench layout to refresh automatically after a set duration (between 5 and 120 minutes). This is suitable for monitoring data with minimal intervention. This setting is available when creating the layout or in layout details when editing the layout.

- Refresh All Data (button/icon) on the Toolbar - You can initiate the workbench refresh directly within your workbench using the new "Refresh All Data" icon/button on the workbench layout toolbar.

Steps to Enable

REFRESH ALL - AUTO

An ADMIN user (a user with the ADMIN role) can configure a workbench layout to refresh automatically after a set duration (between 5 and 120 minutes). This setting is available when creating the layout or in layout details when editing the layout. When the duration passes, a Refresh All initiates and does a complete refresh of the entire workbench, including a re-query of the data displayed.

Setting a workbench layout to auto refresh can be done when creating the workbench layout or via the layout details icon on the layout toolbar.

If a workbench is set to auto refresh a calendar icon will be evident at the top of the workbench layout. Upon hovering over this icon the user can see the time of last refresh and the time of next refresh.

REFRESH ALL - MANUAL

You can initiate the workbench refresh directly within your workbench using the new refresh all icon/button on the workbench layout toolbar.

Refresh all will do a complete refresh for the entire workbench including a re-querying of the data displayed. If a manual refresh is performed on a workbench layout that is configured for auto refresh, the auto refresh timer is reset. For example, if your auto refresh interval is 20 minutes, the last refresh time is 9:00, and you manually refresh the layout at 9:15, then the next refresh time is updated to 9:35 (20 minutes after your manual refresh).

Tips And Considerations

A couple of important things to consider when using either the manual refresh all function or the auto refresh capability:

- The Refresh All function (either manual or auto) should not be run if a component is in Inline Edit mode. If a change has been made to the data but not yet saved, the change will be lost upon refresh.

- Refresh all will do a complete refresh for the entire workbench including a re-querying of the data displayed.

- If a manual refresh is performed on a workbench layout that is configured for auto refresh, the auto refresh timer is reset. For example, if your auto refresh interval is 20 minutes, the last refresh time is 9:00, and you manually refresh the layout at 9:15, then the next refresh time is updated to 9:35 (20 minutes after your manual refresh).
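The timer reset arithmetic in the example above can be checked with a short sketch. The function name is hypothetical and the calendar date is arbitrary; only the 5 to 120 minute range and the 9:00 / 9:15 / 9:35 figures come from the text.

```python
from datetime import datetime, timedelta

MIN_MINUTES, MAX_MINUTES = 5, 120  # allowed auto refresh range stated above

def next_auto_refresh(last_refresh: datetime, interval_minutes: int) -> datetime:
    """Next auto refresh is one interval after the most recent refresh of any kind."""
    if not MIN_MINUTES <= interval_minutes <= MAX_MINUTES:
        raise ValueError("auto refresh interval must be between 5 and 120 minutes")
    return last_refresh + timedelta(minutes=interval_minutes)

# Arbitrary date; only the times of day matter for the example.
auto_refresh_at = datetime(2024, 1, 1, 9, 0)
manual_refresh_at = datetime(2024, 1, 1, 9, 15)

print(next_auto_refresh(auto_refresh_at, 20).strftime("%H:%M"))    # 09:20
print(next_auto_refresh(manual_refresh_at, 20).strftime("%H:%M"))  # 09:35
```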

Workbench - Refresh After Action

Upon the completion of an action on an object within a Workbench a refresh is automatically sent back to the workbench to allow the display of updated data where possible. Most actions are supported, however, more complex actions are excluded, including but not limited to the following:

- Work Assignment Actions

- Add Shipment

- Remove Shipment

Steps to Enable

You don't need to do anything to enable this feature.

Workbench - Refresh Detail Tables When Master Table is Refreshed

When a Master table record is refreshed or selected, the Detail table records are re-queried as there may be a change in the records. The re-queried data is made available in the detail table.

Steps to Enable

You don't need to do anything to enable this feature.

Workbench - Saved Query No Longer Runs During Creation or Edit of a Workbench Table

To speed up the creation of Workbench Layouts, the Saved Query will not be run during Workbench table creation or edit.

Steps to Enable

You don't need to do anything to enable this feature.

Tips And Considerations

While the saved query no longer runs automatically during creation or edit, you do have the option of manually running the saved query when creating or editing a workbench.

Document Management - Add Document Multi-Select Document Option

This feature provides you with an expanded set of document capabilities for the OTM Shipment, GTM Campaign, and GTM Campaign Line managers. The new capability allows you to perform a multi-select to create, link or copy your documents across multiple objects to better support common business relationships where a single document can be related to multiple selections. For example you can now copy a standard certification to a set of shipments or upload a single Certificate Of Origin for multiple Campaign Lines.

The Add Document action capabilities include:

- Create As New - allows you to add a new document to one or more selected objects in your finder results.

- Create As Link - allows you to link a selected/existing document to one or more of your selected finder results.

- Create As Copy - allows you to copy a selected/existing document to one or more of your selected finder results.

Other capabilities provided with this feature include:

- Document Used As designation. The possible Used As options are:

  - Individual (I) - used to identify a standalone document that was created as an individual document. The document will retain its Used As Individual designation even if it is subsequently linked to other documents or copied.

  - Linked (L) - linked documents are documents that are linked directly to other documents. The linked from document will be identified on the linked document through the Related Document ID. In addition, any document that is generated from a Document Type where the Consolidated flag has been selected will have the Used As designation of Linked, and the Related Document ID for these documents will link to the Consolidated Document.

  - Consolidated (C) - a consolidated document is a cross-reference document that provides a cross-reference link for documents that are generated with data coming from many objects, e.g., a quarterly shipment summary document that summarizes the activities of many shipments, or a summary document that is generated across many campaign lines. The generation of the consolidated document is triggered by having the Consolidated flag checked on the document's related Document Type. For Consolidated documents, one consolidated document is generated; then, for each object, a linking document is assigned that references the consolidated document by way of the Related Document ID. Note that each object owns its link document, but the Consolidated document itself is not related to any single object; it only exists to create the cross reference between all the involved objects.

  - Template (T) - identifies a document that represents a blank form that the user can download, fill out, and then upload as a completed document. A template document can be attached to a document type, and the template can be downloaded when that document type is selected.

- Document Context Qualifier ID and Value - allows you to create your own unique identifier qualifier value for your documents.

- Document Effective Date and Expiration Date.
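The relationship between a Consolidated document and its per-object link documents can be pictured with a small data sketch. The class, field names and id scheme are hypothetical; the sketch only mirrors the structure described above, where one Consolidated (C) document is owned by no object and each object owns a Linked (L) document pointing to it through the Related Document ID.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Document:
    doc_id: str
    used_as: str                               # "I", "L", "C", or "T"
    owner: Optional[str] = None                # owning business object, if any
    related_document_id: Optional[str] = None  # cross reference to another document

def build_consolidated(doc_id: str, object_ids: List[str]) -> List[Document]:
    """Create one consolidated document plus one link document per object."""
    consolidated = Document(doc_id=doc_id, used_as="C")
    links = [
        Document(doc_id=f"{doc_id}-{obj}", used_as="L",
                 owner=obj, related_document_id=doc_id)
        for obj in object_ids
    ]
    return [consolidated] + links

docs = build_consolidated("Q1-SUMMARY", ["SHIP-1", "SHIP-2"])
for d in docs:
    print(d.doc_id, d.used_as, d.owner, d.related_document_id)
```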

Steps to Enable

You don't need to do anything to enable this feature.

Tips And Considerations

The new Business Process Automation > Documents > Add option supports the same functionality (and more) that is provided by the Attach Documents, Generate Document and Upload Document actions. Where the new Add option is available, you can reduce the number of actions provided to your users to help avoid confusion.

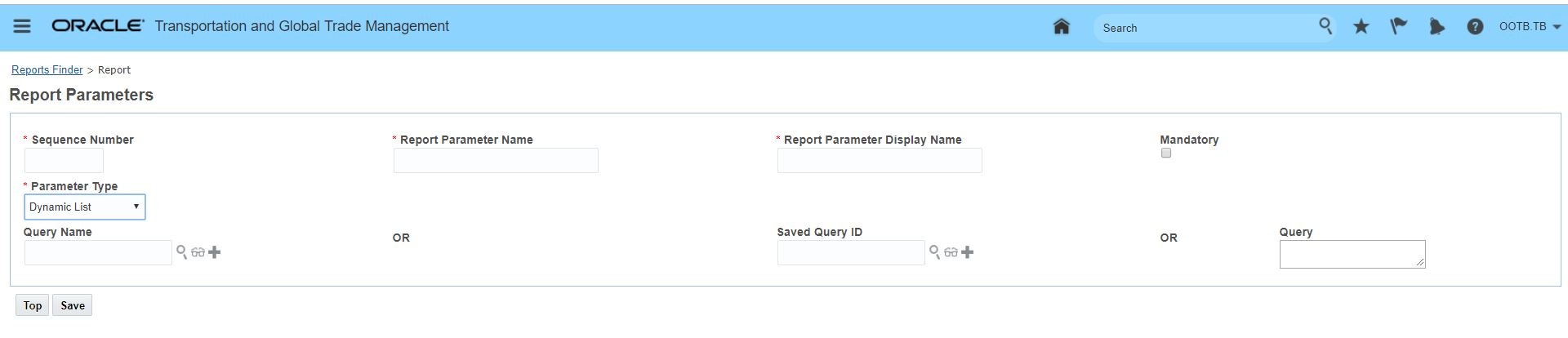

Query Based Dynamic Drop List for Reports

This feature provides you with the ability to create a SQL query that can be used to generate a drop list of values for your reports - this will allow you to restrict the parameter values used for generating your reports.

Steps to Enable

A text area is available when defining the report parameter where a SQL query can be written which will be executed and will generate a drop list based on the output of the query entered.

Under Business Process Automation>Power Data>Document Generation>Reports

- Create a report parameter of the type 'Dynamic List'

- Enter an SQL query for the parameter

Dynamic List Input

Running the report will generate a drop list based on the SQL query entered.
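As an illustration of how a query result becomes a drop list, the sketch below runs a query and uses the first column of each row as a list value. It uses an in-memory SQLite database as a stand-in; the `carrier` table, its columns and the sample rows are hypothetical and are not part of the OTM schema.

```python
import sqlite3

def dynamic_list_values(conn, query):
    """Run the parameter's query and return the first column as the drop list."""
    return [row[0] for row in conn.execute(query)]

# Hypothetical stand-in data; a real parameter would query the report's data source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE carrier (carrier_id TEXT, is_active INTEGER)")
conn.executemany(
    "INSERT INTO carrier VALUES (?, ?)",
    [("CARRIER_B", 1), ("CARRIER_A", 1), ("CARRIER_C", 0)],
)

query = "SELECT carrier_id FROM carrier WHERE is_active = 1 ORDER BY carrier_id"
print(dynamic_list_values(conn, query))  # ['CARRIER_A', 'CARRIER_B']
```

Restricting the query (here with a `WHERE` clause) is what limits the parameter values a user can pick when running the report.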

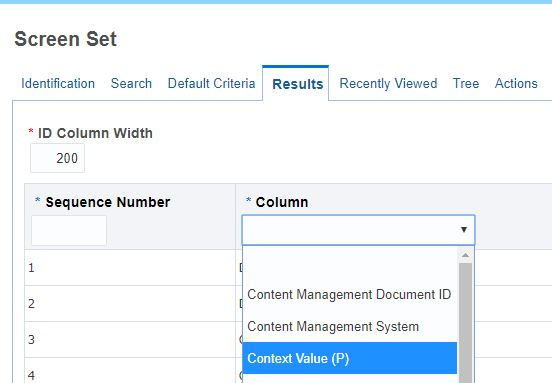

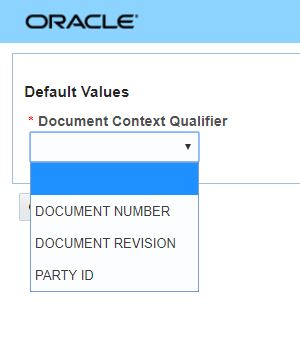

Support for Document Context as Pseudo Field

This feature provides you with the option to use the Document Context field as a Pseudo Field, providing you with all the configuration options that Pseudo Fields provide. For example, you can configure a pseudo field for the Document Context Qualifier = Document Revision, add that pseudo field to your finder, and then easily search for documents that do or do not have revisions.

Document Context Pseudo Field

Pseudo Field for Document Context

Steps to Enable

This feature is enabled by following the standard Screen Set Manager steps used for any other Pseudo Field.

- Go to Configuration and Administration > User Configuration > Screen Set Manager and copy the Public Document screen set.

- Go to the Results tab. Use this page to configure the columns that appear on the Results page of the business object assigned to this screen set. Enter an ID column width which consistently appears as the first column on all Results pages.

- The Document Context field is identified as a Pseudo and is marked with the letter P.

- Add the Document Context field to your results.

- Select the Document Context Qualifier to use for the field.

Oracle Transportation Management (Base)

Multi-Threading for Rating Engine

This feature provides you with the option to multi-thread your rating engine calls, either for internal OTM rates or for external rating engine calls. In most situations, multi-threading the rating engine calls improves the rating performance of OTM in the areas where rating is called, including Rate Inquiry (RIQ), Bulk Planning, and actions that involve rating.

Steps to Enable

The initial thread count/the default value is 1 - which means the rate record evaluation will be performed in sequence.

If you notice a large Backlog and a high (average) Queue Size, increase the number of threads in steps of 2, checking the throughput (Backlog and Queue Size) after each new setting.
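The tuning procedure can be sketched as a simple loop: start at 1 thread and add 2 at a time while both Backlog and Queue Size remain high. This is only an illustration of the manual procedure. The thresholds, the upper bound and the `fake_measure` function are hypothetical, and in practice the measurements are read from the Event Queues page rather than from code.

```python
def tune_thread_count(measure, start=1, step=2, max_threads=9,
                      backlog_limit=100, queue_limit=50):
    """Raise the thread count by `step` while both backlog and queue size stay high."""
    threads = start
    while threads < max_threads:
        backlog, queue_size = measure(threads)
        if not (backlog > backlog_limit and queue_size > queue_limit):
            break  # throughput looks acceptable at this setting
        threads += step
    return threads

# Hypothetical measurements: the load divides evenly across threads.
def fake_measure(threads):
    return 600 // threads, 240 // threads

print(tune_thread_count(fake_measure))  # 5
```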

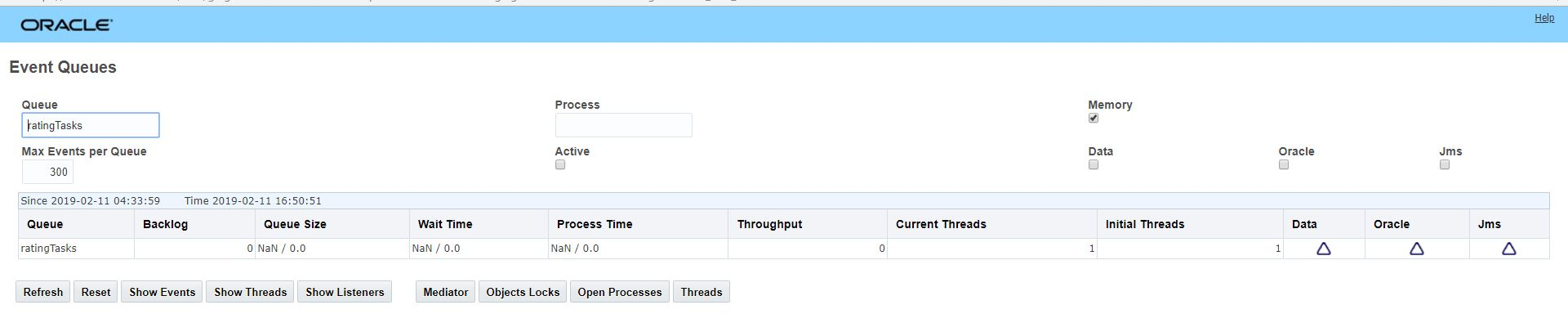

You can tune the thread settings and review the impact of different settings through the Event Queues page. Note that changing the settings in the Event Queues page is a temporary change that will be lost when the server is restarted. Once the desired thread setting has been determined, set the thread group before starting the server.

The Event Queues page can be accessed via Configuration and Administration > Technical Support > Diagnostics and Tools > EventManagement > Event Queues.

You must be logged into OTM as DBA.ADMIN to access the Event Queues page.

Event Queue Rating Tasks

Key Resources

For more information on the effective use of multi-threading with OTM/GTM:

- Review the Help topic 'Multi-threading Logic in OTM',

- Review the ‘Workflow Thread Tuning’ section of the Cloud Getting Started Guide: https://docs.oracle.com/cd/E60665_01/otmcs_gs/OTMCG/OTMCG.pdf

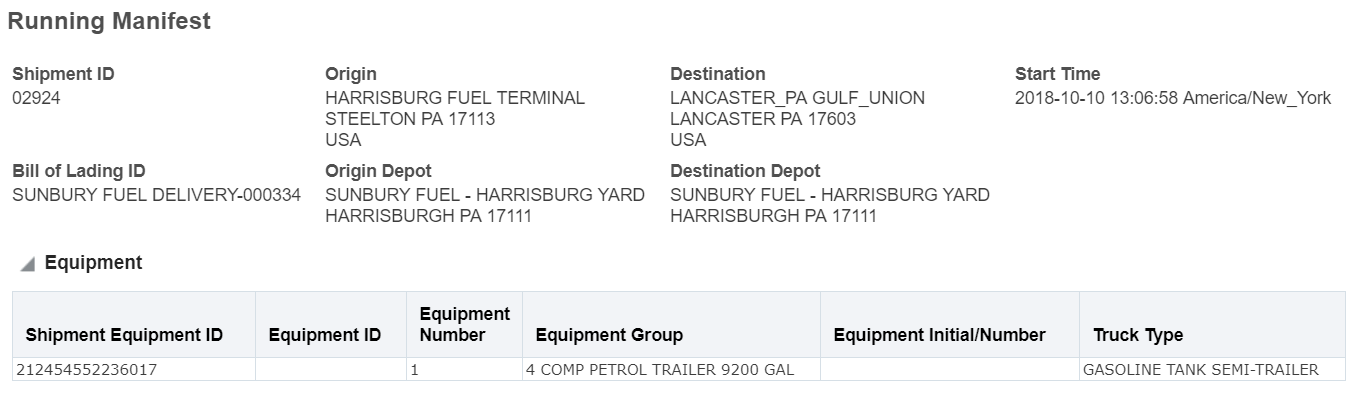

This feature provides you with a shipment action to generate a running or rolling manifest view of your shipments. The running manifest provides stop level detail from stop to stop capturing the details of the freight being transported.

The Running Manifest action is accessed via Shipment Management > Shipment Management > Buy Shipments > Actions > Shipment Management > View > Running Manifest.

THE EXAMPLE BELOW IS FOR A MULTI-STOP SHIPMENT WITH A MULTI-COMPARTMENT TRUCK

Header

The header provides the basic shipment information about the geography, reference numbers, and equipment. The equipment section provides the only reference to the Shipment-Equipment ID since the equipment will subsequently be referenced by a sequence number. The equipment information also includes the number painted on the actual truck and the type of truck, if this has been configured.

Running Manifest Header

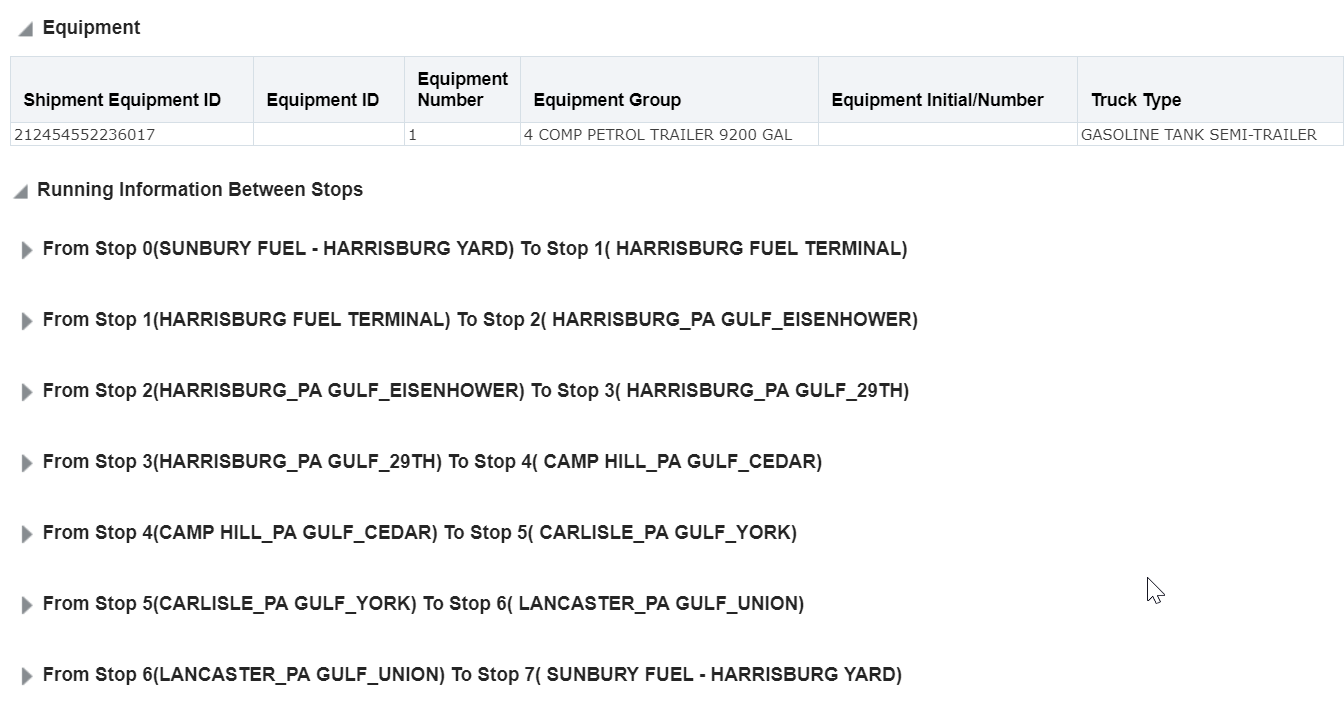

STOP TO STOP DETAIL

The result screen is then segmented by the stop to stop details. These sections can be expanded to provide the information between the stops.

Running Manifest Stops

The expanded sections are each arranged to provide a header which explains the details associated with the next stop. There is also a detailed listing of each ship unit on board between those stops. There are also 2 sections that show summaries of the equipment and compartments in terms of utilization. Each section needs to be expanded to show the details.
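The idea of listing the ship units on board between two stops can be sketched as a filter on pickup and drop-off stop numbers. The field names and sample ship units are hypothetical; the actual Running Manifest is produced by the shipment action, not by user code.

```python
def on_board_between(ship_units, from_stop):
    """Return ids of ship units on board after leaving from_stop for the next stop."""
    return [
        u["id"] for u in ship_units
        if u["pickup_stop"] <= from_stop < u["dropoff_stop"]
    ]

# Hypothetical three-stop shipment.
ship_units = [
    {"id": "SU-1", "pickup_stop": 1, "dropoff_stop": 2},
    {"id": "SU-2", "pickup_stop": 1, "dropoff_stop": 3},
    {"id": "SU-3", "pickup_stop": 2, "dropoff_stop": 3},
]
print(on_board_between(ship_units, 1))  # ['SU-1', 'SU-2']
print(on_board_between(ship_units, 2))  # ['SU-2', 'SU-3']
```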

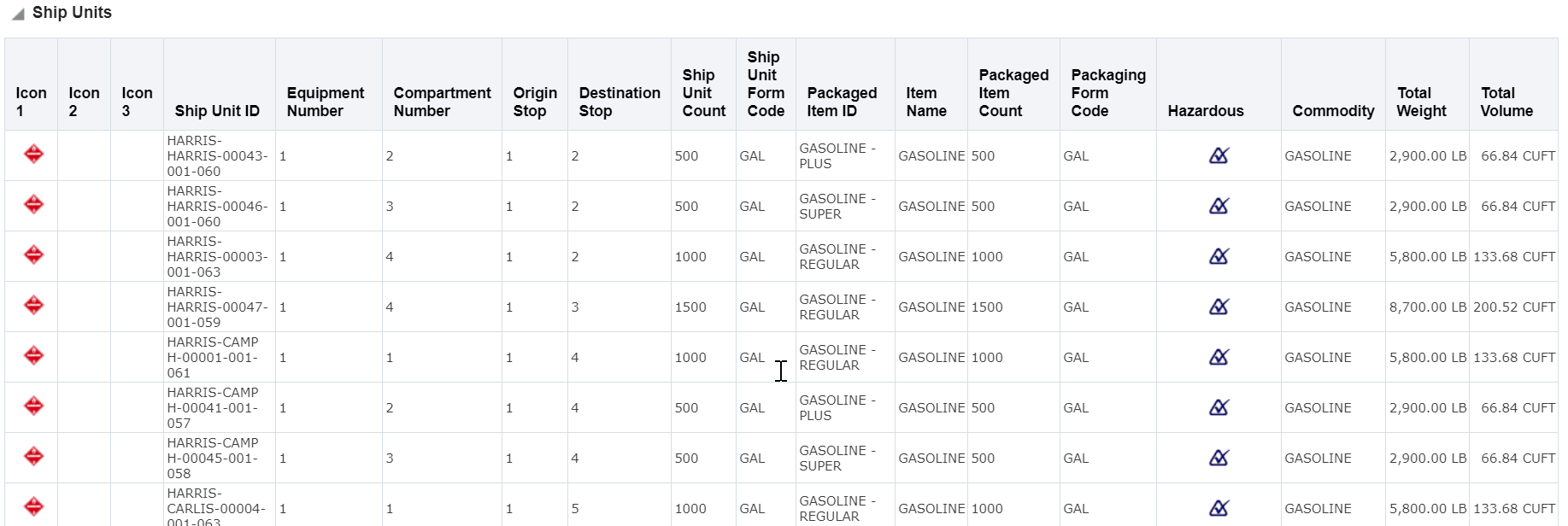

Running Manifest Stop 1 to Stop 2 Details

Expanding the Ship Unit details provides information on the freight that is being carried between stops. This is organized by equipment, compartment and stop number, which allows the user to understand the inventory on board and where it is destined. For users who ship bulk freight, the ship unit count will be especially useful. The report is also intended to serve a commercial purpose, since it contains details about the quantities and weights.

Ship Units - Stop 1 to Stop 2 (Partial List)

Steps to Enable

You don't need to do anything to enable this feature.

Tips And Considerations

You must set the parameter RUN HAZMAT QUALIFICATION PROCESS to true to view the Hazmat Icons on the Running Manifest.

Additional Release Method Order Configuration Options

This feature provides you with two additional order release line level fields that you can use to define your order configurations.

The added order configuration options are:

- Order Release Line Weight

- Order Release Line Volume

Both fields support three values:

- NEVER

- IF NULL (default value)

- ALWAYS

Order Release Line Weight and Volume Options

Steps to Enable

You don't need to do anything to enable this feature.

Tips And Considerations

The following properties are deprecated:

- glog.business.order.orLineVolumeCalcType

- glog.order.line.alwaysRecalcWeightVolume

Documents Actions Added to Tracking Events, Document Element Added to Shipment Status Interface

This feature provides you with Document Management capabilities that you can use with your Tracking Events (ShipmentStatus).

Tracking Events now support all of the standard Business Process Automation Document Management actions:

- Attach Documents

- Generate Documents

- Limited Documents

- Upload Documents

In addition, the Document element has been added to the ShipmentStatus (also known as Tracking Event) interface. This gives you the ability to load documents directly from within the ShipmentStatus interface instead of having to load your documents using the Document interface.

Steps to Enable

You don't need to do anything to enable this feature.

Key Resources

- Review the Integration Guide on the Oracle Help Center for more information regarding OTM/GTM's integration capabilities: https://docs.oracle.com/cloud/latest/otmcs_gs/docs.htm

External Distance Engine and Map Enhancements

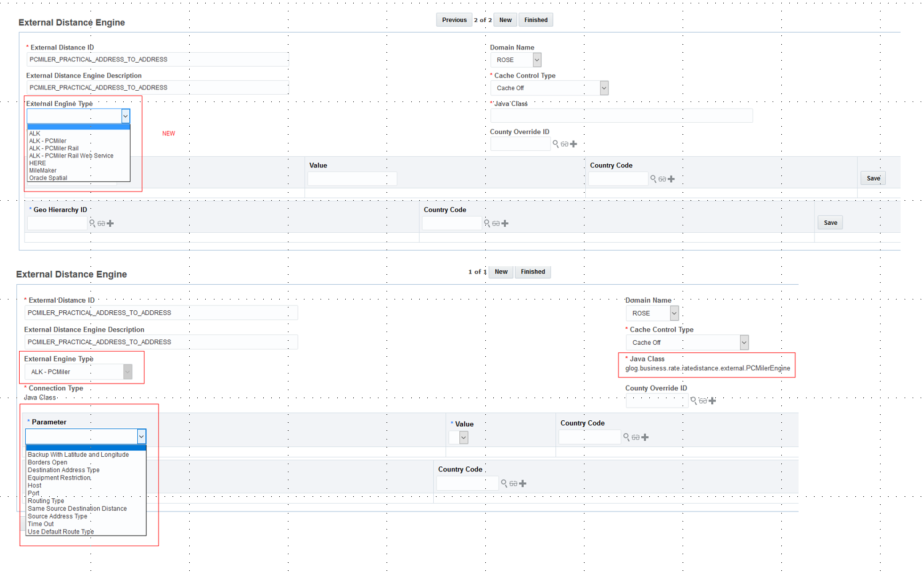

Simplified External Distance Engine Configuration UI

Configuring the External Distance and External Service Engines was confusing because the user was responsible for ensuring that the configured attributes were compatible with the engine. This process has been redesigned to be more user friendly: only the attributes compatible with the selected engine are available on the UI.

The UI supports HERE, ALK, and Oracle Spatial engines.

When the user selects an External Engine Type, the UI will only display the attributes associated with the selected External Engine Type. The code will also fill in the correct Java Class.
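The engine-driven filtering can be sketched as a lookup from engine type to its compatible attributes. The attribute names below are hypothetical placeholders (only the fixed limited weight for HERE and the cache option are mentioned in this document), and the mapping is an illustration of the idea rather than the actual OTM attribute lists.

```python
# Hypothetical attribute names per engine type; the real lists are shown in the OTM UI.
ENGINE_ATTRIBUTES = {
    "HERE": {"routing_mode", "limited_weight", "use_cache"},
    "ALK": {"routing_type", "use_cache"},
    "ORACLE_SPATIAL": {"use_cache"},
}

def visible_attributes(engine_type):
    """Attributes the UI would offer for the selected engine type."""
    return sorted(ENGINE_ATTRIBUTES[engine_type])

def incompatible_attributes(engine_type, config):
    """Configured attributes that the selected engine does not support."""
    return sorted(set(config) - ENGINE_ATTRIBUTES[engine_type])

print(visible_attributes("ALK"))  # ['routing_type', 'use_cache']
print(incompatible_attributes("ALK", {"routing_type": "x", "limited_weight": 5}))
# ['limited_weight']
```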

A new option also lets you turn off the cache.

Simplified Engine Configuration

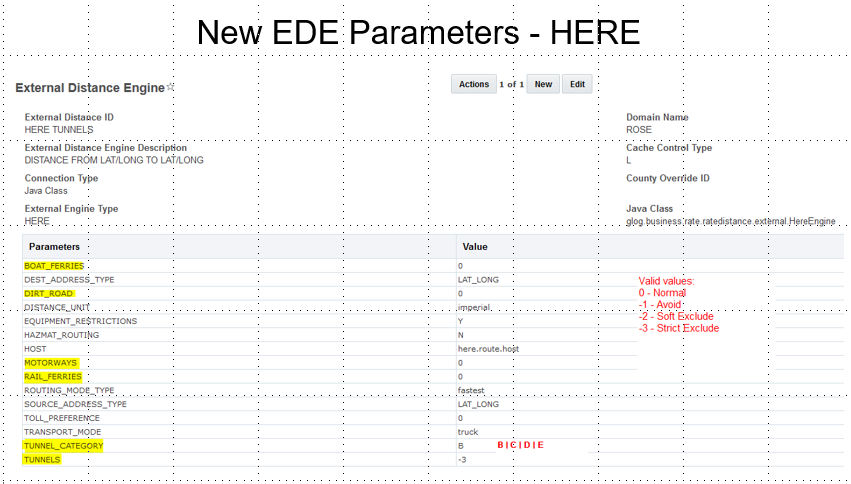

The HERE engine has been updated with new parameters as well. Additionally, the user can set a fixed limited weight by configuring it on the EDE/ESE; otherwise it is dynamically calculated. Limited Weight is an additional parameter that is only used when the user would like to set a fixed limited weight, and should not be confused with the dynamically calculated method, which does not require the parameter.

New Here Parameters

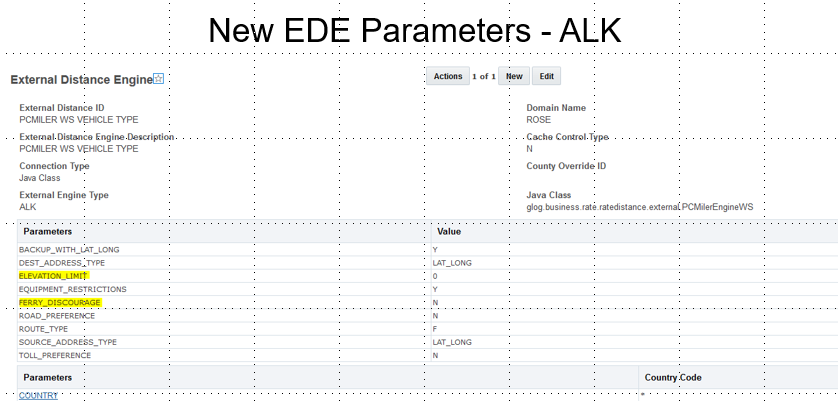

The ALK Engine has been updated with new parameters as well.

New ALK Parameters

Steps to Enable

This feature is all about configuration of the external engines. What is specified for the EDE (External Distance Engine) also applies for the ESE (External Service Engine).

A "smart" UI guides the user through the setup. The user first selects the engine to be used, and the options that are available are then based on that engine. The user does not have to remember the Java Class either, because it is filled in automatically.
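The engine-driven behavior described above can be pictured as a lookup from engine type to its compatible attributes. The following Python sketch is illustrative only and is not how OTM implements the UI. The Java class names, the attribute names other than FIXED_LIMITED_WEIGHT, and the `build_engine_config` helper are all hypothetical placeholders.

```python
# Illustrative model only. Class names and most attribute names below are
# hypothetical placeholders, not values taken from OTM.
ENGINE_PROFILES = {
    "HERE": {
        "java_class": "example.engines.HereEngine",
        "attributes": {"USE_CACHE", "FIXED_LIMITED_WEIGHT"},
    },
    "ALK": {
        "java_class": "example.engines.AlkEngine",
        "attributes": {"USE_CACHE", "ROUTING_TYPE"},
    },
    "ORACLE_SPATIAL": {
        "java_class": "example.engines.OracleSpatialEngine",
        "attributes": {"USE_CACHE"},
    },
}


def build_engine_config(engine_type, values):
    """Return a configuration for engine_type, rejecting incompatible attributes."""
    profile = ENGINE_PROFILES[engine_type]
    incompatible = set(values) - profile["attributes"]
    if incompatible:
        raise ValueError(
            f"{sorted(incompatible)} not supported by engine type {engine_type}"
        )
    # The Java class is derived from the engine type, so the user never types it.
    return {"engine_type": engine_type, "java_class": profile["java_class"], **values}
```

In this model, selecting an engine type is enough to determine both the Java class and the set of attributes that may be entered, which mirrors the intent of the redesigned UI.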

Tips And Considerations

It was decided to provide logic for specific engines based on the attributes that they supported. This way a user would not be able to specify an incompatible attribute nor an incompatible value where there is a specified list of values.

The downside of this approach is that any new engine must be on-boarded in the same manner. Because new vendors for such extensive applications are rare, it is unlikely that there will be timing issues when a new product is announced.

It was decided that the benefits of the ease of use far outweighed any considerations for generic solutions.

Vendor solutions are expensive, so an implementation is likely to use only one vendor, but OTM can handle multiple engine configurations. Users commonly have more than one engine configuration for the same vendor, which is why the effort was made to simplify the configuration.

Screen Set - Configure Map Hover Fields

The "Include in Hover" setting in the Screen Set results has been enhanced to be used for configuring map hover as well as Gantt hover in a Workbench Layout.

Steps to Enable

Use the "Include in Hover" setting in the Screen Set to configure your map hover fields in the Workbench Layout.

Workbench Map - Expose Vendor Map Controls

Using the "Controls" function on the Workbench Map, you see a list of vendor-specific controls that you can add to the map. The list of controls varies depending on the map vendor selected. Select a control from the list to display it on the map. Select the control a second time to remove it from the map.

NOTE: The functionality of each control is determined by the map vendor.

HERE MAP

Configurable Controls:

- Zoom to Area

- Overview Map

- Measure Distance

ALK MAP

Configurable Controls:

- Mouse Coordinates

- Overview Map

- Navigation Toolbar

- Geolocation Toolbar

ORACLE ELOCATION MAP

Configurable Controls:

- Magnify Area

- Toolbar

Steps to Enable

You don't need to do anything to enable this feature.

Workbench - Configure Map Hover Text in Screen Set

The Hover Text configuration in the Screen Set is now used for both map and Gantt hover. To allow these screen sets to be assigned to a Workbench Map, a new section is available when configuring a map region in a Workbench Layout. This section indicates the screen sets that should be used for each object. Some default content is included in the hover (for example, Order Release data is shown in a Shipment hover), but the object's own data relies on the Screen Set configuration.

In each section there is a Show Default Hover check box. This check box controls which default fields are shown in the map hover text pop-up. This check box is selected by default. To remove the default hover fields, deselect this check box.

Steps to Enable

- If you want to change which fields appear by default in the map hover text, expand the Hover Screen Sets section.

- Select user-defined screen sets for one or more objects for which you want to change the hover text.

- A Hover Screen Set controls the fields which appear in the hovering pop-up window when you click the Show Details Hover icon on the map.

- Within the Hover Screen Sets section, you can select the screen sets for the various objects supported by the map component.

- To configure the fields displayed in the hovering pop-up window on the screen set, select the Include in Hover Text option for fields in the Results tab via the More button.

- Once expanded, the Hover Screen Sets section is grouped as follows:

- Shipment: includes shipment-related objects such as buy shipment and shipment stop as well as logistics network modeling related objects such as modeling shipment and modeling shipment stop.

- Order: includes order-related objects such as order movement and order release.

- Driver: includes the driver object.

- Network: includes network-related objects such as location, network leg, region, and region details.

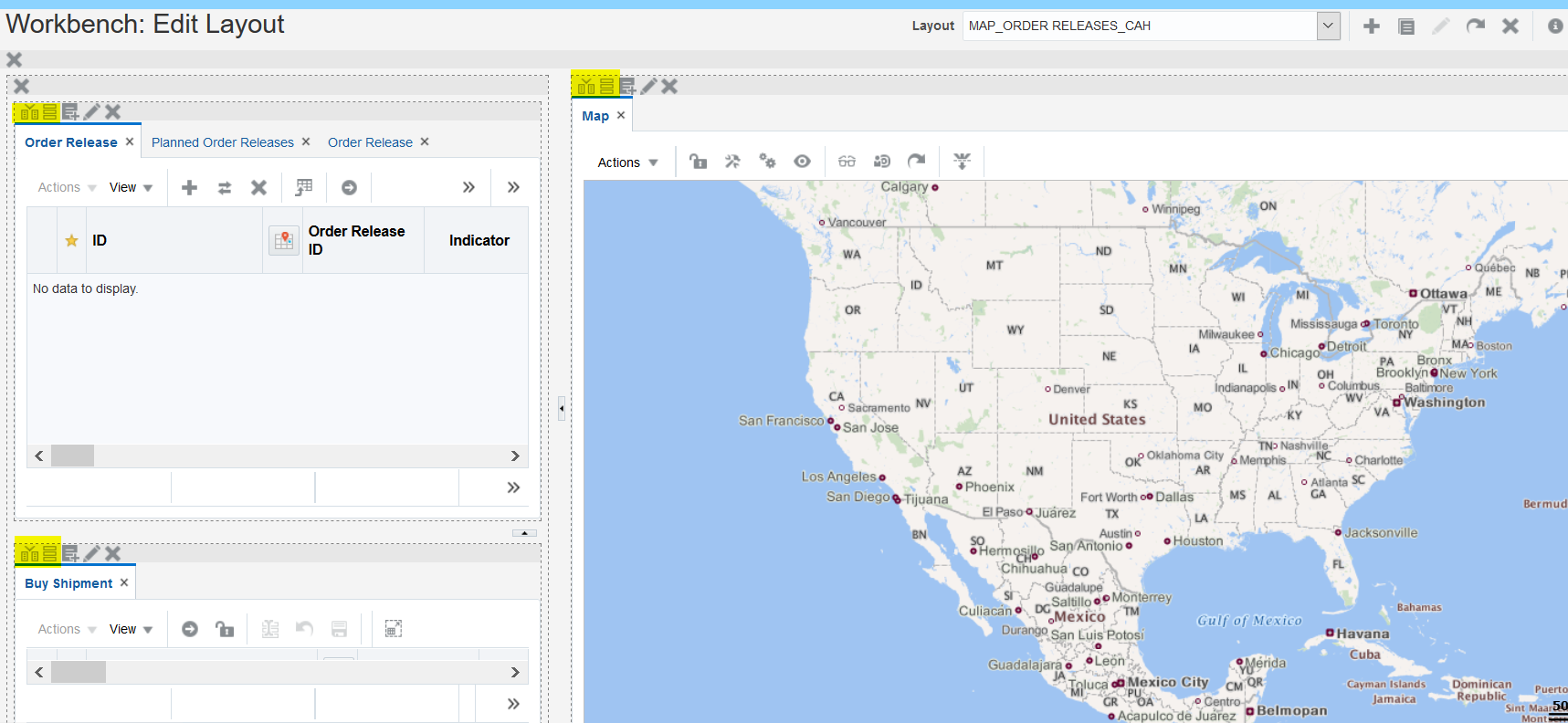

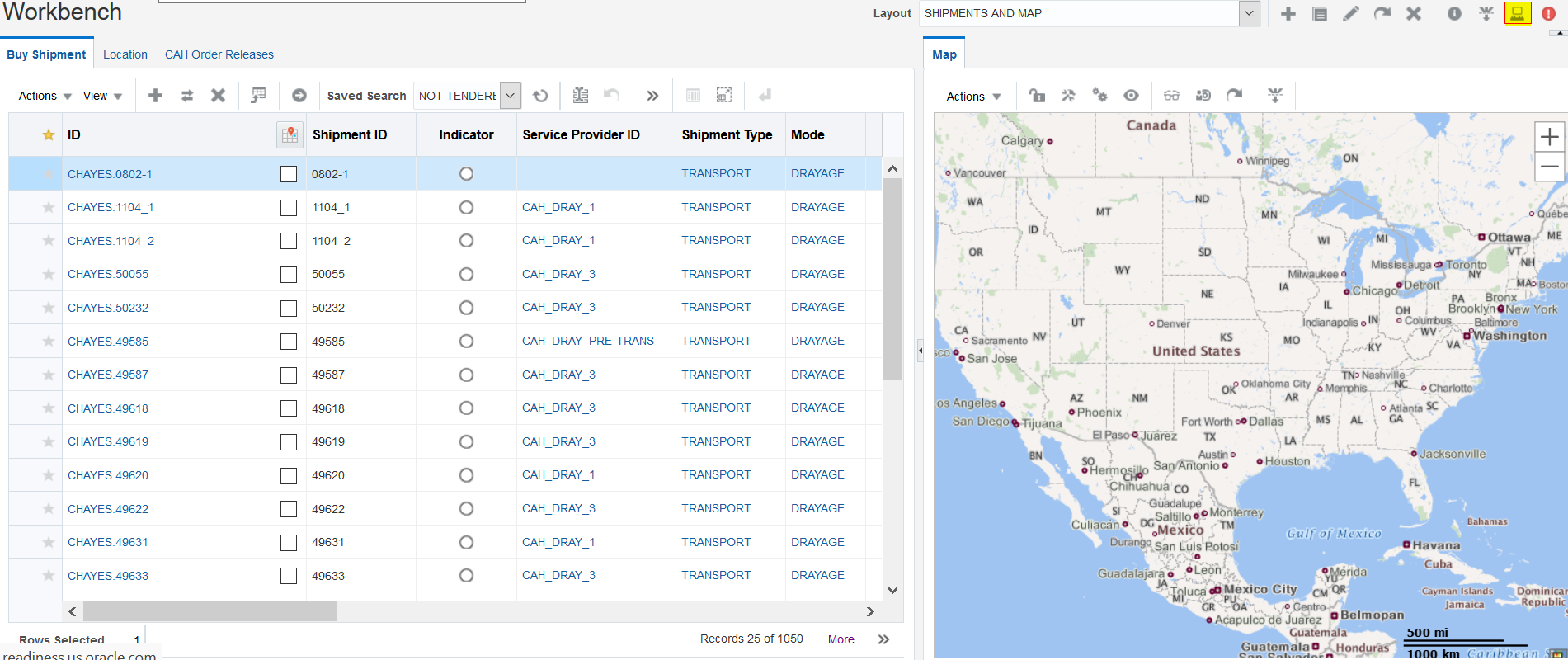

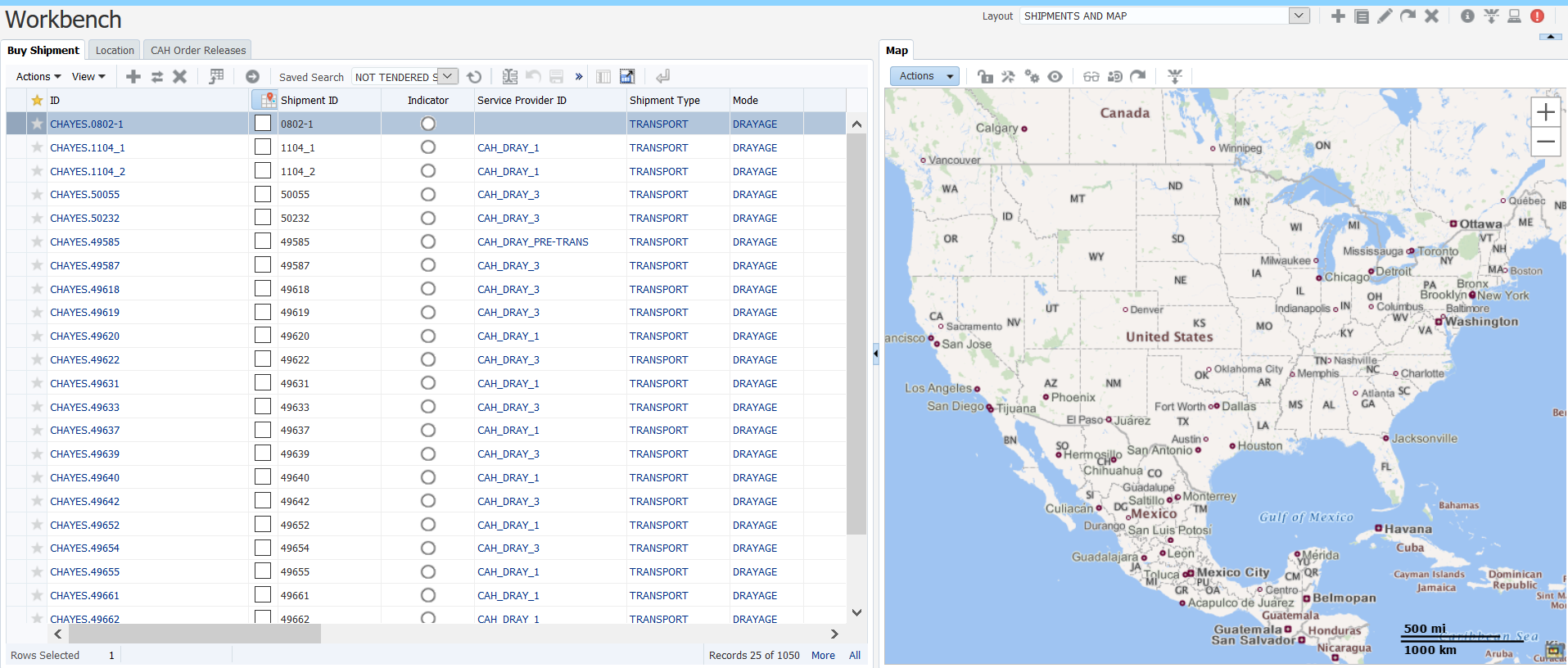

Workbench Map - Support Multiple Maps in Workbench Layout

A Workbench Layout now supports having multiple map regions. The "add to map" function in the Workbench Table adds the objects to all of the maps within the Workbench Layout. Using this along with the Map Filters feature allows you to display different data in your multiple maps. For example, you can map all of the shipments from a shipment table, and use the Map Filter to show TL shipments on one map and LTL shipments on the other.
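As a rough illustration of how "add to map" and Map Filters combine, the sketch below sends every object to every map and lets each map's filter decide what it displays. The `distribute_to_maps` function, the map names, and the shipment fields are hypothetical and exist only for this example.

```python
def distribute_to_maps(objects, map_filters):
    """Send all objects to every map; each map shows only what its filter keeps.

    map_filters maps a map name to a predicate (a function returning True
    for objects that the map should display).
    """
    return {
        map_name: [obj for obj in objects if keep(obj)]
        for map_name, keep in map_filters.items()
    }


# Hypothetical shipments and filters mirroring the TL / LTL example.
shipments = [
    {"id": "S1", "mode": "TL"},
    {"id": "S2", "mode": "LTL"},
    {"id": "S3", "mode": "TL"},
]
filters = {
    "Map 1": lambda s: s["mode"] == "TL",
    "Map 2": lambda s: s["mode"] == "LTL",
}
displayed = distribute_to_maps(shipments, filters)
```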

Steps to Enable

The additional map regions can be added following the steps used to add a single map region in previous releases.

Workbench Map - External Distance Engine and Map - Consider Traffic Between Stops

The previous implementation provided only the origin's departure time to the map vendor (ALK or HERE) to calculate the route based on historic traffic conditions. This change allows the logic to consider the estimated departure times at all stops and pass them on to the vendors.

A new logic parameter, USE TRAFFIC PER STOP, has been added to control the use of traffic per stop pair.

- You can still use the existing USE TRAFFIC parameter if you want to use historic traffic, but not at each stop.

- If the new parameter is turned on, the planned departure time of each stop is calculated, and the departure time of the origin stop of each stop pair is passed on to the vendors. For example, a shipment with 3 stops, A-B-C, contains 2 stop pairs: A-B and B-C. For the first pair the departure time of A is passed, and for the second pair the departure time of B is passed.

- If both USE TRAFFIC and USE TRAFFIC PER STOP are turned on, USE TRAFFIC PER STOP takes precedence.
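The stop pair logic above can be sketched as follows. This is a simplified model, not OTM code: the `departure_times_sent` function and its argument names are hypothetical, and the stops are assumed to arrive as an ordered list of (stop name, planned departure time) tuples.

```python
from datetime import datetime


def departure_times_sent(stops, use_traffic=False, use_traffic_per_stop=False):
    """Return (origin, destination, departure_time) entries passed to the vendor.

    stops is an ordered list of (stop_name, planned_departure) tuples.
    """
    if use_traffic_per_stop:
        # Takes precedence over use_traffic: one entry per stop pair,
        # using the departure time of that pair's origin stop.
        return [
            (origin[0], destination[0], origin[1])
            for origin, destination in zip(stops, stops[1:])
        ]
    if use_traffic:
        # Earlier behavior: only the shipment origin's departure time is sent.
        return [(stops[0][0], stops[-1][0], stops[0][1])]
    return []


stops = [
    ("A", datetime(2024, 1, 1, 8, 0)),
    ("B", datetime(2024, 1, 1, 12, 0)),
    ("C", datetime(2024, 1, 1, 18, 0)),
]
per_stop = departure_times_sent(stops, use_traffic=True, use_traffic_per_stop=True)
```

With both parameters on, `per_stop` holds one entry for A-B carrying the departure time of A and one for B-C carrying the departure time of B.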

Steps to Enable

You don't need to do anything to enable this feature.

Workbench Map - Consider Hazmat for Each Pair of Stops

This feature expands the Workbench Map functionality to consider Hazmat between each pair of shipment stops. In past releases, multi-stop shipments with HAZMAT were plotted like 2-stop shipments, which resulted in sub-optimal routing because the route was determined by the most hazardous item on the shipment. With the implementation at the stop pair level, the route is calculated from the hazmat items specific to each stop pair.

- If hazmat is present, stop pair level routing is always considered.

- Hazmat items are identified as relevant to a pair of shipment stops and are passed on to HERE or ALK when calculating the route.

- Both adding a route to the map and showing driving directions are affected when HAZMAT is present.

- Total distance and total time taken will be calculated individually for each segment of the route.
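One way to picture stop pair level hazmat handling is shown below. The text above does not state how relevance is determined, so the sketch assumes that an item is relevant to a stop pair when it is on board between those two stops. The function name and the item fields are hypothetical.

```python
def hazmat_items_by_stop_pair(stop_count, items):
    """Map each consecutive stop pair to the IDs of hazmat items on board.

    items is a list of dicts with 'id', 'hazmat' (bool), and 1-based
    'pickup_stop' and 'drop_stop' numbers. Assumption: an item is relevant
    to a pair when it is picked up at or before the pair's origin and
    dropped at or after the pair's destination.
    """
    result = {}
    for origin in range(1, stop_count):
        destination = origin + 1
        result[(origin, destination)] = [
            item["id"]
            for item in items
            if item["hazmat"]
            and item["pickup_stop"] <= origin
            and item["drop_stop"] >= destination
        ]
    return result


items = [
    {"id": "PAINT", "hazmat": True, "pickup_stop": 1, "drop_stop": 2},
    {"id": "BOXES", "hazmat": False, "pickup_stop": 1, "drop_stop": 3},
]
by_pair = hazmat_items_by_stop_pair(3, items)
```

In this example the hazmat item travels only on the first leg, so only the pair (1, 2) carries hazmat restrictions and the pair (2, 3) can be routed without them.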

Steps to Enable

Previously, the external distance engine parameters for HAZMAT on the map did not vary based on the number of stops on the shipment.

Workbench Map - Lock Zoom Level and Lock View on Map

This feature provides you with two new lock functions on the Workbench Map toolbar. The new lock functions improve the usability of the map display by allowing you to control the view and zoom more directly.

LOCK ZOOM LEVEL

- Click the Lock Zoom Level icon to lock that map's zoom level and maintain the current zoom view of the map.

- For example, if you select shipment A which starts and ends on the west coast of the US and you click the Lock Zoom Level icon, the map will stay zoomed into that shipment. Next, if you select a second shipment, shipment B which starts and ends on the east coast of the US, the map will not redraw to show that shipment. Instead the map zoom will remain on shipment A which starts and ends on the west coast of the US.

- If you manually zoom in or out using the mouse or map controls, the zoom level icon is automatically unlocked.

LOCK VIEW

- Click the Lock View icon to lock that map and keep the current items on the map; then, if you click another object such as a shipment or order release to be added to the map, the map does not change.

- This is useful when you have two maps, because you can add a shipment to the map and then lock that map. With one map locked, you can add a second shipment to the second map.
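The two locks can be modeled as simple state on a map region. The sketch below is a simplified assumption of the behavior described above, not the actual map implementation: the `MapRegion` class is hypothetical, the viewport is reduced to "the object the map is fitted to", and it assumes that a zoom-locked map still accepts new objects without refitting.

```python
class MapRegion:
    """Minimal model of a Workbench Map region with the two lock functions."""

    def __init__(self):
        self.items = []           # objects currently shown on the map
        self.focus = None         # object the viewport is fitted to
        self.zoom_locked = False  # Lock Zoom Level
        self.view_locked = False  # Lock View

    def add(self, obj):
        if self.view_locked:
            return                # Lock View: the map does not change
        self.items.append(obj)
        if not self.zoom_locked:
            self.focus = obj      # redraw to show the newly added object

    def manual_zoom(self):
        # Zooming with the mouse or map controls releases the zoom lock.
        self.zoom_locked = False


region = MapRegion()
region.add("Shipment A")
region.zoom_locked = True
region.add("Shipment B")          # focus stays on Shipment A
```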

Steps to Enable

By default, the Lock Zoom Level icon is shown as unlocked. When you click the Lock Zoom Level icon to lock a map, the icon is shown as locked.

Workbench Map - Additional HERE Supported Parameters

The following additional HERE parameters are available for use in your HERE Workbench map.

BOAT_FERRIES

- 0 - Normal

- 1 - Avoid

- 2 - Soft Exclude

- 3 - Strict Exclude

MOTORWAYS

- If you specify motorway:-2, OTM will exclude motorways (highways) from the route calculation if it is possible to find a route that avoids them. Otherwise, the route calculation will include motorways.

- Valid values are:

- 0 - Normal

- -1 - Avoid

- -2 - Soft Exclude

- -3 - Strict Exclude

TUNNELS

- 0 - Normal

- 1 - Avoid

- 2 - Soft Exclude

- 3 - Strict Exclude

RAIL_FERRIES

- 0 - Normal

- 1 - Avoid

- 2 - Soft Exclude

- 3 - Strict Exclude

DIRT_ROAD

- 0 - Normal

- 1 - Avoid

- 2 - Soft Exclude

- 3 - Strict Exclude

FIXED_LIMITED_WEIGHT

- If this is set, OTM uses the weight specified in this parameter rather than the weight calculated from the OTM shipment.

- Values entered must be in tons.

- For example, if the shipment limit is 8 tons then the parameter must be set to 8.

TUNNEL_CATEGORY

- For truck routing only. Specifies the tunnel category used to restrict certain route links. The route will pass only through tunnels of a less strict category.

- The HAZMAT_ROUTING parameter needs to be set to Y for the routing engine to calculate the value for tunnel category. See the HAZMAT_ROUTING parameter details as well.

- The valid values are B, C, D and E with B being the least restrictive.

EQUIPMENT_RESTRICTIONS

- This is used to ensure proper street level routing.

- OTM needs to send equipment dimensions (length, width and height) for the EDE to use.

- Correct dimensions are needed for street level directions for tunnel and overhead height, bridge weight restrictions and so on.

- Equipment dimensions include the length, width and height of the total equipment. To send them, set this parameter to Y (on).

- Restrictions will not be used if the parameter is set to N (the default).
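The valid values listed above lend themselves to a simple pre-check before a configuration is saved. The sketch below is a hypothetical helper, not part of OTM. It encodes only the values given in this section and assumes that FIXED_LIMITED_WEIGHT must be a positive number.

```python
# Allowed values as listed in this section, compared as strings.
LEVEL_PARAMS = ("BOAT_FERRIES", "TUNNELS", "RAIL_FERRIES", "DIRT_ROAD")
ALLOWED_VALUES = {name: {"0", "1", "2", "3"} for name in LEVEL_PARAMS}
ALLOWED_VALUES["MOTORWAYS"] = {"0", "-1", "-2", "-3"}
ALLOWED_VALUES["TUNNEL_CATEGORY"] = {"B", "C", "D", "E"}
ALLOWED_VALUES["EQUIPMENT_RESTRICTIONS"] = {"Y", "N"}


def validate_here_parameters(params):
    """Return a list of error messages; an empty list means no problems found."""
    errors = []
    for name, value in params.items():
        text = str(value).strip()
        if name == "FIXED_LIMITED_WEIGHT":
            # Assumption: the weight (in tons) must parse as a positive number.
            try:
                weight_ok = float(text) > 0
            except ValueError:
                weight_ok = False
            if not weight_ok:
                errors.append(f"{name}: expected a positive weight in tons, got {text!r}")
        elif name in ALLOWED_VALUES and text not in ALLOWED_VALUES[name]:
            allowed = ", ".join(sorted(ALLOWED_VALUES[name]))
            errors.append(f"{name}: {text!r} is not one of {allowed}")
    return errors
```

For example, `validate_here_parameters({"MOTORWAYS": "2"})` reports one error, because the motorway values in the list above are zero or negative. Parameters not covered in this section are ignored by the check.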

Steps to Enable

You don't need to do anything to enable this feature.

Workbench Map - ALK Rail Routes

A new MAP SHIPMENT LINE STYLE is available for RAIL. If RAIL is specified, the shipments have sufficient rail-related details, and ALK Rail is configured properly, the actual rail routing is displayed on the map for all rail shipments.

- RAIL is for use with ALK only. A separate license is required for ALK RAIL.

- ALK's RAIL routing API is called for a RAIL shipment only if the RAIL mode's line style is set to RAIL.

To plot rail shipments, ALK's API needs these parameters for each stop location.

- Format - Station format type

- Name - Station name or code

- Railroad - Standard Carrier Alpha Code

The following maps the above parameters to the corresponding OTM object from which the values can be retrieved.

- railroad = BuyShipment -> Mode -> Air/Rail Route Code -> Rail Route Code ID -> Carrier SCAC (from the list of Sequences)

- When the Rail Route Code has more than one sequence with a "Rail Junction Code", all the intermediate junctions are used to fetch the routes between the stop locations.

- A rail junction's Carrier SCAC code comes from the next sequence's SCAC code.